pFedLVM: A Large Vision Model-Driven and Latent Feature-Based Personalized Federated Learning Framework in Autonomous Driving

May 07, 2024

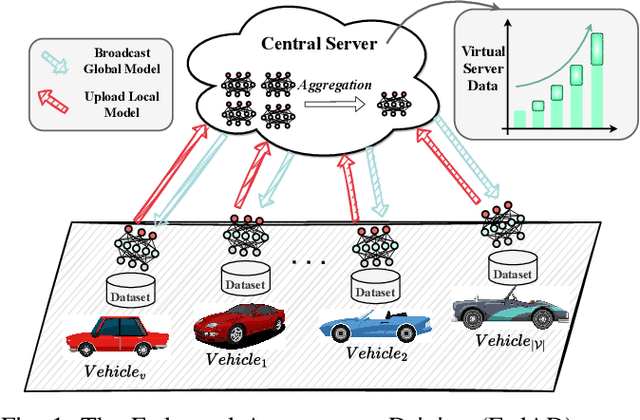

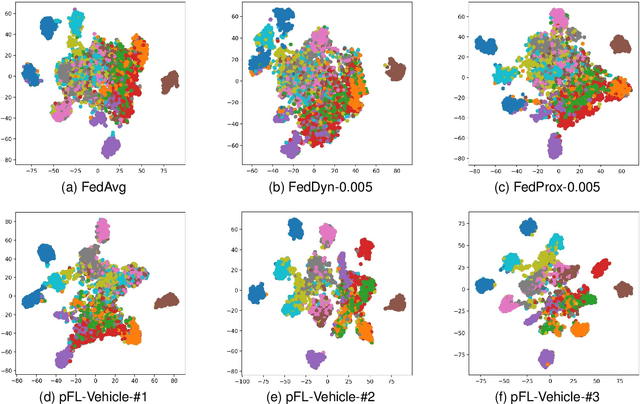

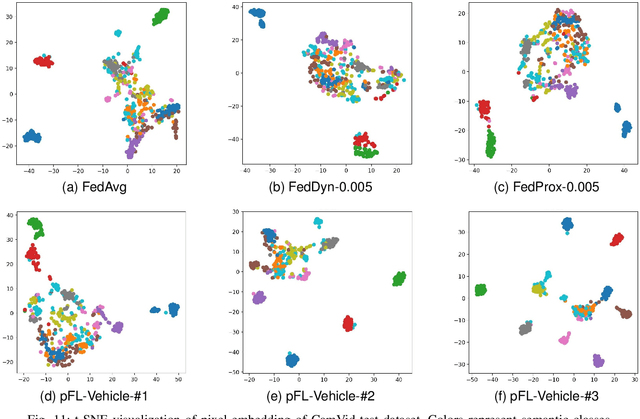

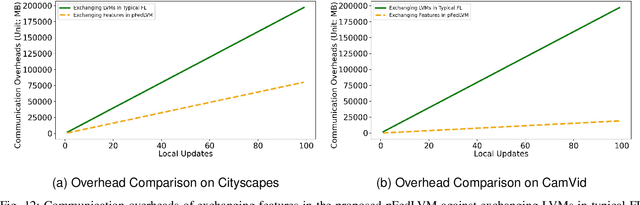

Deep learning-based Autonomous Driving (AD) models often generalize poorly because of data heterogeneity in an environment whose domain shifts constantly. While Federated Learning (FL) could improve the generalization of an AD model (a setup known as a FedAD system), conventional models often struggle with under-fitting as the amount of accumulated training data progressively increases. To address this issue, employing Large Vision Models (LVMs) in FedAD instead of conventional small models is a viable option for learning better representations from a vast volume of data. However, implementing LVMs in FedAD introduces three challenges: (I) the extremely high communication overhead of transmitting LVMs between participating vehicles and a central server; (II) the lack of computing resources to deploy LVMs on each vehicle; and (III) the performance drop caused by the LVM focusing on shared features while overlooking local vehicle characteristics. To overcome these challenges, we propose pFedLVM, an LVM-Driven, Latent Feature-Based Personalized Federated Learning framework. In this approach, the LVM is deployed only on the central server, which effectively alleviates the computational burden on individual vehicles. Furthermore, the central server and the vehicles exchange learned features rather than LVM parameters, which significantly reduces communication overhead. In addition, we utilize both the shared features from all participating vehicles and the individual characteristics of each vehicle to establish a personalized learning mechanism. This enables each vehicle's model to learn features from others while preserving its personalized characteristics, thereby outperforming the globally shared models trained in general FL. Extensive experiments demonstrate that pFedLVM outperforms existing state-of-the-art approaches.
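The data flow described in the abstract (vehicles upload features, the server derives shared features, each vehicle personalizes) can be illustrated with a toy sketch. Everything here is an assumption made for illustration and is not taken from the paper: the names `Vehicle`, `Server`, `run_round`, and `alpha` are hypothetical, a plain average stands in for the server-side LVM, and a linear blend stands in for the personalized learning mechanism.

```python
from dataclasses import dataclass
from typing import Dict, List

Vector = List[float]


def mean_vectors(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


@dataclass
class Vehicle:
    name: str
    # Hypothetical latent feature produced by a small on-board model.
    local_feature: Vector

    def personalize(self, shared: Vector, alpha: float) -> Vector:
        # Assumption: blend shared and local features linearly.
        # alpha = 1.0 keeps only shared features, 0.0 only local ones.
        return [alpha * s + (1.0 - alpha) * l
                for s, l in zip(shared, self.local_feature)]


class Server:
    def shared_features(self, uploads: List[Vector]) -> Vector:
        # Placeholder for the server-side LVM: a plain average.
        # Only features reach the server; no model parameters are sent.
        return mean_vectors(uploads)


def run_round(vehicles: List[Vehicle], server: Server,
              alpha: float = 0.5) -> Dict[str, Vector]:
    """One illustrative round: upload features, aggregate, personalize."""
    uploads = [v.local_feature for v in vehicles]
    shared = server.shared_features(uploads)
    return {v.name: v.personalize(shared, alpha) for v in vehicles}


if __name__ == "__main__":
    fleet = [Vehicle("car_a", [0.0, 2.0]), Vehicle("car_b", [2.0, 0.0])]
    print(run_round(fleet, Server()))
```

In this sketch each vehicle ends up with a representation that moves toward the fleet-wide features while retaining part of its own, which mirrors the trade-off the abstract describes; the actual feature extraction and personalization steps of pFedLVM are not specified here.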
