Performance Analysis of Out-of-Distribution Detection on Various Trained Neural Networks


Mar 29, 2021

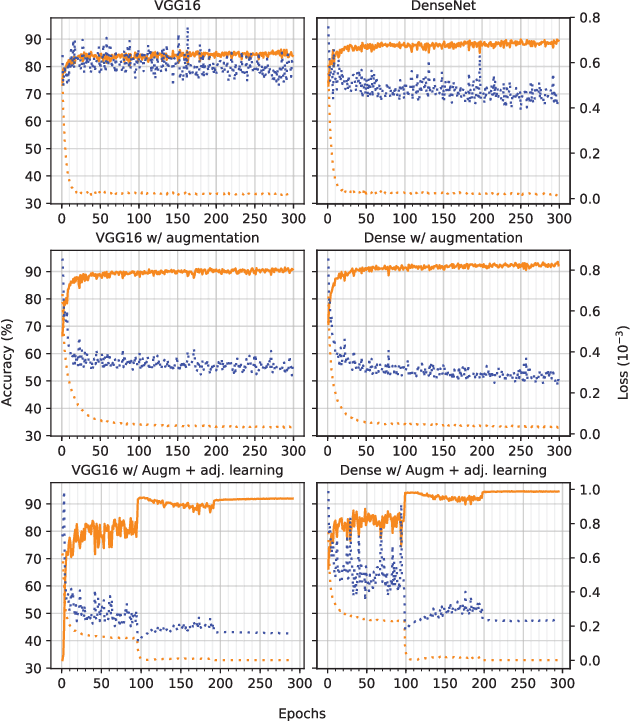

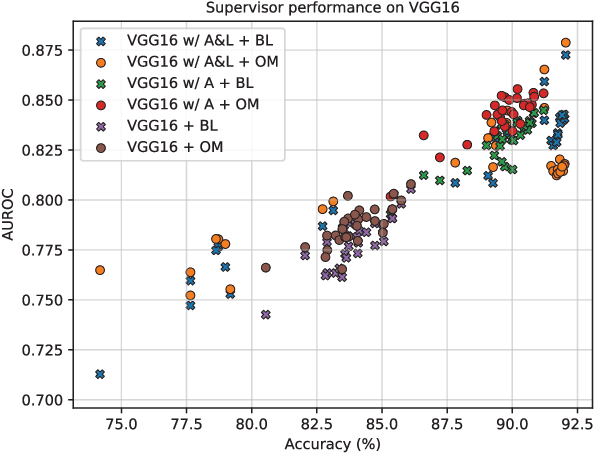

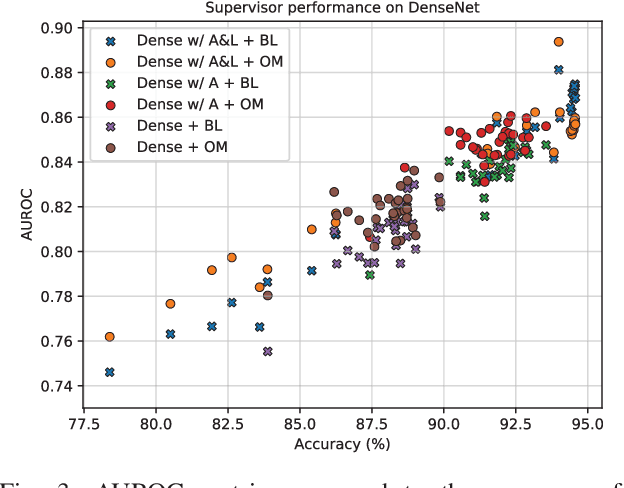

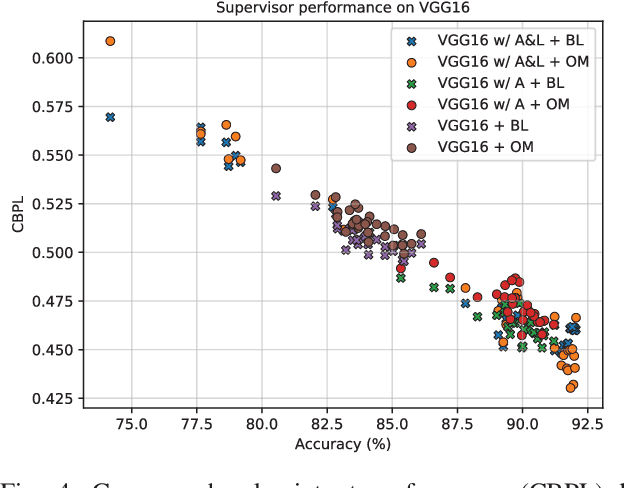

Deep learning has improved several application areas in recent years. For non-safety-related products, adopting AI and ML is not an issue, whereas in safety-critical applications the robustness of such approaches remains a concern. A common challenge for Deep Neural Networks (DNNs) occurs when they are exposed to previously unseen out-of-distribution samples, for which DNNs can yield high-confidence predictions despite having no prior knowledge of the input. In this paper we analyse two supervisors on two well-known DNNs with varied training setups and find that outlier detection performance improves with the quality of the training procedure. We analyse the performance of each supervisor after every epoch of the training cycle to investigate how supervisor performance evolves as accuracy converges. Understanding the relationship between training results and supervisor performance is valuable for improving model robustness and indicates where more work is needed to create generalized models for safety-critical applications.
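To make the idea of a supervisor concrete, the following is a minimal sketch and not the paper's implementation. The abstract does not name the two supervisors it studies, so this example assumes a simple maximum-softmax-probability baseline; the function names `anomaly_score` and `auroc` are hypothetical. It scores each input by how unconfident the network is and summarizes how well those scores separate in-distribution from out-of-distribution samples with the area under the ROC curve.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def anomaly_score(logits):
    """Assumed baseline supervisor: 1 minus the maximum softmax probability.

    Higher scores mean the network is less confident, which this baseline
    treats as evidence that the input is out-of-distribution.
    """
    return 1.0 - softmax(logits).max(axis=1)


def auroc(inlier_scores, outlier_scores):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a random outlier receives a higher anomaly
    score than a random inlier, with ties counted as one half.
    """
    diff = outlier_scores[:, None] - inlier_scores[None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


# Illustrative logits only (made up for this sketch, not results from the paper).
inlier_logits = np.array([[10.0, 0.0, 0.0], [0.0, 8.0, 0.0]])
outlier_logits = np.array([[1.0, 1.0, 1.0], [0.5, 0.4, 0.6]])

score = auroc(anomaly_score(inlier_logits), anomaly_score(outlier_logits))
```

Under these assumptions, calling `auroc` on held-out inlier and outlier logits after each training epoch would give one supervisor-performance value per epoch, which can then be compared against the accuracy curve.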
