Path Design and Resource Management for NOMA enhanced Indoor Intelligent Robots

Nov 26, 2020

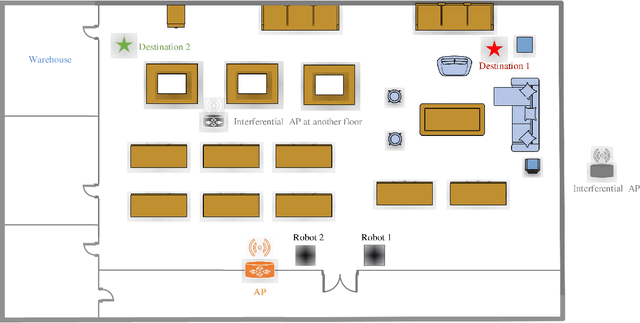

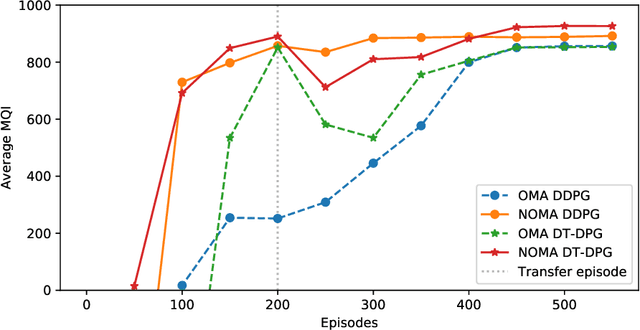

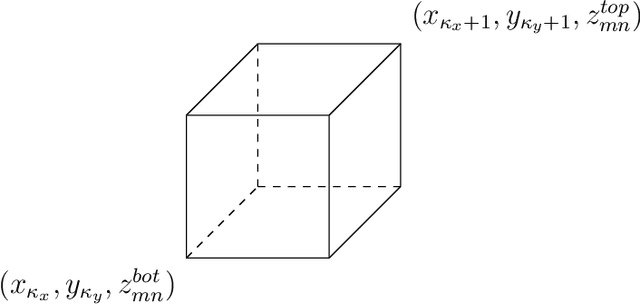

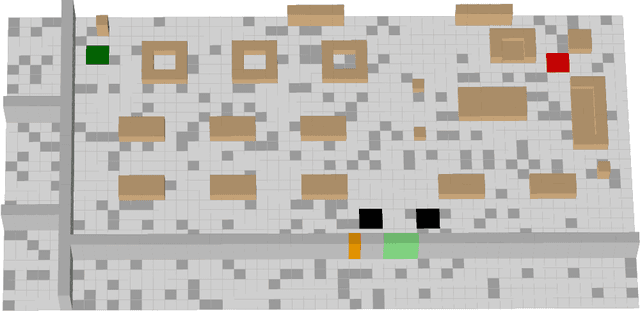

A communication-enabled service framework for indoor intelligent robots (IRs) is proposed, in which the non-orthogonal multiple access (NOMA) technique is adopted to enable highly reliable communications. Building on the indoor channel model recently proposed by the International Telecommunication Union (ITU), a Lego modeling method is proposed that deterministically describes the indoor layout and channel state in order to construct a radio map. The resulting radio map serves as a virtual environment for training the reinforcement learning agent, which saves training time and hardware costs. Based on the proposed communication model, the motions of IRs that need to reach designated mission destinations and their corresponding downlink power allocation policy are jointly optimized to maximize the mission efficiency and communication reliability of the IRs. To solve this optimization problem, a novel reinforcement learning approach, the deep transfer deterministic policy gradient (DT-DPG) algorithm, is proposed. Simulation results demonstrate that 1) NOMA techniques effectively improve the communication reliability of IRs; 2) the radio map is suitable as a virtual training environment, and its statistical channel state information improves training efficiency by about 30%; 3) the proposed DT-DPG algorithm outperforms the conventional deep deterministic policy gradient (DDPG) algorithm in terms of optimization performance, training time, and ability to escape local optima.
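To make the downlink power allocation idea concrete, the following is a minimal sketch of the textbook two-user downlink NOMA rate calculation with successive interference cancellation (SIC). It is not the paper's system model, which the abstract does not spell out: the function name `noma_two_user_rates`, its parameters, and the two-user setting are all assumptions chosen for illustration.

```python
import math


def noma_two_user_rates(p_total, alpha_weak, gain_weak, gain_strong, noise):
    """Achievable rates (bit/s/Hz) for textbook two-user downlink NOMA.

    Hypothetical helper, not taken from the paper. Assumes:
    - alpha_weak is the fraction of transmit power given to the weak user,
    - gain_weak <= gain_strong are channel power gains,
    - the strong user removes the weak user's signal perfectly via SIC.
    """
    if not 0.0 < alpha_weak < 1.0:
        raise ValueError("alpha_weak must lie strictly between 0 and 1")
    if gain_weak > gain_strong:
        raise ValueError("gain_weak must not exceed gain_strong")

    p_weak = alpha_weak * p_total
    p_strong = (1.0 - alpha_weak) * p_total

    # Weak user decodes its own signal, treating the strong user's as noise.
    sinr_weak = p_weak * gain_weak / (p_strong * gain_weak + noise)
    # Strong user cancels the weak user's signal first, then decodes its own.
    snr_strong = p_strong * gain_strong / noise

    return math.log2(1.0 + sinr_weak), math.log2(1.0 + snr_strong)


# Example with made-up numbers: 80% of the power goes to the weak user.
rate_weak, rate_strong = noma_two_user_rates(
    p_total=1.0, alpha_weak=0.8, gain_weak=1.0, gain_strong=10.0, noise=0.2
)
```

In a joint optimization such as the one the abstract describes, a power split like `alpha_weak` would be one of the quantities chosen by the learning agent, while the channel gains would depend on each robot's position in the radio map.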
