OPTION: OPTImization Algorithm Benchmarking ONtology

Nov 21, 2022

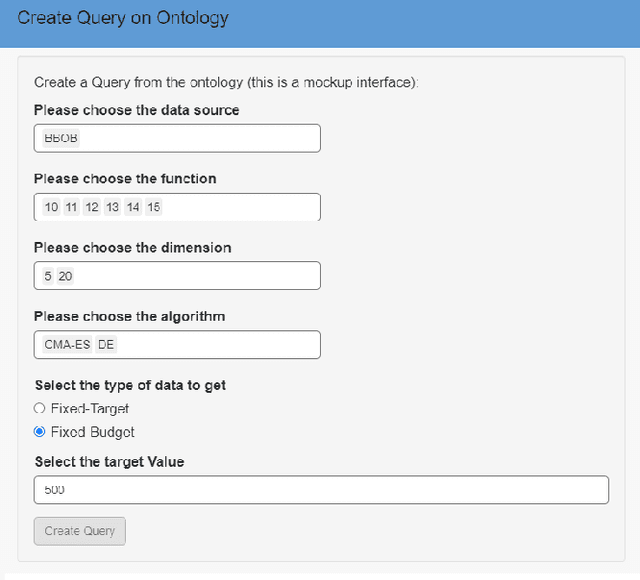

Many optimization algorithm benchmarking platforms allow users to share their experimental data to promote reproducible and reusable research. However, different platforms use different data models and formats, which drastically complicates the identification of relevant datasets, their interpretation, and their interoperability. Therefore, a semantically rich, ontology-based, machine-readable data model that can be used by different platforms is highly desirable. In this paper, we report on the development of such an ontology, which we call OPTION (OPTImization algorithm benchmarking ONtology). Our ontology provides the vocabulary needed for semantic annotation of the core entities involved in the benchmarking process, such as algorithms, problems, and evaluation measures. It also provides means for automatic data integration, improved interoperability, and powerful querying capabilities, thereby increasing the value of the benchmarking data. We demonstrate the utility of OPTION by annotating and querying a corpus of benchmark performance data from the BBOB collection of the COCO framework and from the Yet Another Black-Box Optimization Benchmark (YABBOB) family of the Nevergrad environment. In addition, we integrate features of the BBOB functional performance landscape into the OPTION knowledge base using publicly available datasets with exploratory landscape analysis. Finally, we integrate the OPTION knowledge base into the IOHprofiler environment and provide users with the ability to perform meta-analysis of performance data.
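The idea of annotating records from different platforms with one shared vocabulary, and then querying them together, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the record field names, the `ex:` terms, and the helper functions are hypothetical and are not the actual OPTION vocabulary or the export formats of COCO or Nevergrad. Plain Python tuples stand in for RDF triples.

```python
# Illustrative sketch only: "ex:" terms and field names are hypothetical,
# not the real OPTION vocabulary or any platform's actual export format.

Triple = tuple[str, str, object]

# Two platforms describing the same kind of run with different field names.
platform_a = [
    {"alg": "CMA-ES", "func": "f1", "dim": 5, "best": 1e-8},
    {"alg": "PSO", "func": "f1", "dim": 10, "best": 2e-3},
]
platform_b = [
    {"optimizer": "DE", "problem": "sphere", "dimension": 5, "loss": 3e-4},
]


def annotate(run_id: str, algorithm: str, problem: str,
             dimension: int, value: float) -> list[Triple]:
    """Describe one run as triples using a single shared vocabulary."""
    return [
        (run_id, "rdf:type", "ex:BenchmarkRun"),
        (run_id, "ex:hasAlgorithm", algorithm),
        (run_id, "ex:hasProblem", problem),
        (run_id, "ex:hasDimension", dimension),
        (run_id, "ex:hasBestValue", value),
    ]


# Map each platform's own field names onto the shared terms.
graph: list[Triple] = []
for i, r in enumerate(platform_a):
    graph += annotate(f"ex:runA{i}", r["alg"], r["func"], r["dim"], r["best"])
for i, r in enumerate(platform_b):
    graph += annotate(f"ex:runB{i}", r["optimizer"], r["problem"],
                      r["dimension"], r["loss"])


def algorithms_for_dimension(triples: list[Triple], dimension: int) -> list[str]:
    """Return the algorithms of all runs with the given problem dimension."""
    runs = {s for s, p, o in triples
            if p == "ex:hasDimension" and o == dimension}
    return sorted(str(o) for s, p, o in triples
                  if p == "ex:hasAlgorithm" and s in runs)


print(algorithms_for_dimension(graph, 5))
```

Once both sources are expressed with the same terms, a single query covers data from either platform without knowing how each one originally named its fields. A real knowledge base would use an RDF store and a query language such as SPARQL in place of these list comprehensions.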
