One-shot action recognition towards novel assistive therapies

Paper and Code

Feb 17, 2021

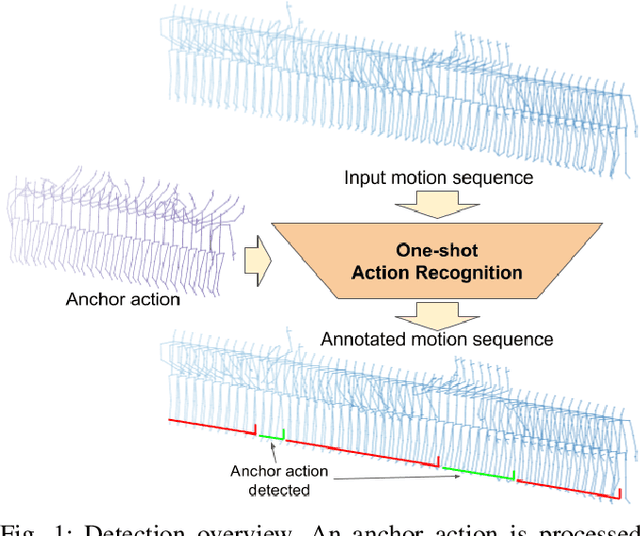

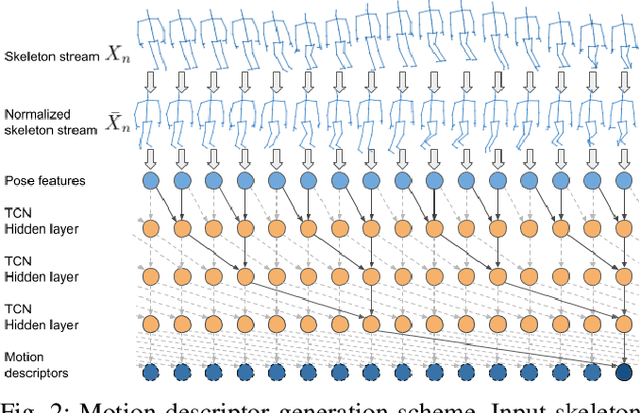

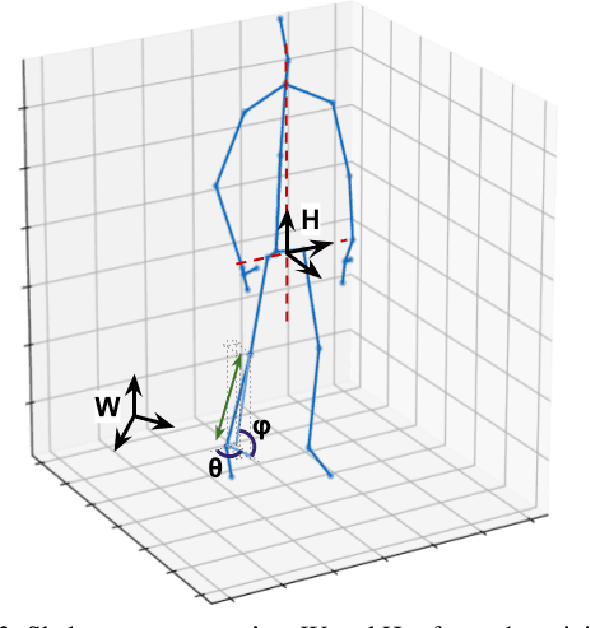

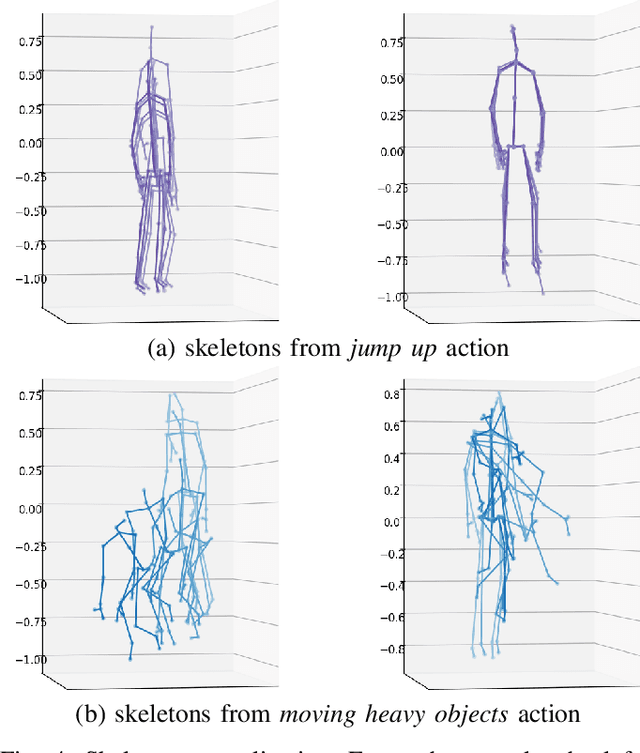

One-shot action recognition is a challenging problem, especially when the target video can contain one, more or none repetitions of the target action. Solutions to this problem can be used in many real world applications that require automated processing of activity videos. In particular, this work is motivated by the automated analysis of medical therapies that involve action imitation games. The presented approach incorporates a pre-processing step that standardizes heterogeneous motion data conditions and generates descriptive movement representations with a Temporal Convolutional Network for a final one-shot (or few-shot) action recognition. Our method achieves state-of-the-art results on the public NTU-120 one-shot action recognition challenge. Besides, we evaluate the approach on a real use-case of automated video analysis for therapy support with autistic people. The promising results prove its suitability for this kind of application in the wild, providing both quantitative and qualitative measures, essential for the patient evaluation and monitoring.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge