Omni-AD: Learning to Reconstruct Global and Local Features for Multi-class Anomaly Detection

Paper and Code

Mar 27, 2025

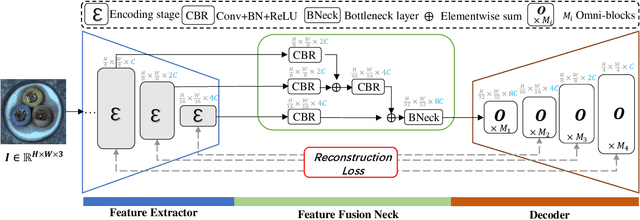

In multi-class unsupervised anomaly detection (MUAD), reconstruction-based methods learn to map input images to normal patterns in order to identify anomalous pixels. However, this strategy easily falls into the well-known "learning shortcut" issue, in which decoders fail to capture normal patterns and instead naively reconstruct both normal and abnormal samples. To address this, we propose to learn the input features in both global and local manners, forcing the network to memorize the normal patterns more comprehensively. Specifically, we design a two-branch decoder block, named Omni-block. One branch performs global feature learning: we serialize two self-attention blocks but replace the query in one and the (key, value) pair in the other with learnable tokens, thus capturing global features of normal patterns concisely and thoroughly. The local branch comprises depthwise separable convolutions, whose locality enables effective and efficient learning of local features for normal patterns. By stacking Omni-blocks, we build a framework, Omni-AD, that learns normal patterns at different granularities and reconstructs them progressively. Comprehensive experiments on public anomaly detection benchmarks show that our method outperforms state-of-the-art approaches in MUAD. Code is available at https://github.com/easyoo/Omni-AD.git.
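The global branch described above can be illustrated with a small, dependency-free sketch. It is not the authors' implementation (the linked repository is the reference for that). It assumes single-head attention with no projection layers, plain Python lists in place of tensors, and one possible wiring of the two blocks: the first uses learnable tokens as the query over the input features, and the second feeds that result as the query against a second set of learnable tokens used as key and value. The names `attention`, `global_branch`, `query_tokens`, and `kv_tokens` are hypothetical, and random values stand in for learned parameters.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, key, value):
    """Single-head scaled dot-product attention on lists of vectors."""
    dim = len(query[0])
    out = []
    for q in query:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in key]
        weights = softmax(scores)
        out.append([
            sum(w * v[j] for w, v in zip(weights, value))
            for j in range(len(value[0]))
        ])
    return out


def random_tokens(count, dim, rng):
    """Stand-in for learned parameters (assumption: random initial values)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(count)]


def global_branch(features, query_tokens, kv_tokens):
    """Two serialized attention blocks (wiring is an assumption of this sketch)."""
    # Block 1: learnable tokens act as the query; input features are key and value.
    summary = attention(query_tokens, features, features)
    # Block 2: the block-1 output is the query; learnable tokens are key and value.
    return attention(summary, kv_tokens, kv_tokens)


if __name__ == "__main__":
    rng = random.Random(0)
    features = random_tokens(6, 4, rng)      # 6 feature vectors of width 4
    query_tokens = random_tokens(6, 4, rng)  # hypothetical: one query token per position
    kv_tokens = random_tokens(3, 4, rng)     # hypothetical: 3 key/value tokens
    out = global_branch(features, query_tokens, kv_tokens)
    print(len(out), len(out[0]))  # prints "6 4"
```

Under this wiring, every output vector is a softmax-weighted average of `kv_tokens`, so the branch can only emit combinations of what those tokens store. That is one way to read the abstract's claim that learnable tokens force the network to memorize normal patterns rather than copy the input through.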
