Neuromorphic Eye-in-Hand Visual Servoing

Paper and Code

Apr 15, 2020

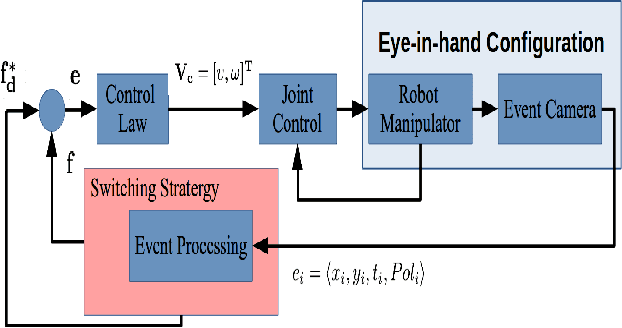

Robotic vision plays a major role in applications ranging from factory automation to service robots. However, traditional frame-based cameras limit continuous visual feedback because of their low sampling rate and the redundant data they produce for real-time image processing, especially in high-speed tasks. Event cameras offer human-like vision capabilities, observing dynamic changes asynchronously at high temporal resolution ($1\mu s$) with low latency and wide dynamic range. In this paper, we present a visual servoing method that uses an event camera and a switching control strategy to explore, reach, and grasp in order to complete a manipulation task. We devise three surface layers of active events to directly process the stream of events arising from relative motion. A purely event-based approach is adopted to extract corner features, localize them robustly using heat maps, and generate virtual features for tracking and alignment. Based on the visual feedback, the motion of the robot is controlled so that the temporally upcoming event features converge to the desired event in spatio-temporal space. The controller switches its strategy based on the sequence of operation to establish a stable grasp. The event-based visual servoing (EBVS) method is validated experimentally using a commercial robot manipulator in an eye-in-hand configuration. Experiments demonstrate the effectiveness of the EBVS method in tracking and grasping objects of different shapes without the need for re-tuning.
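As a rough illustration of the "surface of active events" idea mentioned in the abstract, the sketch below keeps a per-pixel map of the most recent event timestamp and derives a mask of recently active pixels. This is a generic single-layer version written for illustration, not the three-layer design of the paper; the function names `update_sae` and `recent_mask`, the `(x, y, t)` event tuple layout, the sensor size, and the time window are all assumptions.

```python
import numpy as np

def update_sae(sae, events):
    """Write each event's timestamp into the surface at its pixel.

    `events` is assumed to be an iterable of (x, y, t) tuples sorted by time,
    so a later event at the same pixel overwrites an earlier one.
    """
    for x, y, t in events:
        sae[y, x] = t
    return sae

def recent_mask(sae, t_now, window):
    """Boolean mask of pixels whose latest event lies within `window` of t_now."""
    return (t_now - sae) <= window

# Hypothetical 4x6 sensor; -inf marks pixels that have never fired.
sae = np.full((4, 6), -np.inf)

# Hypothetical events: (x, y, timestamp in seconds).
events = [(1, 2, 0.0010), (3, 0, 0.0015), (1, 2, 0.0042)]
update_sae(sae, events)

# Pixels active within the last millisecond before t_now.
mask = recent_mask(sae, t_now=0.0045, window=0.001)
```

Storing only the latest timestamp per pixel keeps the update cost constant per event, which is one reason this kind of structure suits asynchronous event streams; feature extraction such as corner detection would then operate on local patches of the surface.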
