Neural Stethoscopes: Unifying Analytic, Auxiliary and Adversarial Network Probing

Paper and Code

Jun 15, 2018

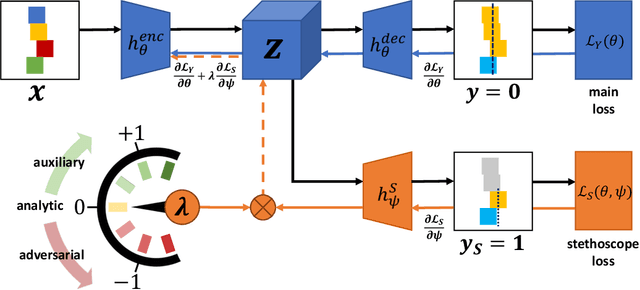

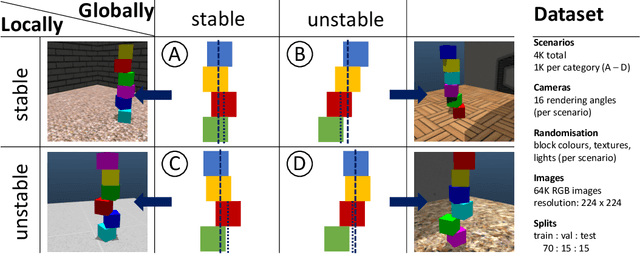

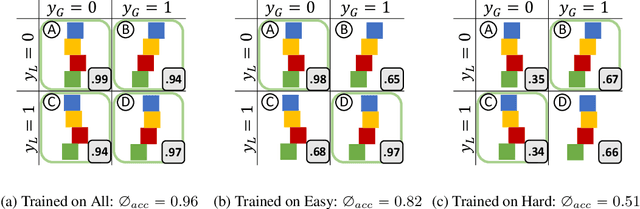

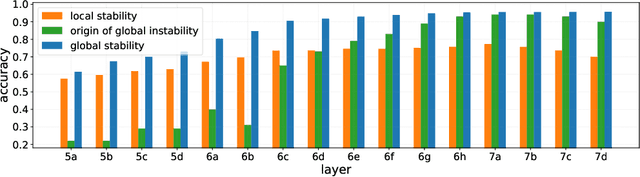

Model interpretability and systematic, targeted model adaptation present central tenets in machine learning for addressing limited or biased datasets. In this paper, we introduce neural stethoscopes as a framework for quantifying the degree of importance of specific factors of influence in deep networks as well as for actively promoting and suppressing information as appropriate. In doing so we unify concepts from multitask learning as well as training with auxiliary and adversarial losses. We showcase the efficacy of neural stethoscopes in an intuitive physics domain. Specifically, we investigate the challenge of visually predicting stability of block towers and demonstrate that the network uses visual cues which makes it susceptible to biases in the dataset. Through the use of stethoscopes we interrogate the accessibility of specific information throughout the network stack and show that we are able to actively de-bias network predictions as well as enhance performance via suitable auxiliary and adversarial stethoscope losses.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge