Multi-Slice Net: A novel light weight framework for COVID-19 Diagnosis


Aug 09, 2021

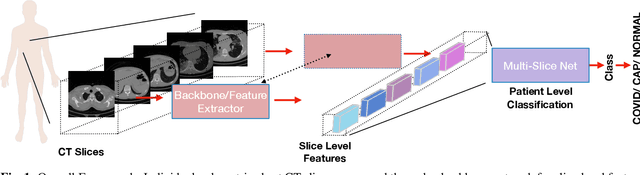

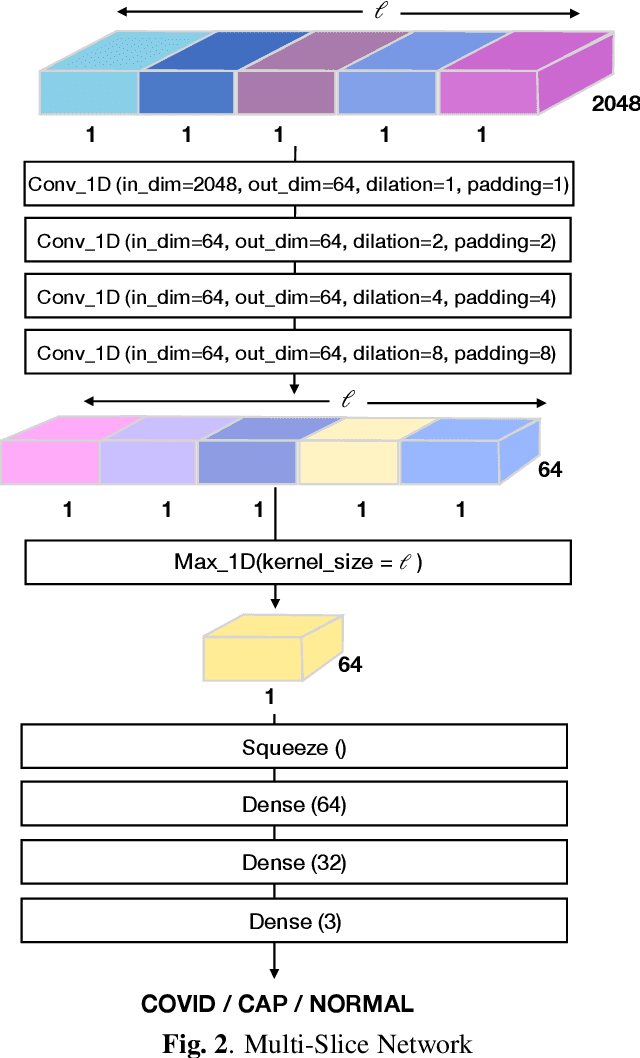

This paper presents a novel lightweight framework for diagnosing COVID-19 from CT scans. Our system uses a two-stage approach to produce robust and efficient diagnoses across heterogeneous patient-level inputs. In the first stage, a powerful backbone network acts as a feature extractor that captures discriminative slice-level features. In the second stage, a lightweight network aggregates these features into a patient-level diagnosis. The aggregation network is designed to have few trainable parameters while retaining enough capacity to generalise across the variation found in different CT volumes and to tolerate noise introduced during data acquisition. On the SPGC COVID-19 Radiomics Dataset, we achieve a significant performance increase over the baselines with only 2.5 million trainable parameters, and processing a single patient's CT volume takes 0.623 seconds on average on an NVIDIA GeForce RTX 2080 GPU.
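The two-stage idea can be illustrated with a minimal, standard-library Python sketch. It is not the authors' implementation: the abstract does not specify the backbone or the aggregation network, so `slice_features` is a hypothetical stand-in that computes simple intensity statistics, `aggregate` assumes mean and max pooling over slices, and the three output classes and the random, untrained weights are placeholders. The sketch shows only how per-slice features can be reduced to a fixed-size patient-level vector regardless of how many slices a CT volume contains.

```python
import math
import random
from typing import List

Vector = List[float]
Slice = List[List[float]]  # one 2D CT slice as rows of pixel intensities


def slice_features(ct_slice: Slice) -> Vector:
    """Stage 1 stand-in (assumption): map one slice to a fixed-length vector.

    A real system would use a trained backbone network here.
    """
    pixels = [p for row in ct_slice for p in row]
    mean = sum(pixels) / len(pixels)
    var = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    return [mean, math.sqrt(var), min(pixels), max(pixels)]


def aggregate(features: List[Vector]) -> Vector:
    """Stage 2a (assumption): mean and max pooling across slices.

    The output length depends only on the feature size, not the slice count.
    """
    dim = len(features[0])
    mean_pool = [sum(f[i] for f in features) / len(features) for i in range(dim)]
    max_pool = [max(f[i] for f in features) for i in range(dim)]
    return mean_pool + max_pool


def classify(pooled: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Stage 2b: a single linear layer followed by a softmax."""
    logits = [sum(w * x for w, x in zip(row, pooled)) + b
              for row, b in zip(weights, bias)]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def diagnose(volume: List[Slice], weights: List[Vector], bias: Vector) -> Vector:
    """Run both stages on one patient's CT volume."""
    per_slice = [slice_features(s) for s in volume]
    return classify(aggregate(per_slice), weights, bias)


def random_volume(rng: random.Random, num_slices: int, size: int = 4) -> List[Slice]:
    """Synthetic data for the demo only; not real CT intensities."""
    return [[[rng.random() for _ in range(size)] for _ in range(size)]
            for _ in range(num_slices)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Untrained placeholder weights: 3 hypothetical classes, 8 pooled features.
    weights = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(3)]
    bias = [0.0, 0.0, 0.0]
    for num_slices in (5, 40):  # volumes with different slice counts
        probs = diagnose(random_volume(rng, num_slices), weights, bias)
        print(num_slices, [round(p, 3) for p in probs])
```

Because the pooling step collapses the slice dimension, only the small classifier after it would need to handle patient-level variation, which is one plausible way to keep the aggregation stage small.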
