Monocular Vision-based Vehicle Localization Aided by Fine-grained Classification

Apr 21, 2018

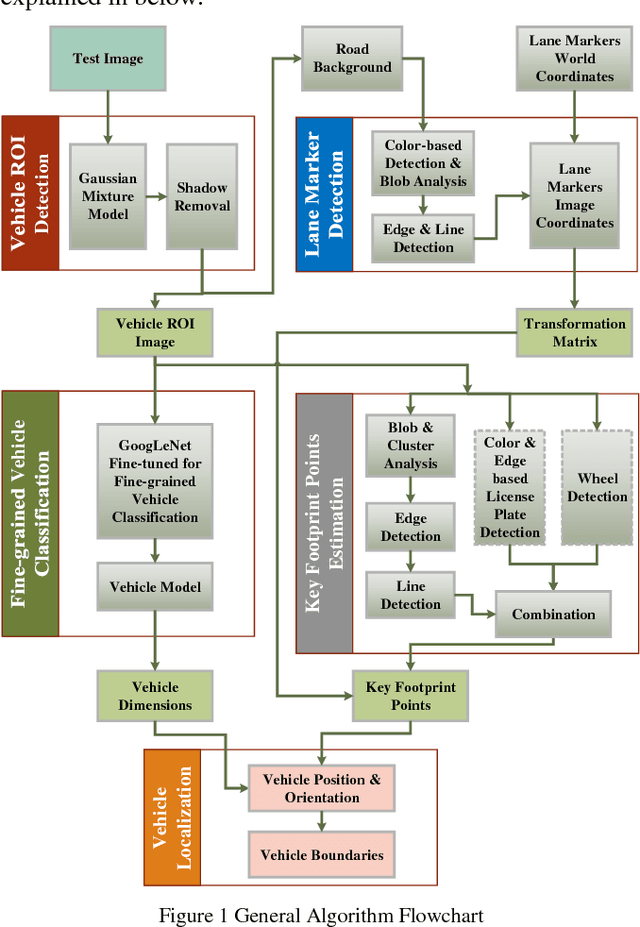

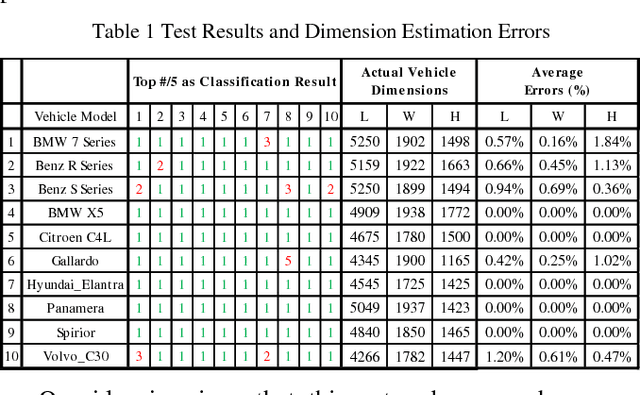

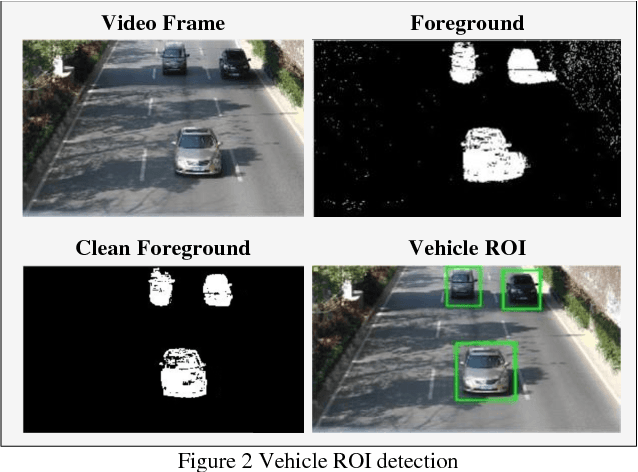

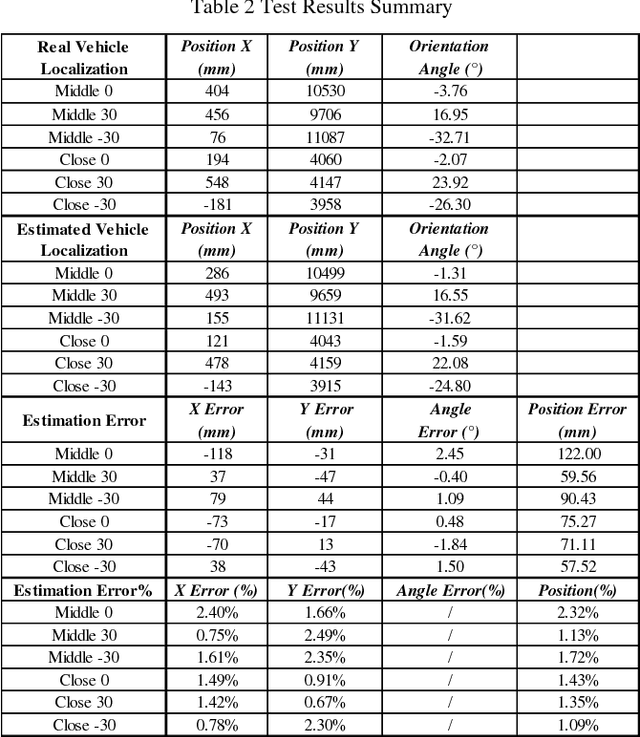

Monocular camera systems are prevalent in intelligent transportation systems, but so far they have rarely been used for dimensional purposes such as accurately estimating a vehicle's location. In this paper, we show that this capability can be realized. By integrating a series of advanced computer vision techniques, including foreground extraction and edge and line detection, and by using deep learning networks for fine-grained vehicle model classification, we developed an algorithm that estimates a vehicle's location (position, orientation, and boundaries) within its environment down to 3.79 percent position accuracy and 2.5 degrees orientation accuracy. With this enhancement, today's massive surveillance camera systems could potentially act as e-traffic police and enable many new intelligent transportation applications, for example, guiding vehicles for parking or even supporting autonomous driving.
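The abstract does not spell out how fine-grained classification feeds into localization. One plausible link, and an assumption here, is that the recognized vehicle model supplies real-world dimensions, which give a single camera the metric scale it otherwise lacks. The minimal sketch below illustrates two ingredients under that assumption: a dominant orientation computed from detected line segments, and a range estimate from a known vehicle length via the pinhole model. The model labels, lengths, focal length, and function names are hypothetical, and the fronto-parallel pinhole approximation is a simplification for illustration, not the authors' method.

```python
import math
from dataclasses import dataclass

# Hypothetical lookup of real vehicle lengths (metres) keyed by fine-grained model label.
# These labels and values are illustrative placeholders, not data from the paper.
MODEL_LENGTH_M = {"sedan_a": 4.6, "suv_b": 4.9}


@dataclass
class Segment:
    """A detected line segment in image (pixel) coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float

    def length(self) -> float:
        return math.hypot(self.x2 - self.x1, self.y2 - self.y1)

    def angle(self) -> float:
        # Angle relative to the image x-axis (image y points down).
        return math.atan2(self.y2 - self.y1, self.x2 - self.x1)


def dominant_orientation_deg(segments: list[Segment]) -> float:
    """Length-weighted mean orientation of undirected segments, in [-90, 90] degrees.

    Angles are doubled before averaging so that a segment and its reversal
    (angle + 180 degrees) vote for the same orientation.
    """
    sx = sum(s.length() * math.cos(2 * s.angle()) for s in segments)
    sy = sum(s.length() * math.sin(2 * s.angle()) for s in segments)
    return math.degrees(0.5 * math.atan2(sy, sx))


def range_from_known_length(focal_px: float, real_length_m: float, pixel_length: float) -> float:
    """Pinhole similar triangles: Z = f * L / l.

    Assumes the measured vehicle edge is roughly parallel to the image plane.
    """
    if pixel_length <= 0:
        raise ValueError("pixel_length must be positive")
    return focal_px * real_length_m / pixel_length


def estimate_range(model: str, focal_px: float, pixel_length: float) -> float:
    """Look up the classified model's (hypothetical) length, then apply the pinhole estimate."""
    return range_from_known_length(focal_px, MODEL_LENGTH_M[model], pixel_length)


if __name__ == "__main__":
    edges = [Segment(0, 0, 100, 0), Segment(200, 5, 0, 5), Segment(10, 10, 110, 12)]
    print(dominant_orientation_deg(edges))  # close to 0: the edges are near-horizontal
    print(estimate_range("sedan_a", focal_px=1000.0, pixel_length=230.0))  # about 20 m
```

The doubled-angle average is used because line detectors return undirected segments; averaging raw angles would let a segment at 179 degrees cancel one at 1 degree. A real system would also need calibrated camera extrinsics and a ground-plane model to turn these image-space quantities into a position and heading in the scene.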
