Meta-KD: A Meta Knowledge Distillation Framework for Language Model Compression across Domains

Dec 02, 2020

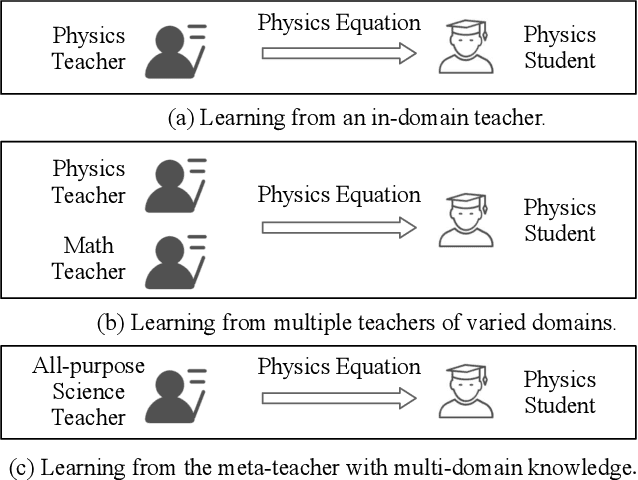

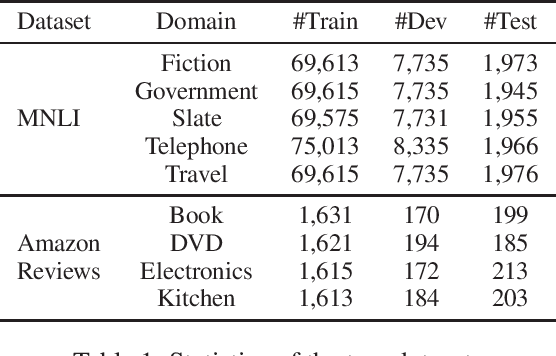

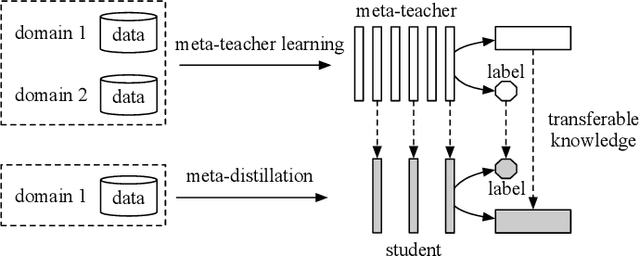

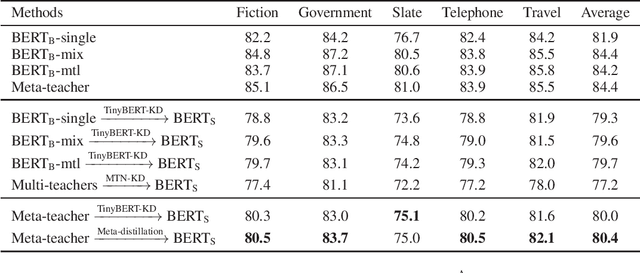

Pre-trained language models have been applied to various NLP tasks with considerable performance gains. However, their large size and long inference time limit their deployment in real-time applications. A typical remedy is knowledge distillation, which distills a large teacher model into a small student model. Most such studies, however, focus on a single domain only and ignore transferable knowledge from other domains. We argue that training a teacher on transferable knowledge digested across domains gives it better generalization capability, which in turn helps knowledge distillation. To this end, we propose a Meta-Knowledge Distillation (Meta-KD) framework that, inspired by meta-learning, builds a meta-teacher model capturing transferable knowledge across domains and uses it to pass knowledge to students. Specifically, we first use a cross-domain learning process to train the meta-teacher on multiple domains, and then propose a meta-distillation algorithm that learns single-domain student models with guidance from the meta-teacher. Experiments on two public multi-domain NLP tasks show the effectiveness and superiority of the proposed Meta-KD framework. We also demonstrate the capability of Meta-KD in both few-shot and zero-shot learning settings.
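
For readers unfamiliar with the teacher-to-student step mentioned above, the following is a minimal sketch of a generic soft-label distillation loss: a hard-label cross-entropy term blended with a temperature-scaled KL term between teacher and student predictions. It is not the Meta-KD meta-distillation algorithm, whose details the abstract does not give. The function names, the `temperature` and `alpha` parameters, and the example logits are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Generic distillation loss for one example (illustrative, hypothetical API).

    alpha weights the hard-label cross-entropy; (1 - alpha) weights the
    KL divergence from teacher to student at the given temperature.
    """
    # Hard-label term: cross-entropy of the student against the true class.
    hard = -math.log(softmax(student_logits)[label])

    # Soft-label term: KL(teacher || student) on softened distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(t * (math.log(t) - math.log(s))
               for t, s in zip(p_teacher, p_student) if t > 0)

    # The T^2 factor keeps the soft-term gradient scale comparable across T.
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


# Made-up logits for a 3-class problem; class 2 is the true label.
loss = distillation_loss([0.5, 1.0, 2.0], [0.2, 0.9, 3.0], label=2)
```

In a cross-domain setting such as the one the abstract describes, the teacher logits would come from a teacher trained on several domains, while the student is trained on one. How Meta-KD weights or selects that knowledge is not specified here, so this sketch leaves it out.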
