Meta Adaptive Neural Ranking with Contrastive Synthetic Supervision

Dec 29, 2020

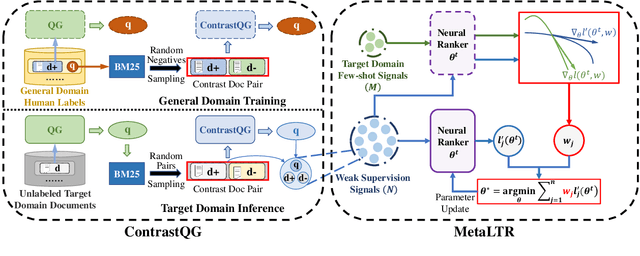

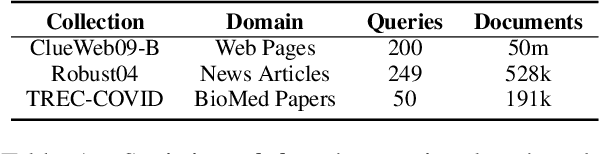

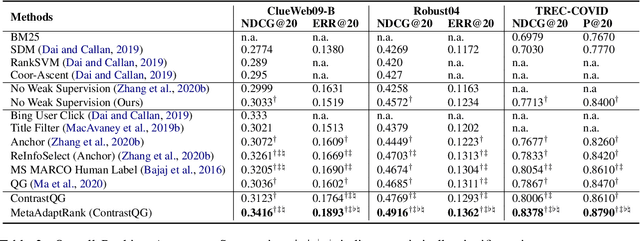

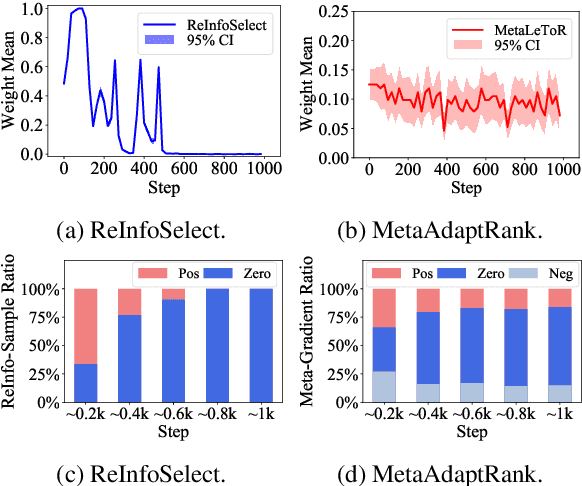

Neural Information Retrieval (Neu-IR) models have proven effective and thrive on end-to-end training with massive amounts of high-quality relevance labels. However, relevance labels in such quantities are a luxury and are unavailable in many ranking scenarios, such as biomedical search. This paper improves Neu-IR in these few-shot search scenarios by meta-adaptively training neural rankers with synthetic weak supervision. We first use contrastive query generation (ContrastQG) to synthesize more informative queries that serve as in-domain weak relevance labels, and then filter them with meta-adaptive learning to rank (MetaLTR) so that neural rankers generalize better to the target few-shot domain. Experiments on three search domains (web, news, and biomedical) demonstrate significantly improved few-shot accuracy of neural rankers with our weak supervision framework. The code of this paper will be open-sourced.
