LINDT: Tackling Negative Federated Learning with Local Adaptation

Paper and Code

Nov 23, 2020

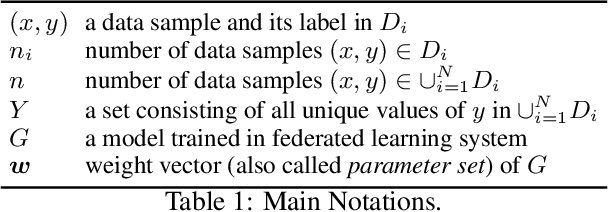

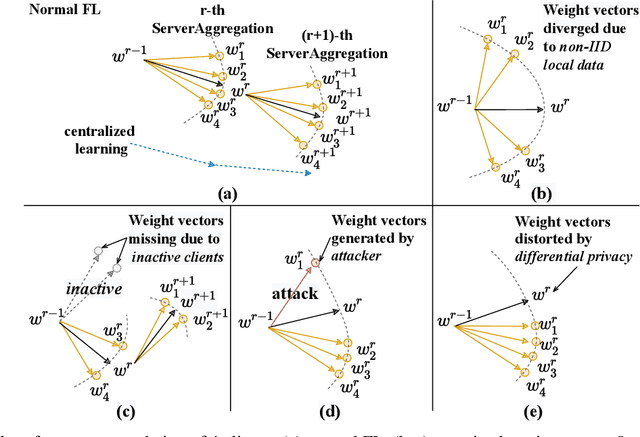

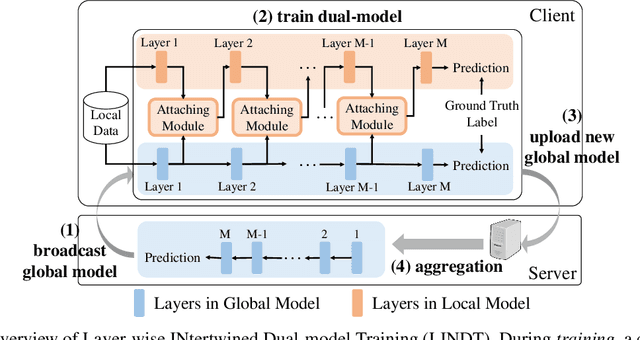

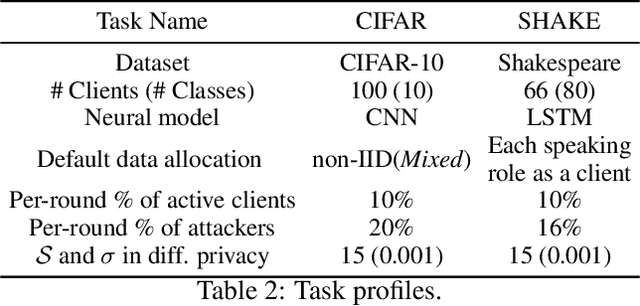

Federated Learning (FL) is a promising distributed learning paradigm, which allows a number of data owners (also called clients) to collaboratively learn a shared model without disclosing each client's data. However, FL may fail to proceed properly, amid a state that we call negative federated learning (NFL). This paper addresses the problem of negative federated learning. We formulate a rigorous definition of NFL and analyze its essential cause. We propose a novel framework called LINDT for tackling NFL in run-time. The framework can potentially work with any neural-network-based FL systems for NFL detection and recovery. Specifically, we introduce a metric for detecting NFL from the server. On occasion of NFL recovery, the framework makes adaptation to the federated model on each client's local data by learning a Layer-wise Intertwined Dual-model. Experiment results show that the proposed approach can significantly improve the performance of FL on local data in various scenarios of NFL.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge