LIMOT: A Tightly-Coupled System for LiDAR-Inertial Odometry and Multi-Object Tracking


Apr 30, 2023

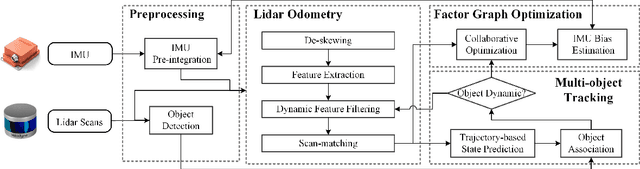

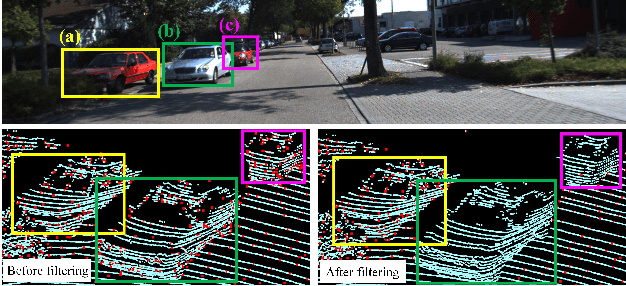

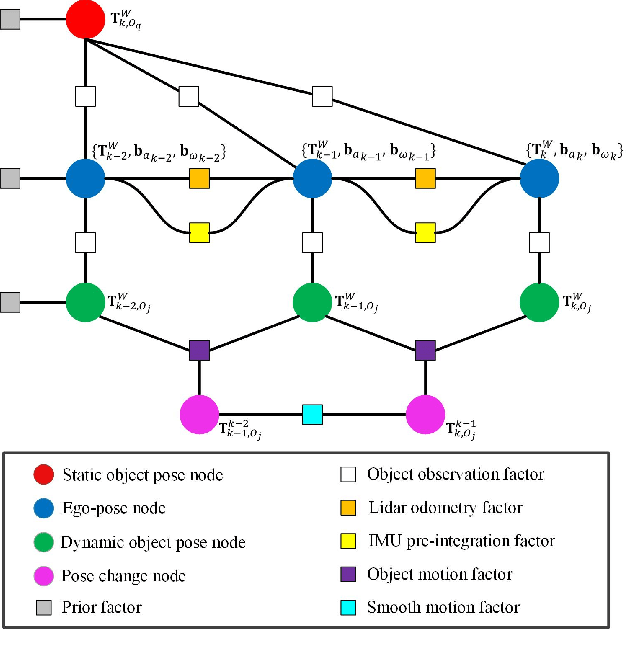

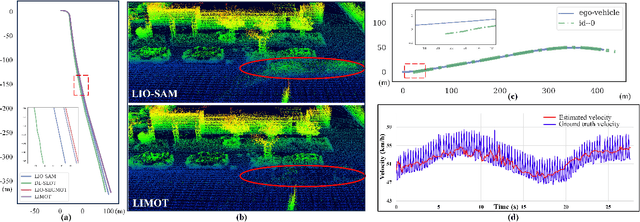

Simultaneous localization and mapping (SLAM) is critical to autonomous driving. Most LiDAR-inertial SLAM algorithms assume a static environment, which makes localization unreliable in dynamic environments. Accurate tracking of moving objects is also important for the control and planning of an autonomous vehicle. This study proposes LIMOT, a tightly-coupled multi-object tracking and LiDAR-inertial SLAM system that accurately estimates the poses of both the ego-vehicle and surrounding objects. First, we represent all movable objects with 3D bounding boxes generated by an object detector and perform LiDAR odometry using the inertial measurement unit (IMU) pre-integration result. We then perform robust object association based on the historical trajectories of tracked objects in a sliding window. We propose a trajectory-based dynamic feature filtering method, which uses the tracking results to filter out features belonging to moving objects. Finally, factor graph-based optimization refines the IMU bias and the poses of both the ego-vehicle and the surrounding objects within the sliding window. Experiments on the KITTI datasets show that our method achieves better pose and tracking accuracy than our previous work, DL-SLOT, and other SLAM and multi-object tracking baselines.
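The trajectory-based dynamic feature filtering step can be illustrated with a minimal sketch. This is not the authors' implementation: the `Box` type, the track dictionary layout, the `min_displacement` threshold, and the rule "an object is moving if its center has shifted by more than the threshold across the sliding window" are all assumptions made for illustration. Features and boxes are assumed to be expressed in the same world frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical 3D bounding box: center, size, and yaw about the z-axis."""
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float


def is_moving(trajectory, min_displacement=0.5):
    """Assumed rule: an object is moving if its center moved more than
    min_displacement (meters) between the oldest and newest window entries."""
    if len(trajectory) < 2:
        return False
    return math.dist(trajectory[0], trajectory[-1]) > min_displacement


def point_in_box(p, box):
    """Check whether point p = (x, y, z) lies inside the oriented box."""
    dx, dy, dz = p[0] - box.cx, p[1] - box.cy, p[2] - box.cz
    c, s = math.cos(box.yaw), math.sin(box.yaw)
    # Rotate the offset by -yaw to express it in the box frame.
    lx = c * dx + s * dy
    ly = -s * dx + c * dy
    return (abs(lx) <= box.length / 2
            and abs(ly) <= box.width / 2
            and abs(dz) <= box.height / 2)


def filter_dynamic_features(features, tracks, min_displacement=0.5):
    """Drop features that fall inside the current box of any moving track.

    Each track is a dict with 'box' (latest Box) and 'trajectory'
    (list of (x, y, z) centers over the sliding window).
    """
    moving_boxes = [t["box"] for t in tracks
                    if is_moving(t["trajectory"], min_displacement)]
    return [p for p in features
            if not any(point_in_box(p, b) for b in moving_boxes)]


# Usage: one moving car and one parked car.
tracks = [
    {"box": Box(10.0, 0.0, 0.0, 4.0, 2.0, 1.5, 0.0),
     "trajectory": [(8.0, 0.0, 0.0), (9.0, 0.0, 0.0), (10.0, 0.0, 0.0)]},
    {"box": Box(0.0, 5.0, 0.0, 4.0, 2.0, 1.5, 0.0),
     "trajectory": [(0.0, 5.0, 0.0), (0.0, 5.0, 0.0)]},
]
features = [(10.0, 0.2, 0.0), (0.0, 5.0, 0.0), (30.0, 30.0, 0.0)]
static_features = filter_dynamic_features(features, tracks)
```

In this sketch, features on the parked car are kept, since points on a stationary object can still serve as static constraints for odometry; only points inside the box of the moving track are discarded.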
