LiDAR point-cloud processing based on projection methods: a comparison


Aug 03, 2020

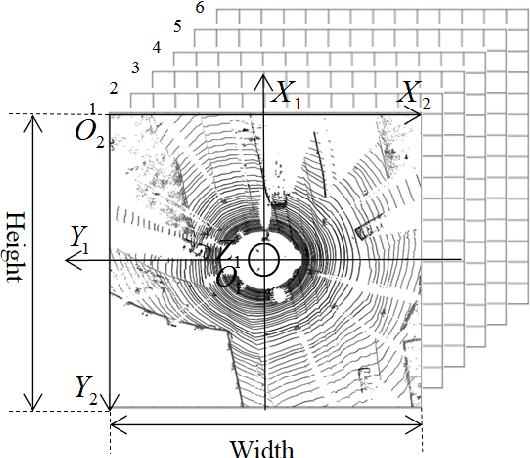

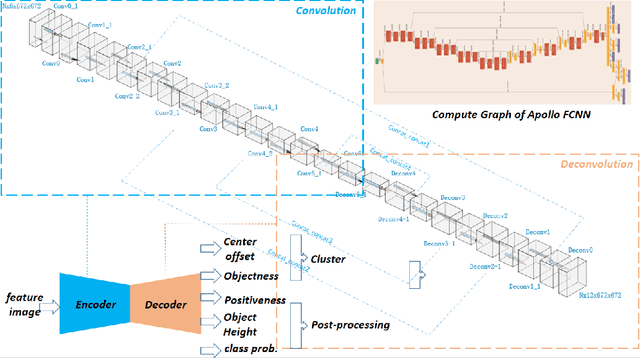

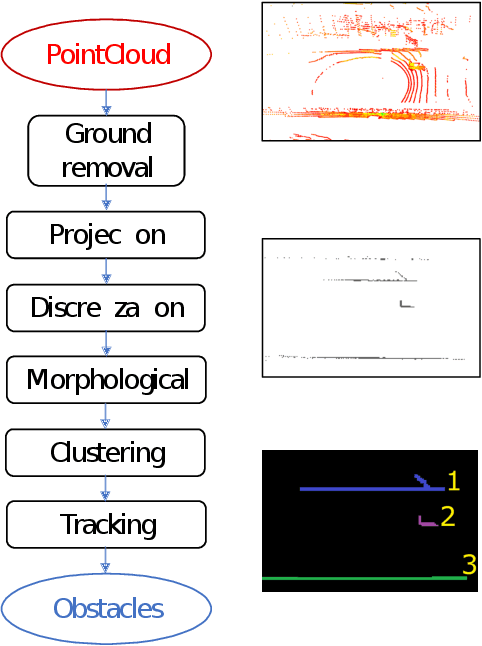

An accurate and rapid-response perception system is fundamental for autonomous vehicles to operate safely. 3D object detection methods process the point clouds produced by LiDAR sensors to provide accurate depth and position information for each detection, together with its dimensions and classification. This information is then used to track vehicles and other obstacles around the autonomous vehicle, and to feed the control units responsible for collision avoidance and motion planning. Current object detection systems fall into two main categories. The first is geometry-based methods, which retrieve obstacles using geometric and morphological operations on the 3D points. The second is deep learning-based methods, which process the 3D points, or a representation derived from the 3D point cloud, with deep learning techniques to retrieve a set of obstacles. This paper compares the two approaches, presenting one implementation of each class on a real autonomous vehicle. The accuracy of the algorithms' estimates was evaluated in experimental tests carried out at the Monza ENI circuit. The positions of the ego vehicle and the obstacle are given by GPS sensors with RTK correction, which provide an accurate ground truth for the comparison. Both algorithms were implemented on ROS and run on a consumer laptop.
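As an illustration of the ground-truth comparison described in the abstract, the following is a minimal sketch of how a detection could be scored against GPS-derived positions. It is not the authors' evaluation code. It assumes that both GPS positions have already been converted to a common planar frame in metres, that the ego heading is known, and that detections are expressed in an ego frame with x forward and y to the left. The function names `relative_position` and `position_error` are hypothetical.

```python
import math


def relative_position(ego_xy, ego_yaw, obstacle_xy):
    """Express the obstacle position in the ego frame (x forward, y left).

    ego_xy, obstacle_xy: (x, y) in a shared planar world frame, in metres
                         (assumed to come from RTK-corrected GPS).
    ego_yaw: ego heading in radians, measured counter-clockwise from world x.
    """
    dx = obstacle_xy[0] - ego_xy[0]
    dy = obstacle_xy[1] - ego_xy[1]
    c, s = math.cos(ego_yaw), math.sin(ego_yaw)
    # Rotate the world-frame offset by -ego_yaw to obtain ego-frame coordinates.
    return (c * dx + s * dy, -s * dx + c * dy)


def position_error(estimate_xy, truth_xy):
    """Euclidean distance between an estimated and a ground-truth position."""
    return math.hypot(estimate_xy[0] - truth_xy[0],
                      estimate_xy[1] - truth_xy[1])


# Example with made-up numbers: ego at the origin heading along world +y,
# obstacle 10 m ahead, detector output slightly off.
truth = relative_position((0.0, 0.0), math.pi / 2, (0.0, 10.0))
error = position_error((10.3, -0.4), truth)
```

Computing the error in the ego frame keeps the comparison in the same coordinates a LiDAR detector would typically report; a full evaluation would also need time synchronisation between GPS and LiDAR samples, which this sketch leaves out.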
