Learning to Predict with Supporting Evidence: Applications to Clinical Risk Prediction

Mar 04, 2021

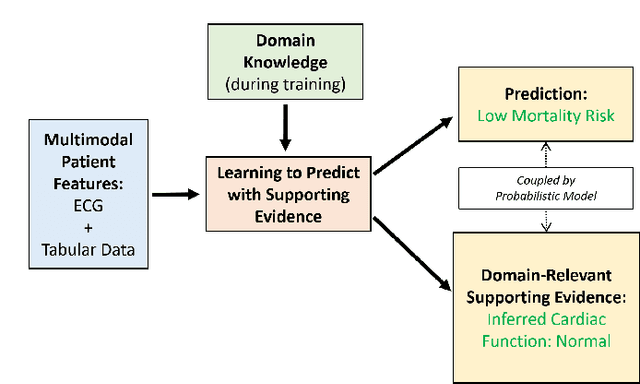

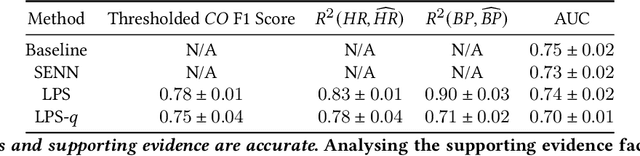

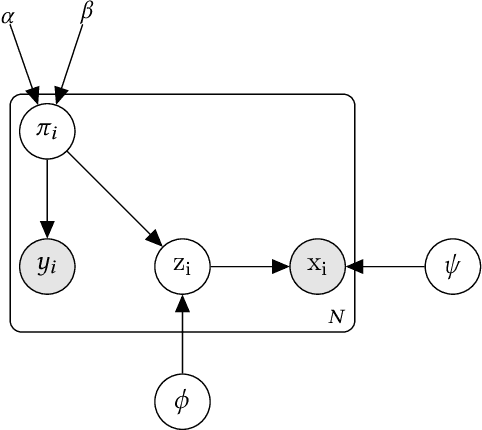

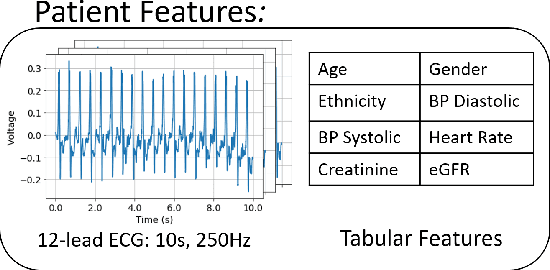

The impact of machine learning models on healthcare will depend on how much healthcare professionals trust the predictions these models make. In this paper, we present a method that gives clinical experts domain-relevant evidence for why a prediction should be trusted. We first design a probabilistic model that relates meaningful latent concepts to prediction targets and observed data. Inferring the latent variables in this model corresponds to both making a prediction and providing supporting evidence for that prediction. We present a two-step process to approximate inference efficiently: (i) estimating model parameters using variational learning, and (ii) approximating maximum a posteriori estimation of the latent variables using a neural network trained with an objective derived from the probabilistic model. We demonstrate the method on the task of predicting mortality risk for patients with cardiovascular disease. Specifically, using electrocardiogram and tabular data as input, we show that our approach provides appropriate domain-relevant supporting evidence for accurate predictions.
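
The two-step process can be written out schematically. The following is only a generic sketch under stated assumptions, not the paper's exact formulation: $x$ denotes the observed data, $z$ the latent variables, $p_\theta$ the probabilistic model, $q_\psi$ a variational posterior, and $f_\phi$ the neural network. All of these symbols are introduced here for illustration, and the abstract does not specify the actual factorization or objectives.

```latex
% Step (i): variational learning of the model parameters \theta
% (standard evidence lower bound; assumed form)
\log p_\theta(x) \;\ge\;
  \mathbb{E}_{q_\psi(z \mid x)}\!\left[ \log p_\theta(x, z) - \log q_\psi(z \mid x) \right]

% Step (ii): MAP estimation of the latent variables with \theta held fixed
z^{\ast}(x) \;=\; \arg\max_{z} \, \log p_\theta(z \mid x)
            \;=\; \arg\max_{z} \, \log p_\theta(x, z)

% Amortized approximation: a network f_\phi trained on data x_1, \dots, x_N
% so that f_\phi(x) \approx z^{\ast}(x) (assumed form of the derived objective)
\phi^{\ast} \;=\; \arg\max_{\phi} \, \sum_{n=1}^{N} \log p_\theta\!\left(x_n, f_\phi(x_n)\right)
```

The second equality in step (ii) holds because $\log p_\theta(z \mid x) = \log p_\theta(x, z) - \log p_\theta(x)$ and the last term does not depend on $z$. Under this reading, a single forward pass of $f_\phi$ at test time would return estimates of the latent variables, which is what would make inference efficient.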
