Learning Motion Priors for Efficient Video Object Detection

Nov 13, 2019

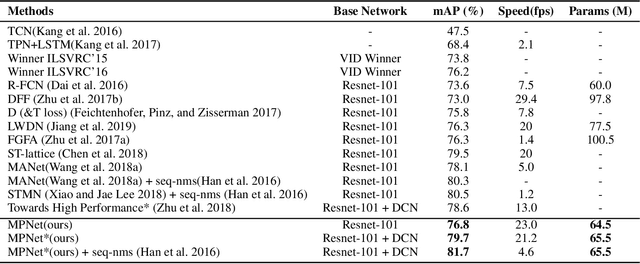

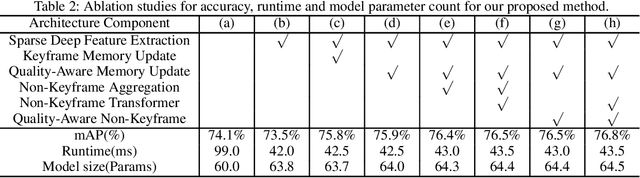

Convolutional neural networks have achieved great progress on the image object detection task. However, transferring existing image object detection methods to the video domain is not trivial, since most of them are designed specifically for still images. Directly applying an image detector frame by frame cannot achieve optimal results because it ignores temporal information, which is vital in the video domain. Recently, image-level flow warping has been proposed to propagate features across frames, aiming at a better trade-off between accuracy and efficiency. However, the gap between image-level optical flow and high-level features can hinder the accuracy of spatial propagation. To address this, we propose to learn motion priors for efficient video object detection. We first initialize a set of motion priors for each location and then use them to propagate features across frames. At the same time, the motion priors are updated adaptively to find better spatial correspondences. Without bells and whistles, the proposed framework achieves state-of-the-art performance on the ImageNet VID dataset at real-time speed.
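The abstract does not give the exact formulation, so the following is only a minimal sketch of the general idea of propagating features with per-location displacements. It assumes the motion priors can be represented as one `(dy, dx)` offset per feature-map location and that propagation is done by bilinear sampling; the function name `propagate_features`, the array layout, and the use of NumPy are illustrative assumptions, not the authors' implementation. The adaptive update of the priors, which the paper learns during training, is not modeled here.

```python
import numpy as np


def propagate_features(key_feat, offsets):
    """Warp key-frame features to the current frame (hypothetical helper).

    key_feat: (C, H, W) feature map computed on the key frame.
    offsets:  (2, H, W) per-location (dy, dx) displacements, standing in
              for the motion priors. Location (y, x) in the output samples
              key_feat at (y + dy, x + dx).
    """
    _, h, w = key_feat.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")

    # Sampling positions, clamped to the feature-map border.
    sy = np.clip(ys + offsets[0], 0, h - 1)
    sx = np.clip(xs + offsets[1], 0, w - 1)

    # Integer corners surrounding each sampling position.
    y0 = np.floor(sy).astype(int)
    x0 = np.floor(sx).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)

    # Bilinear interpolation weights.
    wy = sy - y0
    wx = sx - x0

    top = key_feat[:, y0, x0] * (1 - wx) + key_feat[:, y0, x1] * wx
    bottom = key_feat[:, y1, x0] * (1 - wx) + key_feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy
```

With all-zero offsets the function returns the key-frame features unchanged; non-zero offsets shift where each location reads from. In a learned setting, the offsets would be parameters or network outputs trained end to end, and a differentiable sampling operator from a deep learning framework would replace this NumPy version.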
