Learning Large-scale Network Embedding from Representative Subgraph

Dec 02, 2021

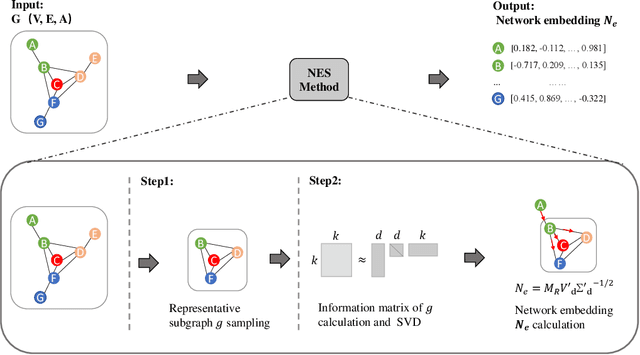

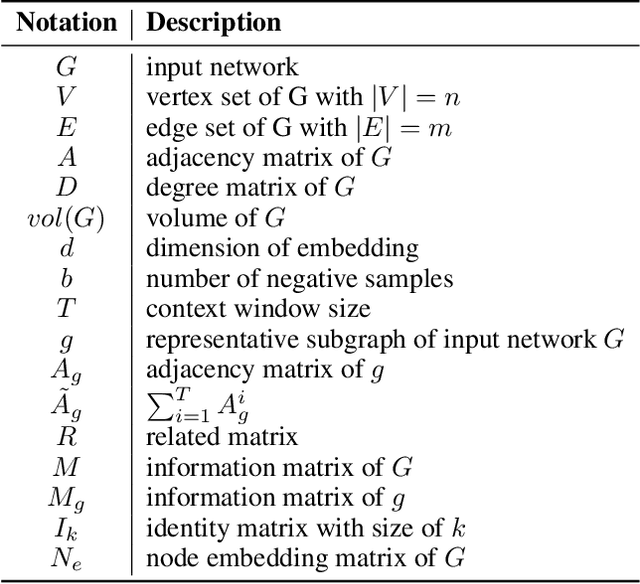

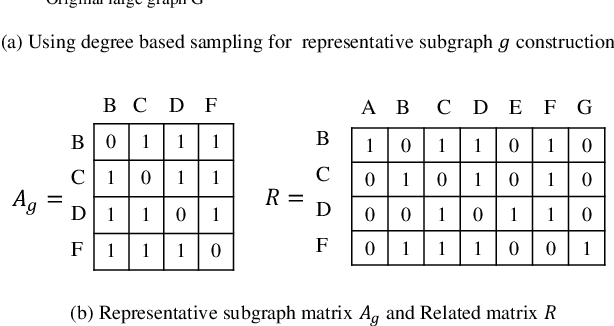

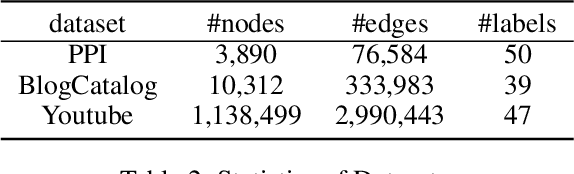

We study the problem of large-scale network embedding, which aims to learn low-dimensional latent representations of nodes for network mining applications. Recent research in network embedding has produced methods such as DeepWalk, LINE, NetMF, and NetSMF. However, many real-world networks are so large that learning an embedding from the entire network is computationally expensive. In this work, we present a novel network embedding method called "NES", which learns the embedding from a small representative subgraph. NES draws on graph sampling theory to efficiently construct a smaller representative subgraph that can be used to make inferences about the full network, and then computes the network embedding from this subgraph, which substantially reduces the cost of embedding learning. Extensive experiments on networks of various scales and types show that NES achieves performance comparable to well-known methods while being significantly more efficient.
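The two-stage idea described in the abstract (sample a representative subgraph, then embed only that subgraph) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the abstract does not specify NES's sampler or embedding routine, so the sketch substitutes a random walk with random jumps for the sampling step and a truncated SVD of the normalized adjacency matrix for the embedding step. The function names `ring_graph`, `random_walk_sample`, and `spectral_embedding`, as well as the parameters `jump_prob` and `dim`, are hypothetical.

```python
import random

import numpy as np


def ring_graph(n):
    """Toy undirected graph: node i is linked to its two ring neighbours."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def random_walk_sample(adj, target_size, jump_prob=0.05, seed=0):
    """Collect nodes with a random walk that occasionally jumps to a random node.

    Stand-in sampler (an assumption, not the NES sampler). The random jumps
    let the walk escape dead ends and disconnected components.
    """
    rng = random.Random(seed)
    nodes = sorted(adj)
    target_size = min(target_size, len(nodes))
    current = rng.choice(nodes)
    visited = {current}
    while len(visited) < target_size:
        if rng.random() < jump_prob or not adj[current]:
            current = rng.choice(nodes)         # uniform random jump
        else:
            current = rng.choice(adj[current])  # ordinary walk step
        visited.add(current)
    return sorted(visited)


def spectral_embedding(adj, nodes, dim):
    """Embed the subgraph induced by `nodes` via SVD of D^{-1/2} A D^{-1/2}.

    Stand-in embedding step (an assumption, not the NES embedding routine).
    """
    index = {v: i for i, v in enumerate(nodes)}
    n = len(nodes)
    A = np.zeros((n, n))
    for v in nodes:
        for u in adj[v]:
            if u in index:                      # keep only edges inside the sample
                A[index[v], index[u]] = 1.0
    deg = A.sum(axis=1)
    inv_sqrt = np.zeros(n)
    mask = deg > 0                              # isolated nodes keep a zero row
    inv_sqrt[mask] = deg[mask] ** -0.5
    M = inv_sqrt[:, None] * A * inv_sqrt[None, :]
    U, S, _ = np.linalg.svd(M)                  # singular values sorted descending
    return U[:, :dim] * np.sqrt(S[:dim])


graph = ring_graph(200)
sub_nodes = random_walk_sample(graph, target_size=40)
emb = spectral_embedding(graph, sub_nodes, dim=8)
print(len(sub_nodes), emb.shape)
```

The cost saving in this sketch comes from the decomposition running on a 40-node matrix instead of the 200-node one. The sketch only embeds the sampled nodes; how NES carries the result back to the full network is not covered by the abstract and is not modeled here.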
