Learning Fair Rule Lists

Sep 09, 2019

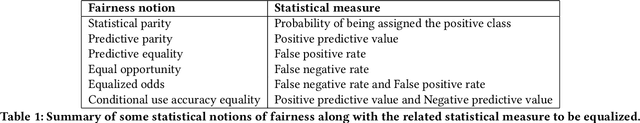

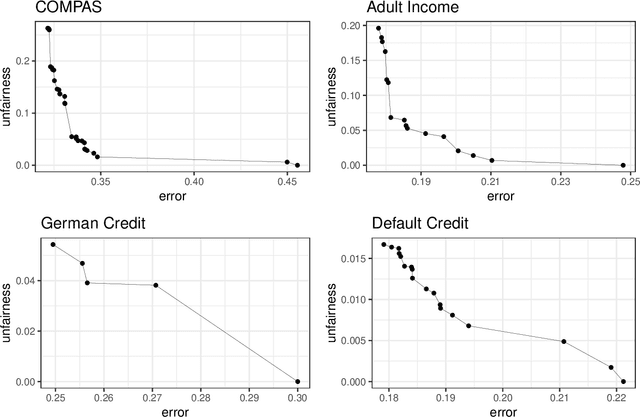

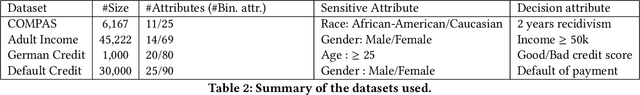

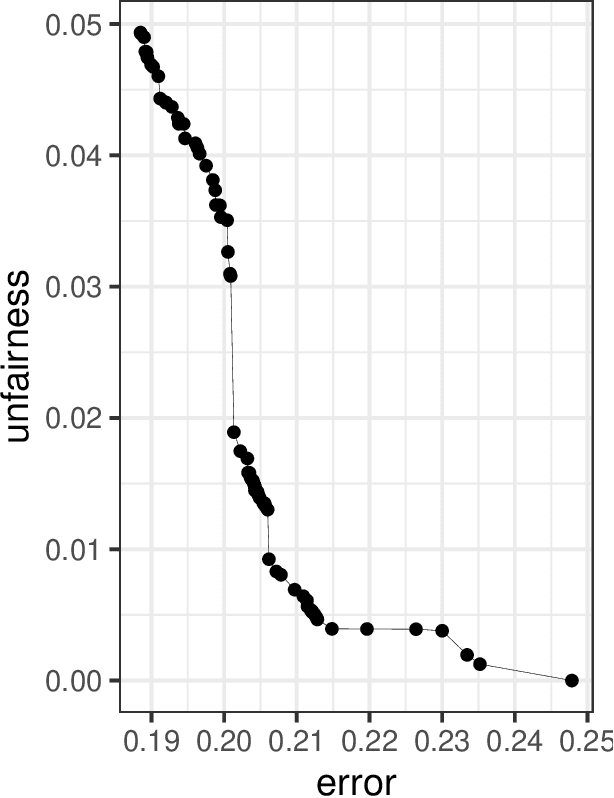

The widespread use of machine learning models, especially in decision-making systems that affect individuals, raises many ethical issues regarding the fairness and interpretability of these models. While research in both areas is booming, very few works have addressed the two issues simultaneously. To address this gap, we propose FairCORELS, a supervised learning algorithm whose objective is to learn models that are both fair and interpretable. FairCORELS is a multi-objective variant of CORELS, a branch-and-bound algorithm designed to compute accurate and interpretable rule lists. By jointly addressing fairness and interpretability, FairCORELS can achieve better fairness/accuracy tradeoffs than existing methods, as demonstrated by an empirical evaluation on real datasets. Our paper also contains additional contributions regarding search strategies for optimizing the multi-objective function that integrates fairness, accuracy, and interpretability.
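To make the idea of a multi-objective function over rule lists concrete, the following is a minimal sketch and not the FairCORELS implementation. It assumes a rule list is an ordered sequence of if-then rules with a default prediction, measures unfairness as a statistical parity gap between two groups, and combines error, unfairness, and list length in a weighted sum. The weights `beta` and `lam`, the choice of fairness metric, the scalarization itself, and all function names are assumptions made for illustration; the toy data is invented.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, int]
Rule = Tuple[Callable[[Row], bool], int]  # (antecedent, predicted label)


def predict(rules: List[Rule], default: int, row: Row) -> int:
    """Return the label of the first rule whose antecedent matches, else the default."""
    for antecedent, label in rules:
        if antecedent(row):
            return label
    return default


def error_rate(rules: List[Rule], default: int, rows: List[Row], labels: List[int]) -> float:
    """Fraction of rows the rule list misclassifies."""
    wrong = sum(predict(rules, default, r) != y for r, y in zip(rows, labels))
    return wrong / len(rows)


def statistical_parity_gap(rules: List[Rule], default: int, rows: List[Row], protected: str) -> float:
    """Absolute difference in positive-prediction rates between groups 0 and 1.

    Assumes binary labels and that both groups are non-empty.
    """
    rates = []
    for group in (0, 1):
        members = [r for r in rows if r[protected] == group]
        positives = sum(predict(rules, default, r) for r in members)
        rates.append(positives / len(members))
    return abs(rates[0] - rates[1])


def objective(rules, default, rows, labels, protected, beta=0.5, lam=0.01) -> float:
    """Hypothetical weighted-sum objective (lower is better).

    beta trades accuracy against fairness; lam penalizes longer,
    less interpretable rule lists. Both weights are illustrative.
    """
    err = error_rate(rules, default, rows, labels)
    unfairness = statistical_parity_gap(rules, default, rows, protected)
    return (1 - beta) * err + beta * unfairness + lam * len(rules)


# Invented toy data: one feature ("priors") and one protected attribute ("group").
rows = [
    {"priors": 1, "group": 0},
    {"priors": 0, "group": 0},
    {"priors": 1, "group": 1},
    {"priors": 0, "group": 1},
]
labels = [1, 0, 1, 1]

# A one-rule list: "if priors == 1 then predict 1, else predict 0".
rules = [(lambda r: r["priors"] == 1, 1)]
default = 0

score = objective(rules, default, rows, labels, protected="group")
```

A branch-and-bound search in the style of CORELS would explore candidate rule lists and prune those whose lower bound on such an objective cannot beat the best list found so far; the sketch above only evaluates a single candidate.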
