Learn to Effectively Explore in Context-Based Meta-RL

Jun 15, 2020

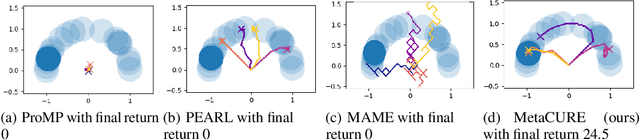

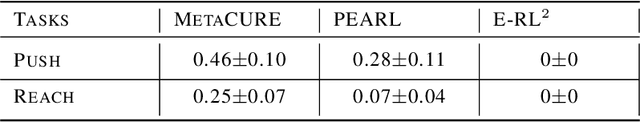

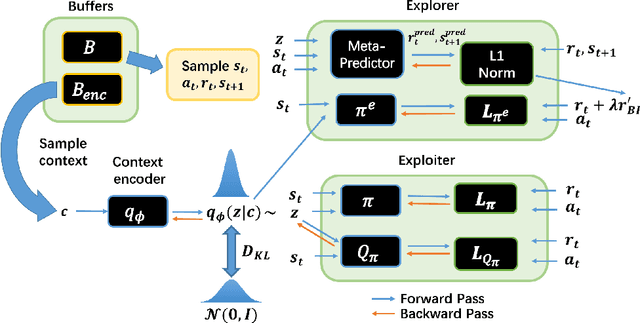

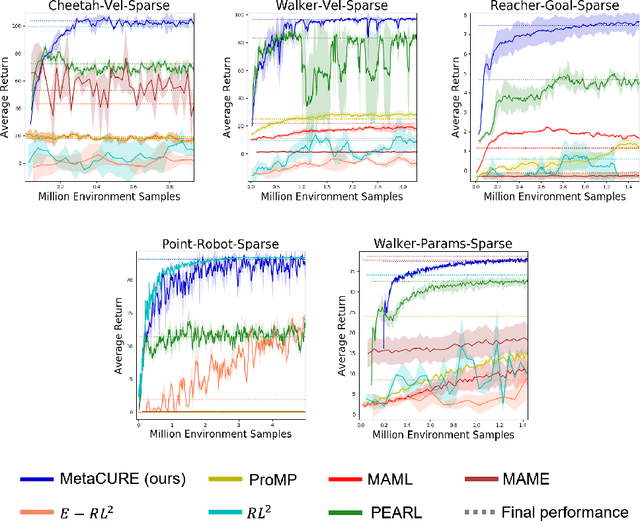

Meta reinforcement learning (meta-RL) provides a principled approach for fast adaptation to novel tasks by extracting prior knowledge from previous tasks. In this setting, the agent must explore efficiently during adaptation to collect useful experience. However, existing methods adapt poorly because their exploration mechanisms are inefficient, especially in sparse-reward problems. In this paper, we present a novel off-policy context-based meta-RL approach that efficiently learns a separate exploration policy to support fast adaptation, as well as a context-aware exploitation policy to maximize extrinsic return. The explorer is driven by an information-theoretic intrinsic reward that encourages the agent to collect experiences that provide rich information about the task. Experimental results on both the MuJoCo and Meta-World benchmarks show that our method significantly outperforms baselines by exploring more efficiently.
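To make the idea of an information-theoretic intrinsic reward concrete, the following is a minimal sketch and not the paper's actual formulation. It assumes a finite set of candidate tasks and a known likelihood of each observed transition under each task; the function names (`posterior_update`, `entropy`, `information_gain`) are hypothetical. The reward for a transition is the reduction in entropy of the agent's belief over tasks, so transitions that help identify the task score higher.

```python
import math


def posterior_update(prior, likelihoods):
    """Bayes rule over a finite set of candidate tasks (illustrative assumption)."""
    unnormalized = [p * l for p, l in zip(prior, likelihoods)]
    total = sum(unnormalized)
    if total == 0.0:
        # The transition is impossible under every task; keep the belief unchanged.
        return list(prior)
    return [u / total for u in unnormalized]


def entropy(dist):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in dist if p > 0.0)


def information_gain(prior, likelihoods):
    """Hypothetical intrinsic reward: drop in task-belief entropy after one transition."""
    posterior = posterior_update(prior, likelihoods)
    return entropy(prior) - entropy(posterior)


if __name__ == "__main__":
    belief = [0.5, 0.5]
    # A transition that only task 0 could have produced is maximally informative.
    print(information_gain(belief, [1.0, 0.0]))
    # A transition equally likely under both tasks carries no information.
    print(information_gain(belief, [0.3, 0.3]))
```

Under these assumptions, an explorer rewarded this way would prefer states whose outcomes differ across tasks, which is the kind of behavior a sparse-reward setting needs. Note that the single-step entropy drop can be negative for a surprising observation; only its expectation is guaranteed to be non-negative.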