Is Q-Learning Minimax Optimal? A Tight Sample Complexity Analysis

Paper and Code

Mar 16, 2021

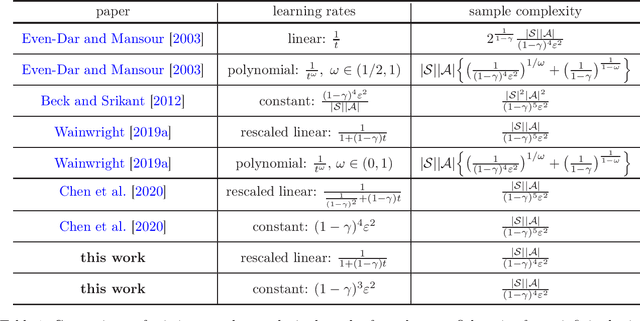

Q-learning, which seeks to learn the optimal Q-function of a Markov decision process (MDP) in a model-free fashion, lies at the heart of reinforcement learning. When it comes to the synchronous setting (such that independent samples for all state-action pairs are drawn from a generative model in each iteration), substantial progress has been made recently towards understanding the sample efficiency of Q-learning. Take a $\gamma$-discounted infinite-horizon MDP with state space $\mathcal{S}$ and action space $\mathcal{A}$: to yield an entrywise $\varepsilon$-accurate estimate of the optimal Q-function, state-of-the-art theory for Q-learning proves that a sample size on the order of $\frac{|\mathcal{S}||\mathcal{A}|}{(1-\gamma)^5\varepsilon^{2}}$ is sufficient, which, however, fails to match with the existing minimax lower bound. This gives rise to natural questions: what is the sharp sample complexity of Q-learning? Is Q-learning provably sub-optimal? In this work, we settle these questions by (1) demonstrating that the sample complexity of Q-learning is at most on the order of $\frac{|\mathcal{S}||\mathcal{A}|}{(1-\gamma)^4\varepsilon^2}$ (up to some log factor) for any $0<\varepsilon <1$, and (2) developing a matching lower bound to confirm the sharpness of our result. Our findings unveil both the effectiveness and limitation of Q-learning: its sample complexity matches that of speedy Q-learning without requiring extra computation and storage, albeit still being considerably higher than the minimax lower bound.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge