Invenio: Discovering Hidden Relationships Between Tasks/Domains Using Structured Meta Learning


Nov 24, 2019

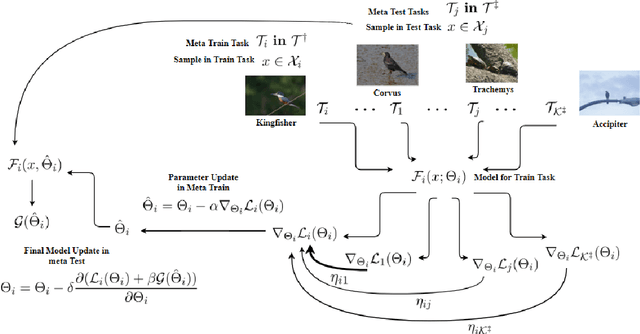

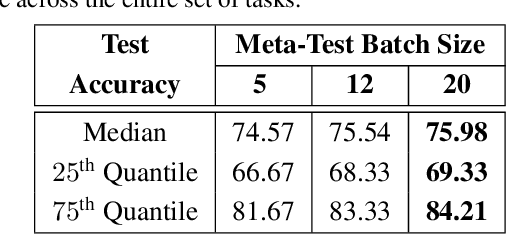

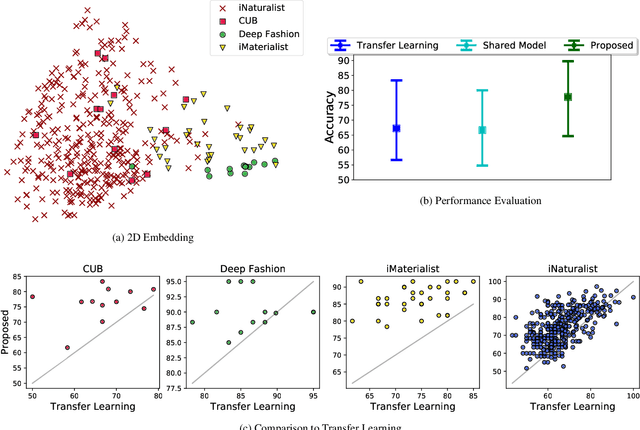

Exploiting known semantic relationships between fine-grained tasks is critical to the success of recent model-agnostic approaches. These approaches often rely on meta-optimization to make a model robust to systematic task or domain shifts. In practice, however, the performance of these methods can suffer when there are no coherent semantic relationships between the tasks (or domains). We present Invenio, a structured meta-learning algorithm that infers semantic similarities between a given set of tasks and provides insight into the complexity of transferring knowledge between different tasks. In contrast to existing techniques such as Task2Vec and Taskonomy, which measure similarities between pre-trained models, our approach employs a novel self-supervised learning strategy to discover these relationships within the training loop while simultaneously using them to update task-specific models in the meta-update step. Using challenging task and domain databases under few-shot learning settings, we show that Invenio can discover intricate dependencies between tasks or domains and can provide significant gains in generalization performance over existing approaches. The semantic structure between tasks/domains learned by Invenio is interpretable and can be used to construct meaningful priors for tasks or domains.
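The abstract does not spell out how Invenio computes task similarities or how it folds them into the meta-update. The sketch below is therefore a hypothetical illustration of the general idea, not the authors' algorithm. It assumes a Reptile-style setup (a swapped-in technique): each task adapts a shared initialization with a few gradient steps, pairwise task similarity is taken to be the cosine similarity between those adaptation directions, and each task-specific model is then updated along a softmax(similarity)-weighted mix of all tasks' directions. The names `adapt`, `structured_meta_step`, `meta_lr`, and `temperature` are illustrative assumptions, and the linear-regression tasks are toy data.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Example = Tuple[Sequence[float], float]  # (features, target)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return dot / (nu * nv)


def adapt(theta: Vector, data: List[Example], lr: float = 0.1, steps: int = 5) -> Vector:
    """Inner loop: a few steps of gradient descent on the mean squared error
    of a linear model y ~ w.x, starting from the shared initialization theta."""
    w = list(theta)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for k, xk in enumerate(x):
                grad[k] += 2.0 * err * xk / n
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def softmax(row: Sequence[float], temperature: float) -> Vector:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp((r - m) / temperature) for r in row]
    total = sum(exps)
    return [e / total for e in exps]


def structured_meta_step(theta, task_data, meta_lr=0.5, temperature=0.1):
    """Hypothetical similarity-weighted meta-update (NOT Invenio's published method).

    1. Adapt theta to every task and record the adaptation direction (Reptile-style).
    2. Infer a task-similarity matrix from cosine similarity of those directions.
    3. For each task, move theta along a softmax(similarity)-weighted mix of all
       tasks' directions, so similar tasks share updates and dissimilar tasks
       receive little weight.
    """
    deltas = [[a - t for a, t in zip(adapt(theta, d), theta)] for d in task_data]
    sim = [[cosine(di, dj) for dj in deltas] for di in deltas]
    task_params = []
    for i in range(len(deltas)):
        weights = softmax(sim[i], temperature)
        step = [sum(wj * dj[k] for wj, dj in zip(weights, deltas)) for k in range(len(theta))]
        task_params.append([t + meta_lr * s for t, s in zip(theta, step)])
    return sim, task_params


if __name__ == "__main__":
    xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

    def make_task(w):
        return [(x, w[0] * x[0] + w[1] * x[1]) for x in xs]

    # Two related tasks and one task whose target is the negation of the first.
    tasks = [make_task([2.0, 0.0]), make_task([2.0, 0.5]), make_task([-2.0, 0.0])]
    sim, params = structured_meta_step([0.0, 0.0], tasks)
    for row in sim:
        print(["%.3f" % s for s in row])
```

In this toy run the two related tasks come out highly similar, while the negated task has similarity close to -1. Its update therefore draws almost entirely on its own direction. The low `temperature` is a design choice of this sketch that makes the weighting sharp; a higher value would blend tasks more evenly.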