Intrinsically Motivated Reinforcement Learning based Recommendation with Counterfactual Data Augmentation

Sep 17, 2022

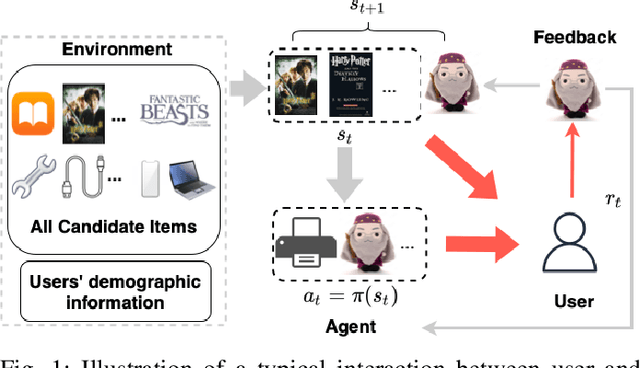

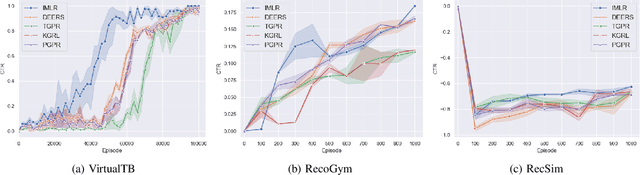

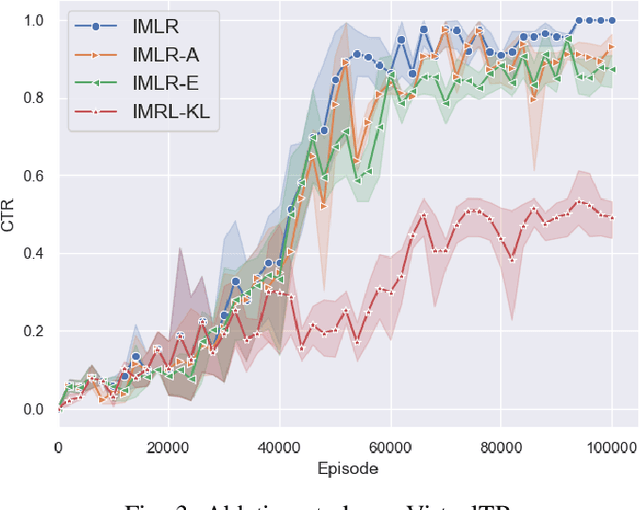

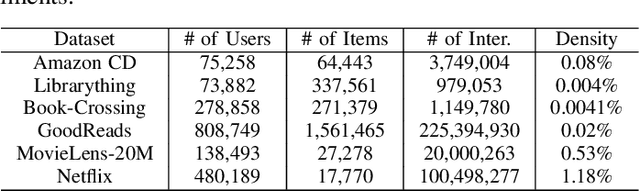

Deep reinforcement learning (DRL) has proven effective at capturing users' dynamic interests in recent literature. However, training a DRL agent is challenging: because the environment in recommender systems (RS) is sparse, a DRL agent must divide its time between exploring informative user-item interaction trajectories and using existing trajectories for policy learning. This is the exploration-exploitation trade-off, which significantly affects recommendation performance when the environment is sparse. Balancing exploration and exploitation is even more challenging in DRL-based RS, where the agent needs to explore informative trajectories deeply and exploit them efficiently. As a step toward addressing this issue, we design a novel intrinsically motivated reinforcement learning method to increase the capability of exploring informative interaction trajectories in the sparse environment, which are further enriched via a counterfactual augmentation strategy for more efficient exploitation. Extensive experiments on six offline datasets and three online simulation platforms demonstrate the superiority of our model over a set of existing state-of-the-art methods.
