Insensitive Stochastic Gradient Twin Support Vector Machine for Large Scale Problems


Nov 23, 2017

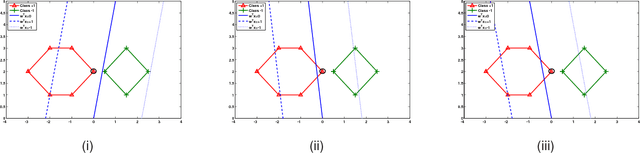

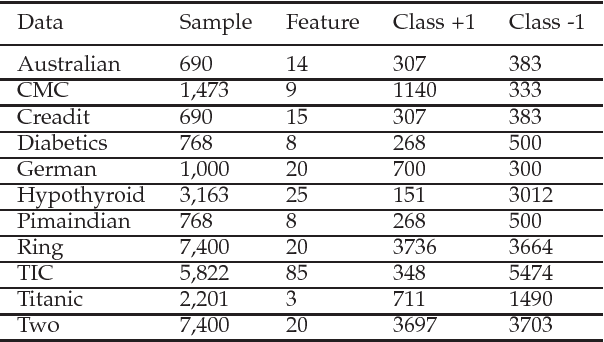

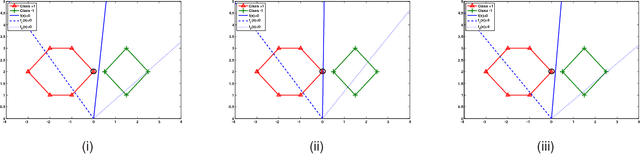

The stochastic gradient descent algorithm has been successfully applied to support vector machines (an approach called PEGASOS) for many classification problems. In this paper, the stochastic gradient descent algorithm is applied to twin support vector machines for classification. Compared with PEGASOS, the proposed stochastic gradient twin support vector machine (SGTSVM) is insensitive to the stochastic sampling used by stochastic gradient descent. On the theoretical side, we prove the convergence of SGTSVM, whereas PEGASOS converges only almost surely. For uniform sampling, we also give the approximation between SGTSVM and twin support vector machines, whereas PEGASOS only has a chance of obtaining an approximation of support vector machines. In addition, the nonlinear SGTSVM is derived directly from its linear case. Experimental results on both artificial datasets and large-scale problems show that SGTSVM performs stably and learns quickly.
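
For orientation, the PEGASOS baseline that the abstract compares against can be sketched in a few lines. This is a minimal illustration of the standard PEGASOS update for a linear SVM without a bias term, assuming a step size of 1/(λt) and uniform sampling of one point per iteration. It is not the SGTSVM method, whose update rules are not given in the abstract. The names `pegasos_train` and `predict`, the default parameters, and the toy data are all hypothetical.

```python
import random


def pegasos_train(samples, labels, lam=0.01, n_iters=2000, seed=0):
    """Sketch of PEGASOS: stochastic subgradient descent on the
    regularized hinge loss of a linear SVM (no bias term).
    All names and defaults here are illustrative assumptions."""
    rng = random.Random(seed)
    w = [0.0] * len(samples[0])
    for t in range(1, n_iters + 1):
        # Draw one training point uniformly at random.
        i = rng.randrange(len(samples))
        x, y = samples[i], labels[i]
        eta = 1.0 / (lam * t)  # decaying step size 1 / (lambda * t)
        # Margin is evaluated with the weights from before this step.
        margin = y * sum(wj * xj for wj, xj in zip(w, x))
        # Shrink step coming from the L2 regularizer.
        w = [(1.0 - eta * lam) * wj for wj in w]
        # Hinge-loss subgradient step, only if the point violates the margin.
        if margin < 1.0:
            w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def predict(w, x):
    """Sign of the linear decision function; ties go to the +1 class."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


# Toy, linearly separable data (made up for illustration only).
X = [[2.0, 2.0], [3.0, 1.0], [1.0, 3.0], [2.5, 2.5],
     [-2.0, -2.0], [-3.0, -1.0], [-1.0, -3.0], [-2.5, -2.5]]
y = [1, 1, 1, 1, -1, -1, -1, -1]

w = pegasos_train(X, y)
train_accuracy = sum(predict(w, xi) == yi for xi, yi in zip(X, y)) / len(X)
```

Each iteration depends on which single point happens to be drawn, so the resulting weights vary with the sampling sequence (here fixed by `seed`). That sampling dependence is the sensitivity the abstract contrasts SGTSVM against.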
