Image Quality Assessment for Omnidirectional Cross-reference Stitching

Apr 23, 2019

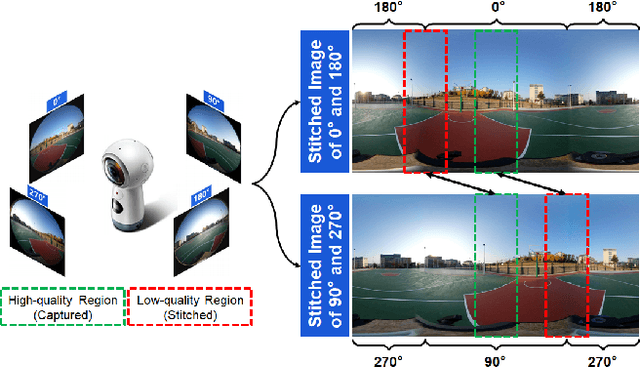

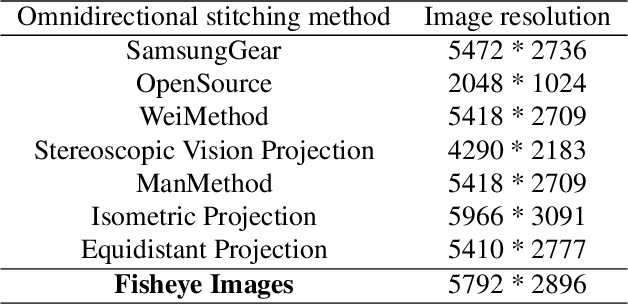

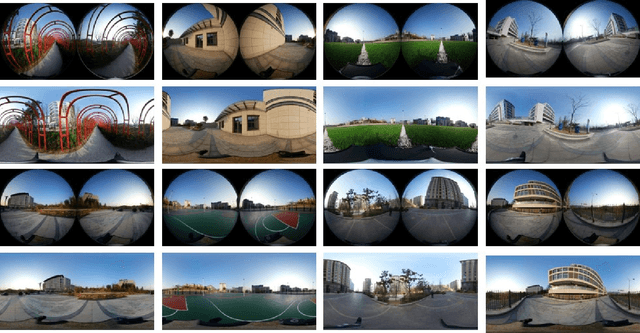

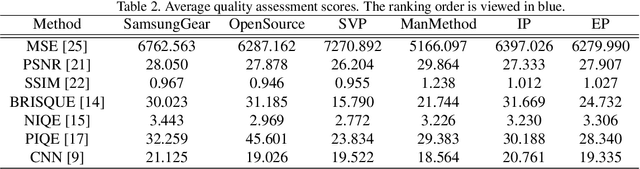

With the development of virtual reality (VR), omnidirectional images play an important role in producing immersive multimedia content. However, although many approaches to omnidirectional image stitching exist, the quantitative assessment of stitched image quality remains insufficiently explored. To address this problem, we establish a novel omnidirectional image dataset containing stitched images as well as dual-fisheye images captured from standard quarters of 0$^\circ$, 90$^\circ$, 180$^\circ$ and 270$^\circ$. In this manner, when evaluating the quality of an image stitched from one pair of fisheye images (e.g., 0$^\circ$ and 180$^\circ$), the other pair (e.g., 90$^\circ$ and 270$^\circ$) can be used as a cross-reference that provides ground-truth observations of the stitching regions. Based on this dataset, we further benchmark six widely used stitching models with seven image quality assessment (IQA) metrics. To the best of our knowledge, this is the first dataset that focuses on assessing the stitching quality of omnidirectional images.
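The cross-reference idea can be illustrated with a minimal sketch. It is not the paper's evaluation code: the abstract does not name the seven metrics, so PSNR is used here purely as a stand-in, and the function names `psnr` and `seam_region_score` are hypothetical. The sketch also assumes the stitched image and the cross-reference view have already been warped into the same projection and pixel grid, so that a column range covering the stitching region can be compared directly.

```python
import numpy as np


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    if reference.shape != test.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((reference - test) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


def seam_region_score(stitched, cross_reference, column_slice):
    """Score only the stitching region of a stitched image.

    Assumption: both arrays are already aligned to the same grid, and
    `column_slice` selects the columns where the seam falls. The
    cross-reference view serves as the ground-truth observation there.
    """
    return psnr(cross_reference[:, column_slice], stitched[:, column_slice])


# Toy usage with synthetic data (not from the dataset).
reference_view = np.full((4, 8), 100.0)
stitched_view = reference_view.copy()
stitched_view[:, 3:5] += 1.0  # simulate a small error around the seam
score = seam_region_score(stitched_view, reference_view, slice(3, 5))
```

Restricting the comparison to the seam columns reflects the abstract's point that the second fisheye pair observes the stitching regions directly; a full-image score would dilute seam errors with regions that stitching leaves untouched.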
