ICDAR 2019 Competition on Image Retrieval for Historical Handwritten Documents

Paper and Code

Dec 08, 2019

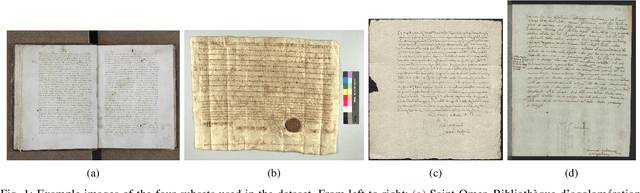

This competition investigates the performance of large-scale retrieval of historical document images based on writing style. The data set is built from large image collections provided by cultural heritage institutions and digital libraries and comprises a total of 20 000 document images representing about 10 000 writers, divided into three types: writers of (i) manuscript books, (ii) letters, and (iii) charters and legal documents. We focus on the task of automatic image retrieval to simulate common scenarios of humanities research, such as writer retrieval. Most teams submitted traditional methods that do not use deep learning techniques. The competition results show that a combination of methods outperforms single methods. Furthermore, letters are much more difficult to retrieve than manuscripts.
