HVS-Inspired Signal Degradation Network for Just Noticeable Difference Estimation

Aug 16, 2022

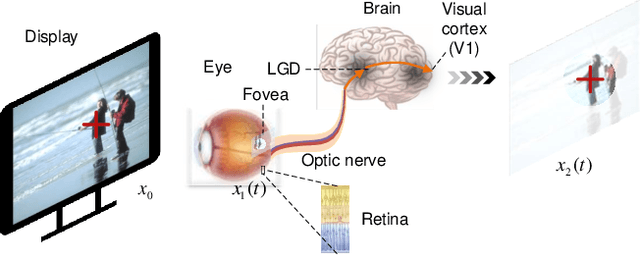

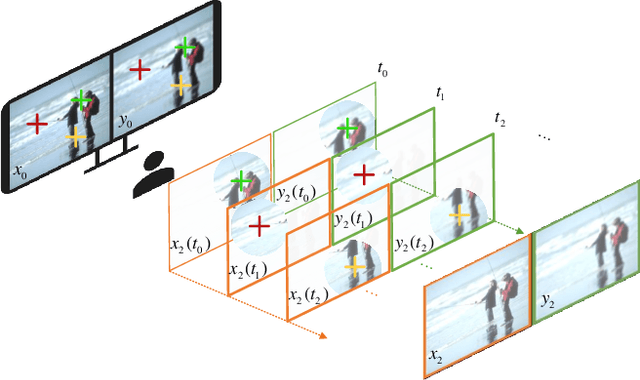

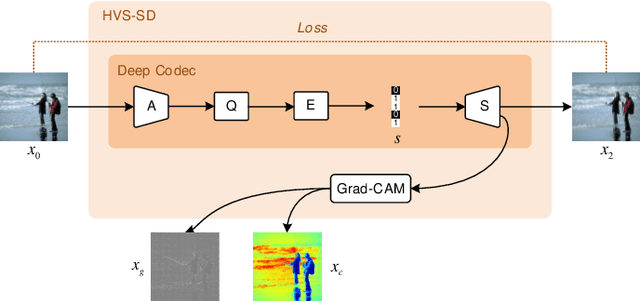

Deep neural networks have significantly improved just noticeable difference (JND) modelling, especially through recently developed unsupervised JND generation models. However, these models share a major drawback: the generated JND is assessed in the real-world signal domain rather than in the perceptual domain of the human brain. Assessments in the two domains differ markedly, because the human visual system (HVS) encodes the real-world visual signal before delivering it to the brain. We therefore propose an HVS-inspired signal degradation network for JND estimation. We first analyze the HVS perceptual process during subjective JND viewing to obtain relevant insights, and then design an HVS-inspired signal degradation (HVS-SD) network to represent the signal degradation in the HVS. The well-learned HVS-SD network serves two purposes: it enables us to assess the JND in the perceptual domain, and it provides more accurate prior information to better guide JND generation. Additionally, because a reasonable JND should not shift visual attention, we propose a visual attention loss to control JND generation. Experimental results demonstrate that the proposed method achieves state-of-the-art (SOTA) performance in accurately estimating the redundancy of the HVS. Source code will be available at https://github.com/jianjin008/HVS-SD-JND.
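
The difference between assessing a JND in the signal domain and in the perceptual domain can be illustrated with a toy sketch. Everything below is an assumption made for illustration only: the 3x3 mean filter stands in for the learned HVS-SD network, the deviation-from-mean map stands in for a visual attention model, and the function names (`box_blur`, `attention_map`, `jnd_losses`) are hypothetical and do not come from the authors' code.

```python
# Toy sketch only: hand-written stand-ins, not the authors' HVS-SD network.

def box_blur(img):
    """Stand-in for signal degradation: 3x3 mean filter with edge clamping."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized 2D maps."""
    h, w = len(a), len(a[0])
    return sum(abs(a[y][x] - b[y][x]) for y in range(h) for x in range(w)) / (h * w)


def attention_map(img):
    """Toy saliency: absolute deviation from the mean, normalized to sum to 1."""
    h, w = len(img), len(img[0])
    mean = sum(sum(row) for row in img) / (h * w)
    dev = [[abs(v - mean) for v in row] for row in img]
    total = sum(sum(row) for row in dev)
    if total == 0.0:
        return [[1.0 / (h * w)] * w for _ in range(h)]  # uniform fallback
    return [[v / total for v in row] for row in dev]


def jnd_losses(image, jnd):
    """Compare an image with its JND-perturbed version in both domains."""
    h, w = len(image), len(image[0])
    distorted = [[image[y][x] + jnd[y][x] for x in range(w)] for y in range(h)]
    return {
        # Difference measured directly on pixel values (signal domain).
        "signal": mean_abs_diff(image, distorted),
        # Difference measured after the stand-in degradation (perceptual domain).
        "perceptual": mean_abs_diff(box_blur(image), box_blur(distorted)),
        # Penalty for changing where the toy attention map concentrates.
        "attention": mean_abs_diff(attention_map(image), attention_map(distorted)),
    }


if __name__ == "__main__":
    image = [[100.0 + 10 * ((x + y) % 3) for x in range(4)] for y in range(4)]
    checker = [[1.0 if (x + y) % 2 == 0 else -1.0 for x in range(4)] for y in range(4)]
    print(jnd_losses(image, checker))
```

In this toy setting, a high-frequency checkerboard perturbation yields a smaller difference after degradation than in the raw signal, which is the kind of gap between the two domains that the abstract describes. The actual HVS-SD network is learned, so its behaviour may differ substantially from this fixed filter.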