Graph Enhanced BERT for Query Understanding

Paper and Code

Apr 03, 2022

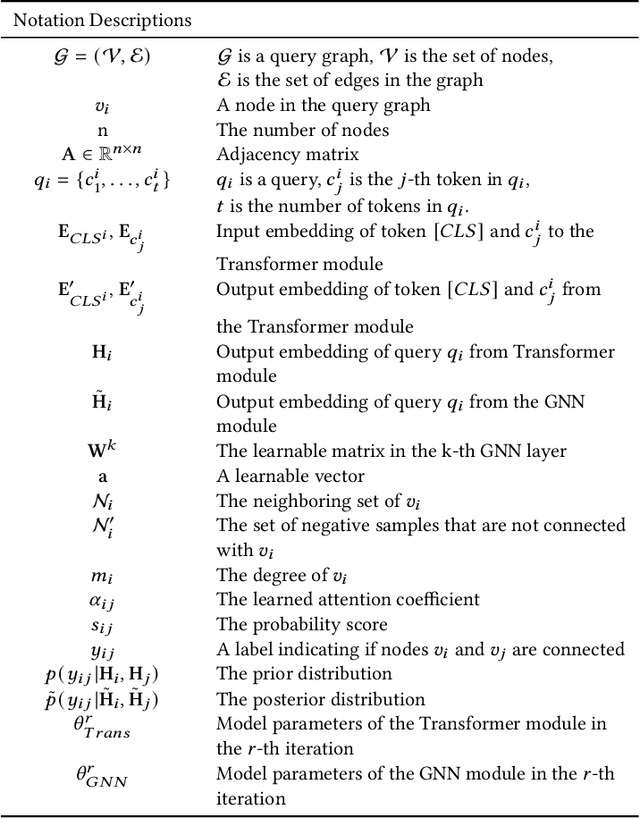

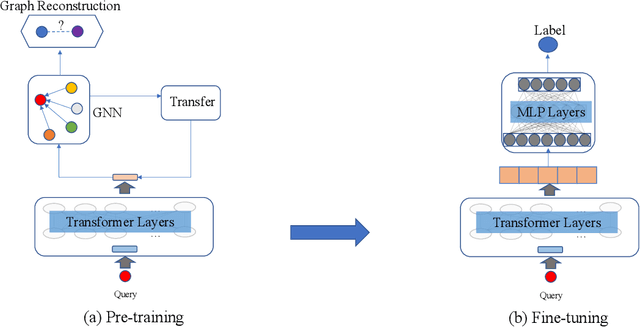

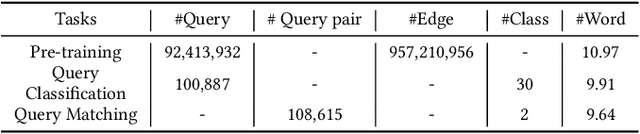

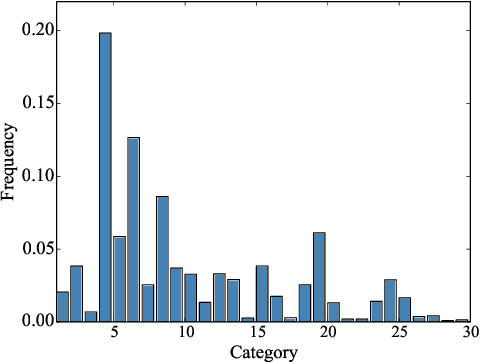

Query understanding plays a key role in inferring users' search intents and helping users locate the information they want most. However, it is inherently challenging: it must capture semantic information from short, ambiguous queries, and it often requires massive amounts of task-specific labeled data. In recent years, pre-trained language models (PLMs) have advanced various natural language processing tasks because they can extract general semantic information from large-scale corpora. This creates unprecedented opportunities to adopt PLMs for query understanding. However, there is a gap between the goal of query understanding and existing pre-training strategies: the goal of query understanding is to boost search performance, while existing strategies rarely consider this goal. Thus, directly applying them to query understanding is sub-optimal. On the other hand, search logs record user clicks between queries and URLs, which provide rich information about users' search behavior beyond the content of the queries themselves. Therefore, in this paper, we aim to fill this gap by exploring search logs. In particular, to incorporate search logs into pre-training, we first construct a query graph in which nodes are queries and two queries are connected if they lead to clicks on the same URLs. We then propose a novel graph-enhanced pre-training framework, GE-BERT, which leverages both query content and the query graph. In other words, GE-BERT can capture both the semantic information and the users' search behavioral information of queries. Extensive experiments on various query understanding tasks demonstrate the effectiveness of the proposed framework.
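
The query graph described in the abstract can be illustrated with a minimal sketch. It assumes the search log is available as a list of `(query, url)` click pairs. The function name `build_query_graph`, the toy log, and the example URLs are hypothetical and are not taken from the paper. The sketch covers only the graph construction rule (two queries are linked when they share a clicked URL), not the GE-BERT pre-training itself.

```python
from collections import defaultdict
from itertools import combinations


def build_query_graph(click_log):
    """Build an undirected query graph from (query, url) click pairs.

    Nodes are queries; two queries are connected if at least one
    URL was clicked for both of them. Returns a dict mapping each
    query to the set of its neighboring queries.
    """
    url_to_queries = defaultdict(set)
    graph = {}
    for query, url in click_log:
        url_to_queries[url].add(query)
        graph.setdefault(query, set())  # keep isolated queries as nodes

    # Connect every pair of queries that share a clicked URL.
    for queries in url_to_queries.values():
        for q1, q2 in combinations(sorted(queries), 2):
            graph[q1].add(q2)
            graph[q2].add(q1)
    return graph


# Hypothetical toy click log, for illustration only.
toy_log = [
    ("jaguar price", "cars.example/jaguar"),
    ("jaguar car cost", "cars.example/jaguar"),
    ("jaguar habitat", "zoo.example/jaguar"),
    ("where do jaguars live", "zoo.example/jaguar"),
    ("python tutorial", "docs.example/python"),
]

graph = build_query_graph(toy_log)
for query in sorted(graph):
    print(query, "->", sorted(graph[query]))
```

In this toy log, "jaguar price" and "jaguar habitat" share a word but are not connected, because their clicks land on different URLs. That is the kind of behavioral signal, beyond query content, that the abstract attributes to search logs.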
