Globally Injective ReLU Networks


Jun 15, 2020

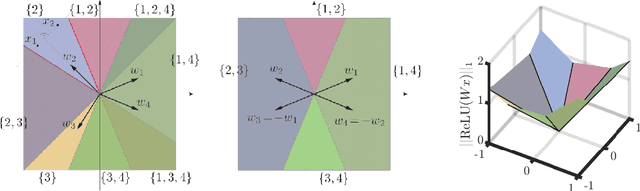

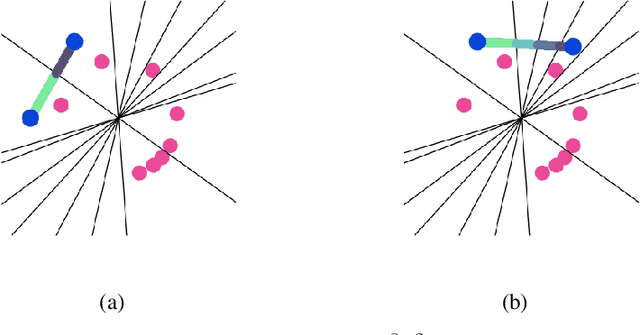

We study injective ReLU neural networks. Injectivity plays an important role in generative models, where it facilitates inference; in inverse problems with generative priors, it is a precursor to well-posedness. We establish sharp conditions for injectivity of ReLU layers and networks, both fully connected and convolutional. We make no architectural assumptions beyond the ReLU activations, so our results apply to a very general class of neural networks. We show through a layer-wise analysis that an expansivity factor of two is necessary for injectivity; we also show sufficiency by constructing weight matrices which guarantee injectivity. Further, we show that global injectivity with i.i.d. Gaussian matrices, a commonly used tractable model, requires considerably larger expansivity, which might seem counterintuitive. We then derive the inverse Lipschitz constants and study the approximation-theoretic properties of injective neural networks. Using arguments from differential topology, we prove that, under mild technical conditions, any Lipschitz map can be approximated by an injective neural network. This justifies the use of injective neural networks in problems which a priori do not require injectivity. Our results establish a theoretical basis for the study of nonlinear inverse and inference problems using neural networks.
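To make the expansivity-two statement concrete, here is a minimal NumPy sketch of one simple sufficient construction: stacking an invertible matrix `B` on top of `-B`. It is an illustration under stated assumptions and not necessarily the construction used in the paper. The function names (`make_injective_weights`, `invert_layer`) and the choice of an orthogonal `B` are hypothetical. The layer is injective because `relu(Bx) - relu(-Bx) = Bx`, so `x` can be recovered exactly from the layer output.

```python
import numpy as np


def relu(z):
    """Elementwise ReLU."""
    return np.maximum(z, 0.0)


def make_injective_weights(n, seed=0):
    """Build W = [B; -B] of shape (2n, n), i.e. expansivity factor two.

    B is taken to be orthogonal (the Q factor of a Gaussian matrix) purely
    for numerical convenience; any invertible B works. This is an
    illustrative choice, not the paper's stated construction.
    """
    rng = np.random.default_rng(seed)
    B, _ = np.linalg.qr(rng.standard_normal((n, n)))
    W = np.vstack([B, -B])
    return B, W


def invert_layer(y, B):
    """Recover x from y = relu(W x) with W = [B; -B]."""
    n = B.shape[0]
    z = y[:n] - y[n:]          # relu(Bx) - relu(-Bx) equals Bx
    return np.linalg.solve(B, z)


n = 4
B, W = make_injective_weights(n)
x = np.random.default_rng(1).standard_normal(n)
y = relu(W @ x)
x_rec = invert_layer(y, B)
recovery_error = float(np.max(np.abs(x - x_rec)))

# Without expansion, injectivity can fail: with W = I (m = n), two distinct
# all-negative inputs are both mapped to the zero vector.
a = -1.0 * np.ones(n)
b = -2.0 * np.ones(n)
collision = bool(np.array_equal(relu(a), relu(b)))
```

The square case `W = I` shows how a non-expansive layer can collapse distinct inputs, while the stacked `[B; -B]` layer keeps enough information to invert the ReLU exactly.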
