GIST: Distributed Training for Large-Scale Graph Convolutional Networks

Feb 20, 2021

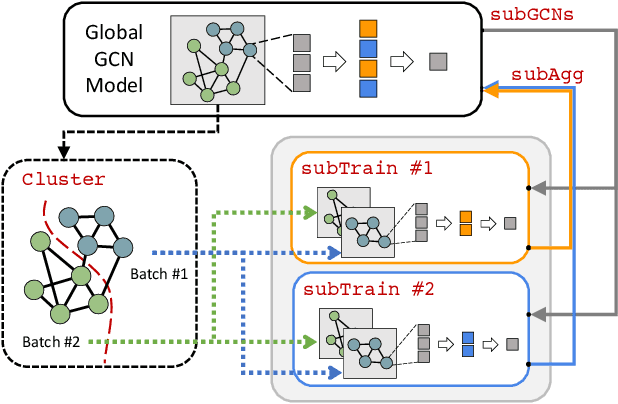

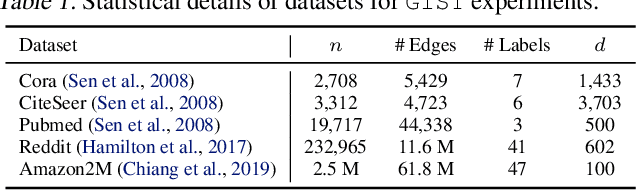

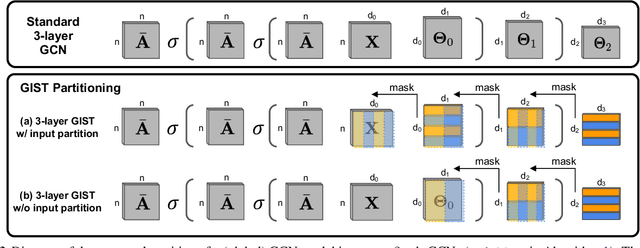

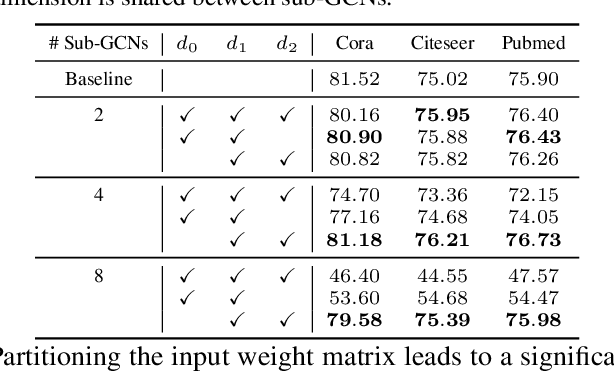

The graph convolutional network (GCN) is a go-to solution for machine learning on graphs, but its training is notoriously difficult to scale in terms of both the size of the graph and the number of model parameters. These limitations are in stark contrast to the increasing scale (in data size and model size) of experiments in deep learning research. In this work, we propose GIST, a novel distributed approach that enables efficient training of wide (overparameterized) GCNs on large graphs. GIST is a hybrid layer and graph sampling method that disjointly partitions the global model into several smaller sub-GCNs, which are trained independently and in parallel across multiple GPUs. This distributed framework improves model performance and significantly decreases wall-clock training time. GIST seeks to enable large-scale GCN experimentation, with the goal of bridging the existing gap in scale between graph machine learning and deep learning.
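To make the idea of a disjoint model partition concrete, here is a minimal sketch. It is not the authors' implementation. It assumes a two-layer GCN whose hidden units are split at random into equal, non-overlapping groups, so that each sub-GCN owns its own columns of the first weight matrix and the matching rows of the second. All function names are hypothetical, local training is replaced by a placeholder update, and the graph-sampling side of the method is not shown.

```python
import random

def partition_indices(width, num_parts, rng):
    """Randomly split range(width) into num_parts disjoint index lists."""
    idx = list(range(width))
    rng.shuffle(idx)
    return [sorted(idx[k::num_parts]) for k in range(num_parts)]

def split_two_layer(w0, w1, parts):
    """Return one (sub_w0, sub_w1) pair per part; no hidden unit is shared."""
    d_in, d_out = len(w0), len(w1[0])
    subs = []
    for part in parts:
        sub_w0 = [[w0[r][c] for c in part] for r in range(d_in)]    # columns of w0
        sub_w1 = [[w1[r][c] for c in range(d_out)] for r in part]   # rows of w1
        subs.append((sub_w0, sub_w1))
    return subs

def merge_two_layer(w0, w1, parts, subs):
    """Copy each sub-GCN's weights back into the global matrices in place."""
    for part, (sub_w0, sub_w1) in zip(parts, subs):
        for j, c in enumerate(part):
            for r in range(len(w0)):
                w0[r][c] = sub_w0[r][j]
            for k in range(len(w1[0])):
                w1[c][k] = sub_w1[j][k]

def fake_local_training(sub, step=1.0):
    """Placeholder for independent training on one GPU: shift weights by `step`."""
    sub_w0, sub_w1 = sub
    return ([[v + step for v in row] for row in sub_w0],
            [[v + step for v in row] for row in sub_w1])

rng = random.Random(0)
d_in, d_hidden, d_out, num_sub_gcns = 3, 8, 2, 4   # illustrative sizes only
w0 = [[0.0] * d_hidden for _ in range(d_in)]       # global first-layer weights
w1 = [[0.0] * d_out for _ in range(d_hidden)]      # global second-layer weights

parts = partition_indices(d_hidden, num_sub_gcns, rng)
subs = [fake_local_training(s) for s in split_two_layer(w0, w1, parts)]
merge_two_layer(w0, w1, parts, subs)
```

Because the index groups are disjoint, every global weight belongs to exactly one sub-GCN, so the write-back step needs no averaging or conflict resolution in this sketch.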
