Generalizing Neural Networks by Reflecting Deviating Data in Production

Paper and Code

Oct 06, 2021

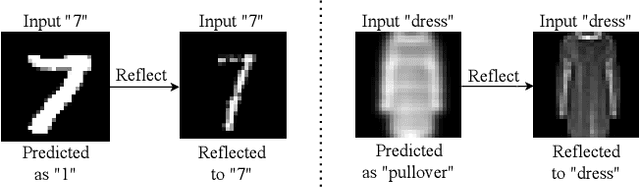

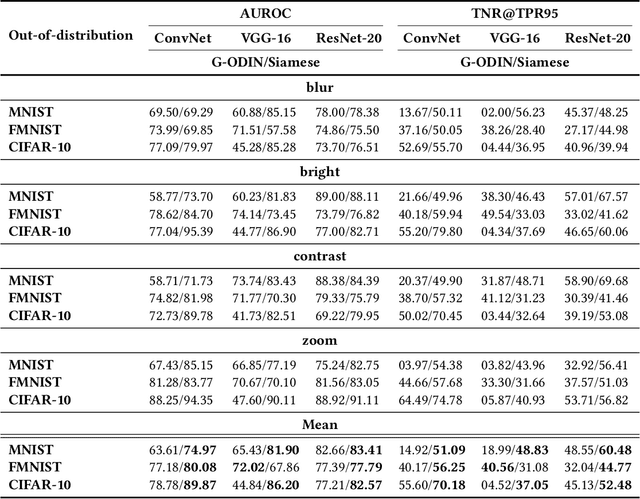

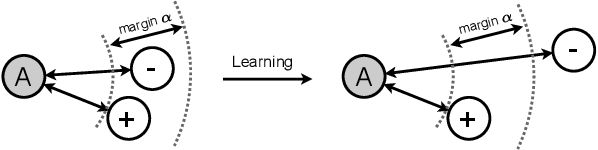

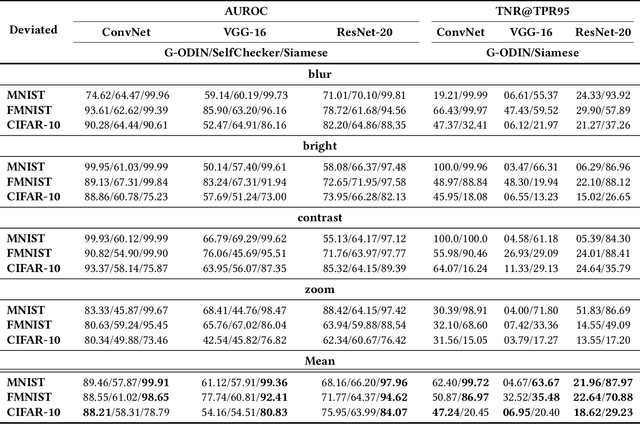

Trained with a sufficiently large training and testing dataset, Deep Neural Networks (DNNs) are expected to generalize. However, inputs may deviate from the training dataset distribution in real deployments. This is a fundamental issue with using a finite dataset. Even worse, real inputs may change over time from the expected distribution. Taken together, these issues may lead deployed DNNs to mis-predict in production. In this work, we present a runtime approach that mitigates DNN mis-predictions caused by the unexpected runtime inputs to the DNN. In contrast to previous work that considers the structure and parameters of the DNN itself, our approach treats the DNN as a blackbox and focuses on the inputs to the DNN. Our approach has two steps. First, it recognizes and distinguishes "unseen" semantically-preserving inputs. For this we use a distribution analyzer based on the distance metric learned by a Siamese network. Second, our approach transforms those unexpected inputs into inputs from the training set that are identified as having similar semantics. We call this process input reflection and formulate it as a search problem over the embedding space on the training set. This embedding space is learned by a Quadruplet network as an auxiliary model for the subject model to improve the generalization. We implemented a tool called InputReflector based on the above two-step approach and evaluated it with experiments on three DNN models trained on CIFAR-10, MNIST, and FMINST image datasets. The results show that InputReflector can effectively distinguish inputs that retain semantics of the distribution (e.g., blurred, brightened, contrasted, and zoomed images) and out-of-distribution inputs from normal inputs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge