Few-shot Segmentation with Optimal Transport Matching and Message Flow


Aug 19, 2021

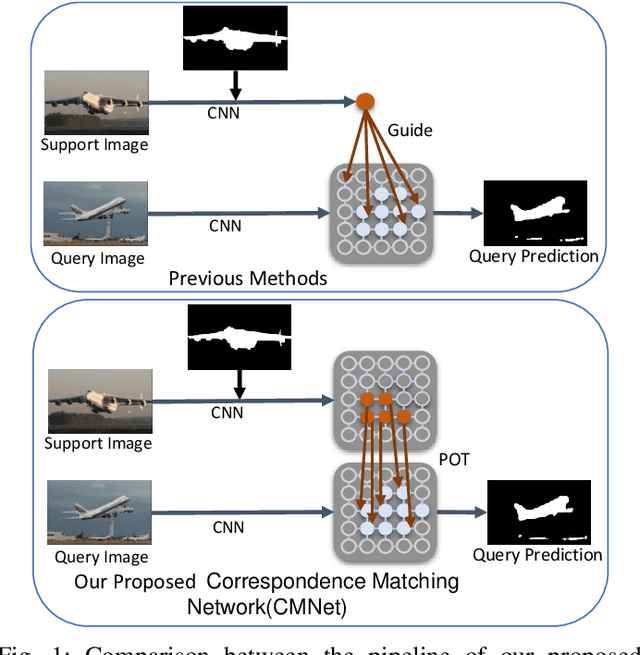

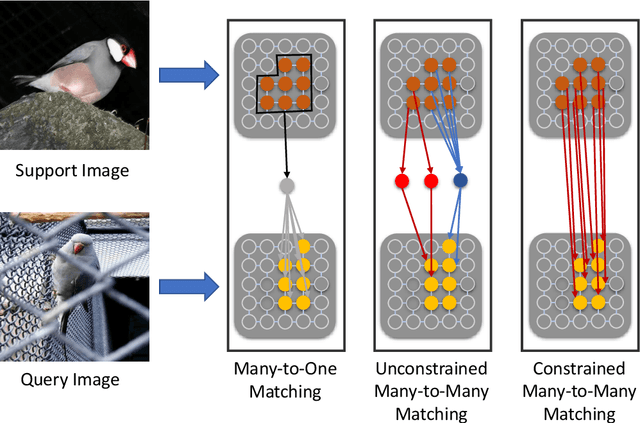

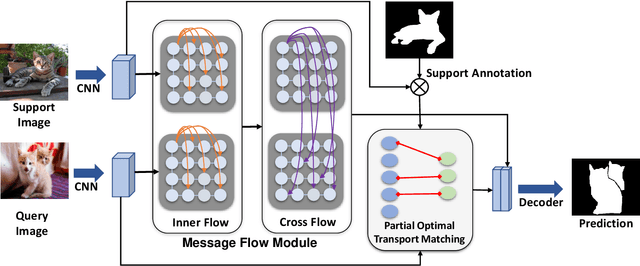

We address the challenging task of few-shot segmentation in this work. Fully utilizing the support information is essential for few-shot semantic segmentation. Previous methods typically adopt masked average pooling over the support feature to extract the support clues as a global vector, which is usually dominated by the salient parts and loses some important clues. In this work, we argue that the information of every support pixel should be transferred to all query pixels, and we propose a Correspondence Matching Network (CMNet) with an Optimal Transport Matching module to mine the correspondence between the query and support images. Besides, it is important to fully utilize both the local and global information from the annotated support images. To this end, we propose a Message Flow module that propagates messages along the inner-flow within the same image and the cross-flow between support and query images, which greatly helps enhance the local feature representations. We further formulate few-shot segmentation as a multi-task learning problem to alleviate the domain gap between different datasets. Experiments on the PASCAL VOC 2012, MS COCO, and FSS-1000 datasets show that our network achieves new state-of-the-art few-shot segmentation performance.
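The abstract contrasts masked average pooling, which collapses the annotated support region into one global vector, with matching every support pixel to every query pixel. It does not say how the Optimal Transport Matching module is formulated, so the following is only a minimal sketch under stated assumptions: entropic optimal transport solved with Sinkhorn iterations, a cosine-distance cost, and uniform marginals. The function names (`masked_average_pooling`, `sinkhorn`, `ot_transfer`) and the parameters `eps` and `n_iters` are hypothetical and are not taken from CMNet.

```python
# Hypothetical sketch: entropic OT (Sinkhorn) with uniform marginals is an assumed
# stand-in for the Optimal Transport Matching module, not CMNet's published code.
import math
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def masked_average_pooling(support: List[Vec], mask: List[int]) -> Vec:
    """Baseline: average the foreground support pixels into one global prototype."""
    fg = [f for f, m in zip(support, mask) if m]
    if not fg:
        raise ValueError("support mask has no foreground pixels")
    return [sum(f[c] for f in fg) / len(fg) for c in range(len(fg[0]))]


def cosine(u: Vec, v: Vec) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)


def sinkhorn(cost: Mat, eps: float = 0.1, n_iters: int = 500) -> Mat:
    """Entropic OT with uniform marginals; rows are query pixels, columns support pixels."""
    n, m = len(cost), len(cost[0])
    a, b = [1.0 / n] * n, [1.0 / m] * m
    K = [[math.exp(-c / eps) for c in row] for row in cost]  # Gibbs kernel
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals a and b.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


def ot_transfer(query: List[Vec], support: List[Vec], mask: List[int],
                eps: float = 0.1, n_iters: int = 500) -> Tuple[List[Vec], Vec, Mat]:
    """Send every support pixel's feature and label to every query pixel via the OT plan."""
    cost = [[1.0 - cosine(q, s) for s in support] for q in query]
    plan = sinkhorn(cost, eps, n_iters)
    feats, soft_mask = [], []
    for row in plan:
        total = sum(row)
        # Plan-weighted average of all support features for this query pixel.
        feats.append([sum(w * s[c] for w, s in zip(row, support)) / total
                      for c in range(len(support[0]))])
        # Fraction of this query pixel's mass that comes from foreground support pixels.
        soft_mask.append(sum(w * m for w, m in zip(row, mask)) / total)
    return feats, soft_mask, plan
```

For example, with `support = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]`, `mask = [1, 0, 1]` and `query = [[0.9, 0.1], [0.1, 0.9]]`, masked average pooling returns the single prototype `[1.0, 0.05]` regardless of which query pixel is considered, while `ot_transfer` gives the first query pixel a foreground score close to 1 and the second a much lower one (roughly one third, because the uniform marginals force it to absorb part of the foreground mass). The uniform column marginals make every support pixel send out the same amount of mass, which is one simple way to keep a few salient pixels from dominating the match.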
