Few-shot Forgery Detection via Guided Adversarial Interpolation


Apr 12, 2022

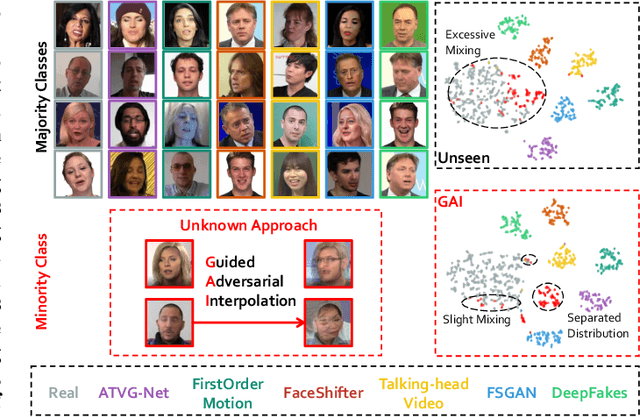

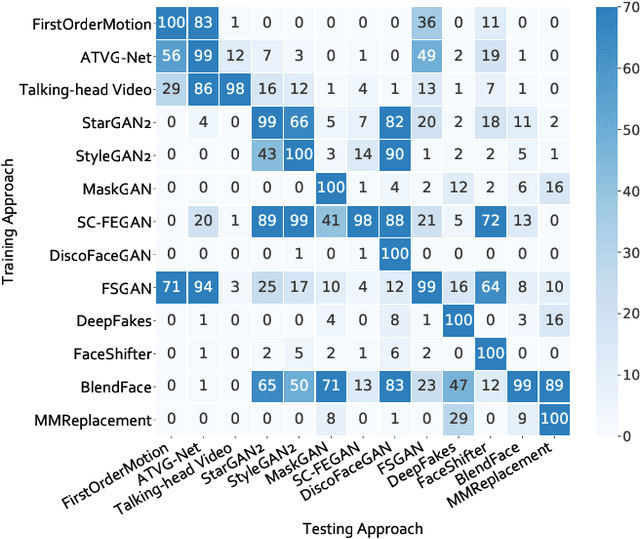

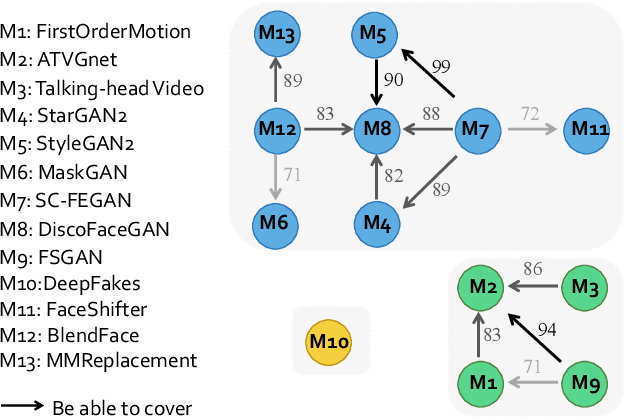

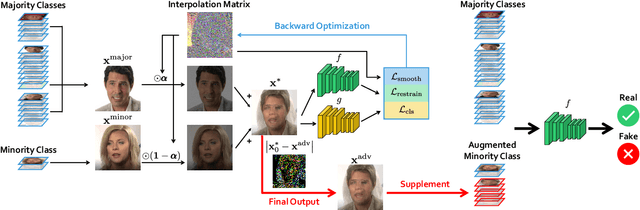

Realistic visual media synthesis is becoming a critical societal issue with the surge of face manipulation models; new forgery approaches emerge at an unprecedented pace. Unfortunately, existing forgery detection methods suffer significant performance drops when applied to novel forgery approaches. In this work, we address the few-shot forgery detection problem by designing a comprehensive benchmark based on coverage analysis among various forgery approaches, and by proposing Guided Adversarial Interpolation (GAI). Our key insight is that transferable distribution characteristics exist between the forgery approaches in the majority classes and those in the minority classes. Specifically, we enhance the discriminative ability against novel forgery approaches by adversarially interpolating the artifacts of the minority samples into the majority samples under the guidance of a teacher network. Unlike standard re-balancing methods, which usually over-fit to the minority classes, our method simultaneously takes into account the diversity of majority information and the significance of minority information. Extensive experiments demonstrate that GAI achieves state-of-the-art performance on the established few-shot forgery detection benchmark. Notably, our method is also shown to be robust to the choice of majority and minority forgery approaches.
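The interpolation idea in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the linear logistic "teacher", the per-feature interpolation coefficients, the gradient-ascent update, and every name and parameter below (`teacher_prob`, `guided_interpolate`, `max_coef`, `lr`, `steps`) are assumptions made purely for illustration. It only shows the general shape of moving a majority sample toward a minority sample while a fixed teacher model steers how much of each feature is mixed in.

```python
import math


def teacher_prob(w, b, x):
    """Hypothetical linear teacher: probability that x is a minority-class forgery."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def guided_interpolate(x_maj, x_min, w, b, steps=50, lr=0.5, max_coef=0.5):
    """Mix minority features into a majority sample, guided by the teacher.

    Each feature i is interpolated as x_maj[i] + coef[i] * (x_min[i] - x_maj[i]).
    The coefficients are updated by gradient ascent on log teacher_prob and
    clipped to [0, max_coef] so the result stays close to the majority sample.
    (Illustrative assumption, not the procedure from the paper.)
    """
    coef = [0.0] * len(x_maj)
    for _ in range(steps):
        x_mix = [a + c * (m - a) for a, m, c in zip(x_maj, x_min, coef)]
        p = teacher_prob(w, b, x_mix)
        # d log(p) / d coef[i] = (1 - p) * w[i] * (x_min[i] - x_maj[i])
        grad = [(1.0 - p) * wi * (m - a) for wi, a, m in zip(w, x_maj, x_min)]
        coef = [min(max_coef, max(0.0, c + lr * g)) for c, g in zip(coef, grad)]
    x_mix = [a + c * (m - a) for a, m, c in zip(x_maj, x_min, coef)]
    return x_mix, coef


# Toy usage with made-up two-dimensional "features".
w, b = [1.0, -1.0], -1.0   # hypothetical teacher weights
x_maj = [0.0, 0.0]         # sample from a majority forgery approach
x_min = [2.0, -2.0]        # sample from a minority (novel) forgery approach
x_mix, coef = guided_interpolate(x_maj, x_min, w, b)
print(coef, teacher_prob(w, b, x_maj), teacher_prob(w, b, x_mix))
```

In this toy setting the mixed sample keeps at most half of the distance to the minority sample in each feature, yet the teacher assigns it a higher minority-class probability than the original majority sample. A real system would operate on images or deep features and use a learned teacher network rather than a fixed linear one.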
