Federated and Generalized Person Re-identification through Domain and Feature Hallucinating

Mar 08, 2022

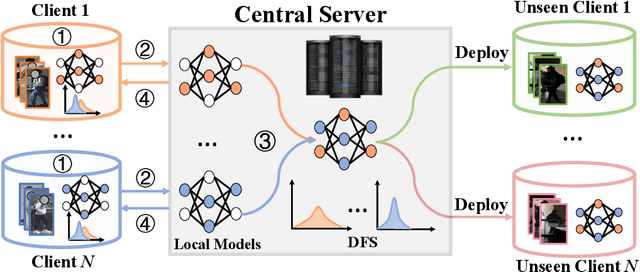

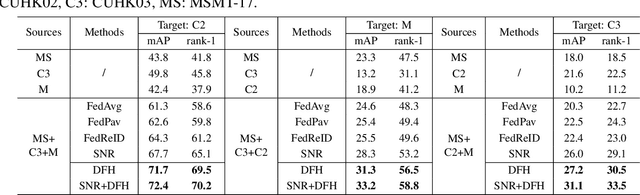

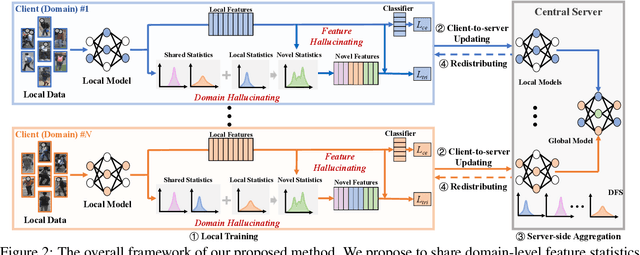

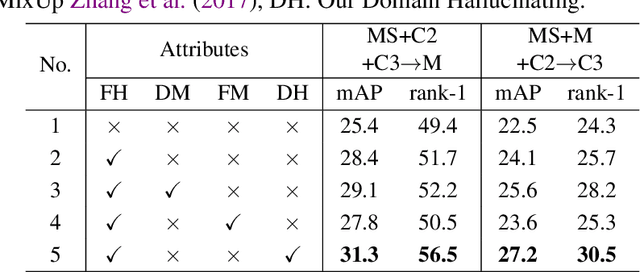

In this paper, we study the problem of federated domain generalization (FedDG) for person re-identification (re-ID), which aims to learn a generalized model from multiple decentralized labeled source domains. A common empirical approach, FedAvg, trains local models individually and averages them to obtain a global model, which is then either fine-tuned locally or deployed in unseen target domains. One drawback of FedAvg is that it neglects the data distributions of other clients during local training, so each local model overfits its local data and the aggregated global model generalizes poorly. To solve this problem, we propose a novel method, called "Domain and Feature Hallucinating (DFH)", which produces diverse features for learning generalized local and global models. Specifically, after each model aggregation, we share the Domain-level Feature Statistics (DFS) among different clients without violating data privacy. During local training, the DFS are used to synthesize novel domain statistics through the proposed domain hallucinating, which re-weights the DFS with random weights. We then propose feature hallucinating, which diversifies local features by scaling and shifting them to the distribution of the obtained novel domain. The synthesized novel features retain the original pair-wise similarities, enabling us to use them to optimize the model in a supervised manner. Extensive experiments verify that the proposed DFH effectively improves the generalization ability of the global model. Our method achieves state-of-the-art performance for FedDG on four large-scale re-ID benchmarks.
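The two hallucination steps can be illustrated with a minimal NumPy sketch. It is written under our own assumptions, not taken from the authors' code: DFS are treated as channel-wise means and standard deviations of flat (N, C) feature vectors, the random re-weighting uses a flat Dirichlet draw so the weights are non-negative and sum to one, and feature hallucinating standardizes features with the local batch statistics before applying the novel statistics (AdaIN-style). The function names `domain_feature_stats`, `hallucinate_domain`, and `hallucinate_features` are hypothetical.

```python
import numpy as np


def domain_feature_stats(features: np.ndarray, eps: float = 1e-6):
    """Channel-wise mean and std of an (N, C) feature matrix from one client.

    Assumption: these vectors stand in for the shared Domain-level Feature
    Statistics (DFS); only these summaries, not samples, are exchanged.
    """
    mu = features.mean(axis=0)
    sigma = np.sqrt(features.var(axis=0) + eps)  # eps avoids division by zero
    return mu, sigma


def hallucinate_domain(dfs_means, dfs_stds, rng: np.random.Generator):
    """Synthesize novel domain statistics by randomly re-weighting the DFS.

    Assumption: weights come from a flat Dirichlet, so the result is a
    convex combination of the clients' statistics.
    """
    means = np.stack(dfs_means)  # (K, C), one row per client
    stds = np.stack(dfs_stds)    # (K, C)
    w = rng.dirichlet(np.ones(len(means)))  # (K,), non-negative, sums to 1
    return w @ means, w @ stds, w


def hallucinate_features(features, novel_mu, novel_sigma, eps: float = 1e-6):
    """Scale and shift local features to the novel domain distribution."""
    mu, sigma = domain_feature_stats(features, eps)
    normalized = (features - mu) / sigma  # remove the local domain statistics
    return normalized * novel_sigma + novel_mu  # apply the novel statistics


# Usage: three simulated clients with different feature distributions.
rng = np.random.default_rng(0)
C = 8
clients = [rng.normal(loc=k, scale=1 + k, size=(64, C)) for k in range(3)]
dfs = [domain_feature_stats(f) for f in clients]  # shared after aggregation

novel_mu, novel_sigma, w = hallucinate_domain(
    [m for m, _ in dfs], [s for _, s in dfs], rng
)
local = clients[0]  # features of the client currently training
synth = hallucinate_features(local, novel_mu, novel_sigma)
```

In this sketch, only per-client mean and standard deviation vectors are exchanged, so no raw images or per-sample features leave a client. Each synthesized row keeps the identity label of the local row it came from, which is what allows the hallucinated features to be fed to the same supervised re-ID losses.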