Feature Erasing and Diffusion Network for Occluded Person Re-Identification

Paper and Code

Dec 16, 2021

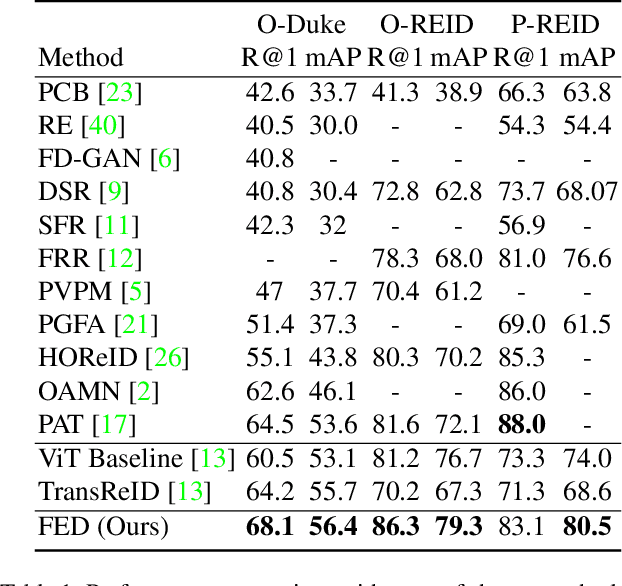

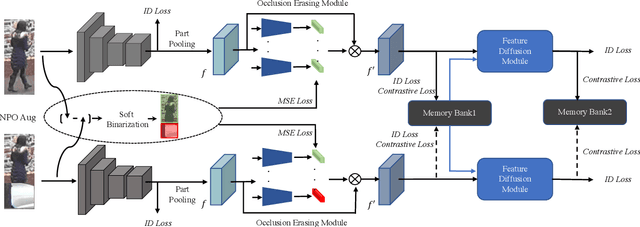

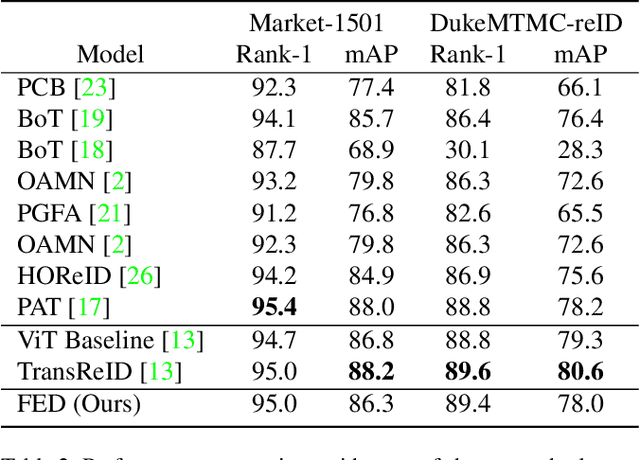

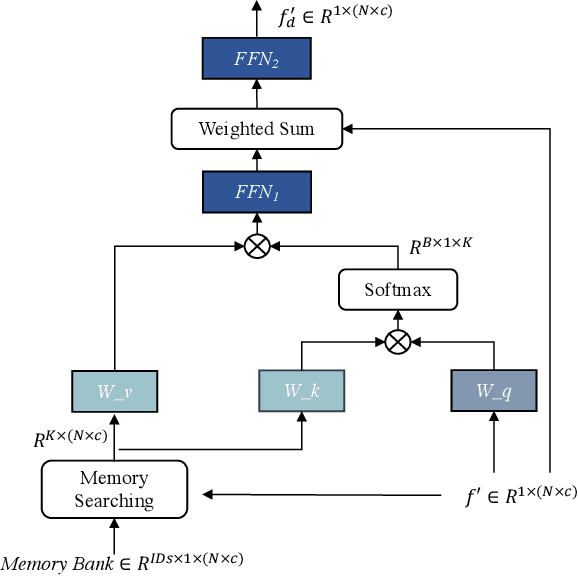

Occluded person re-identification (ReID) aims at matching occluded person images to holistic ones across different camera views. Target Pedestrians (TP) are usually disturbed by Non-Pedestrian Occlusions (NPO) and Non-Target Pedestrians (NTP). Previous methods mainly focus on increasing the model's robustness against NPO while ignoring feature contamination from NTP. In this paper, we propose a novel Feature Erasing and Diffusion Network (FED) to simultaneously handle NPO and NTP. Specifically, NPO features are eliminated by our proposed Occlusion Erasing Module (OEM), aided by the NPO augmentation strategy, which simulates NPO on holistic pedestrian images and generates precise occlusion masks. Subsequently, we diffuse the pedestrian representations with other memorized features to synthesize NTP characteristics in the feature space, which is achieved by a novel Feature Diffusion Module (FDM) through a learnable cross-attention mechanism. With the guidance of the occlusion scores from OEM, the feature diffusion process is mainly conducted on visible body parts, which guarantees the quality of the synthesized NTP characteristics. By jointly optimizing OEM and FDM in our proposed FED network, we can greatly improve the model's perception ability towards TP and alleviate the influence of NPO and NTP. Furthermore, the proposed FDM only works as an auxiliary module for training and is discarded in the inference phase, thus introducing little inference computational overhead. Experiments on occluded and holistic person ReID benchmarks demonstrate the superiority of FED over state-of-the-art methods: FED achieves 86.3% Rank-1 accuracy on Occluded-REID, surpassing others by at least 4.7%.
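The NPO augmentation idea in the abstract (paste a synthetic occluder onto a holistic image and record exactly which regions it covers) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `simulate_npo`, the split into horizontal stripes, the `num_parts` and `occ_threshold` parameters, and the use of plain nested lists as images are all assumptions made for illustration.

```python
import random


def simulate_npo(image, patch, num_parts=4, occ_threshold=0.5, rng=None):
    """Paste `patch` at a random position on a copy of `image`.

    Hypothetical helper, not taken from the paper's code.
    image, patch: 2D lists (rows of equal length) of pixel values.
    Returns (augmented, part_mask), where part_mask[i] is 1 if at least
    `occ_threshold` of horizontal stripe i is covered by the patch.
    """
    rng = rng or random.Random()
    h, w = len(image), len(image[0])
    ph, pw = len(patch), len(patch[0])
    if ph > h or pw > w:
        raise ValueError("patch must fit inside the image")
    if num_parts < 1 or num_parts > h:
        raise ValueError("num_parts must be between 1 and the image height")

    # Random top-left corner; randint bounds are inclusive.
    top = rng.randint(0, h - ph)
    left = rng.randint(0, w - pw)

    augmented = [row[:] for row in image]   # leave the input untouched
    covered = [[0] * w for _ in range(h)]   # pixel-level occlusion mask
    for r in range(ph):
        for c in range(pw):
            augmented[top + r][left + c] = patch[r][c]
            covered[top + r][left + c] = 1

    # Reduce the pixel mask to one label per horizontal stripe (assumed
    # here to stand in for a body part).
    part_mask = []
    for i in range(num_parts):
        start = i * h // num_parts
        end = (i + 1) * h // num_parts
        area = (end - start) * w
        occluded = sum(sum(covered[r]) for r in range(start, end))
        part_mask.append(1 if occluded / area >= occ_threshold else 0)
    return augmented, part_mask
```

Because the occluder is placed synthetically, the mask is exact by construction, which is what makes it usable as a supervision signal for an occlusion-scoring module. In a real pipeline the patch would presumably be an image crop of an object or background region rather than a constant block.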
