Faster Algorithm and Sharper Analysis for Constrained Markov Decision Process

Oct 20, 2021

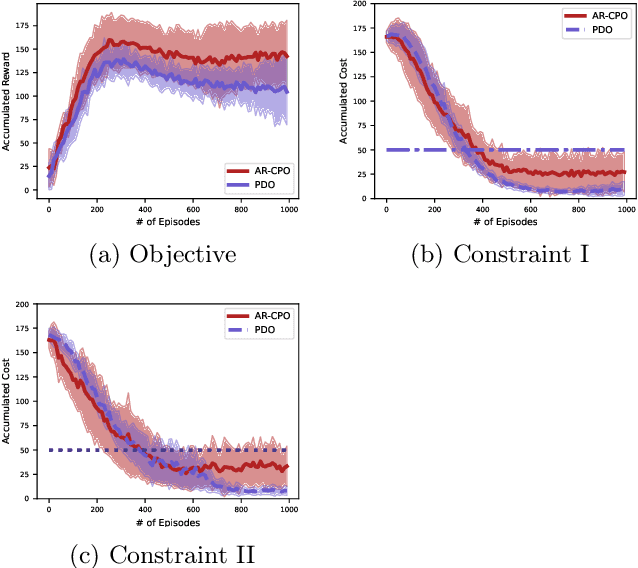

We study the constrained Markov decision process (CMDP), in which an agent aims to maximize the expected accumulated discounted reward subject to multiple constraints on its utilities/costs. We propose a new primal-dual approach that integrates three ingredients: an entropy-regularized policy optimizer, a dual variable regularizer, and Nesterov's accelerated gradient descent as the dual optimizer, all of which are critical to achieving faster convergence. We characterize the finite-time error bound of the proposed approach. Although the objective and the constraints are both nonconcave, the approach is shown to converge to the global optimum with a complexity of $\tilde{\mathcal O}(1/\epsilon)$ in terms of both the optimality gap and the constraint violation, which improves on the complexity of existing primal-dual approaches (Ding et al., 2020; Paternain et al., 2019) by a factor of $\mathcal O(1/\epsilon)$. This is the first demonstration that nonconcave CMDP problems can attain the complexity lower bound of $\mathcal O(1/\epsilon)$ for convex optimization subject to convex constraints. Our primal-dual approach and non-asymptotic analysis are agnostic to the RL optimizer used, and are therefore more flexible in practical applications. More generally, our approach is also the first algorithm that provably accelerates constrained nonconvex optimization with zero duality gap by exploiting geometric structure such as the gradient dominance condition, a setting in which existing acceleration methods for constrained convex optimization are not applicable.
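
To make the three ingredients concrete, the following is a minimal sketch on a tabular CMDP with a single utility constraint. It is not the paper's algorithm or its parameter choices: the function names (`soft_optimal_policy`, `policy_value`, `solve_cmdp`), the use of soft value iteration as the entropy-regularized policy optimizer, the quadratic dual regularizer `mu / 2 * lam**2`, the momentum schedule `k / (k + 3)`, and all step sizes are assumptions made for illustration. The dual gradient uses the fact that, for a fixed multiplier, the entropy-regularized maximizer is unique, so the derivative of the dual function is the constraint slack of that policy.

```python
import math

def soft_optimal_policy(P, reward, gamma, tau, sweeps=200):
    """Entropy-regularized (soft) value iteration; returns the softmax policy."""
    n_s, n_a = len(reward), len(reward[0])
    V = [0.0] * n_s
    for _ in range(sweeps + 1):
        Q = [[reward[s][a] + gamma * sum(P[s][a][t] * V[t] for t in range(n_s))
              for a in range(n_a)] for s in range(n_s)]
        top = [max(row) for row in Q]  # subtract the max for numerical stability
        Z = [sum(math.exp((q - top[s]) / tau) for q in Q[s]) for s in range(n_s)]
        V = [top[s] + tau * math.log(Z[s]) for s in range(n_s)]
    return [[math.exp((q - top[s]) / tau) / Z[s] for q in Q[s]] for s in range(n_s)]

def policy_value(P, payoff, pi, gamma, rho, sweeps=200):
    """Discounted value of `payoff` under `pi`, averaged over initial distribution `rho`."""
    n_s, n_a = len(payoff), len(payoff[0])
    V = [0.0] * n_s
    for _ in range(sweeps):
        V = [sum(pi[s][a] * (payoff[s][a]
                             + gamma * sum(P[s][a][t] * V[t] for t in range(n_s)))
                 for a in range(n_a)) for s in range(n_s)]
    return sum(rho[s] * V[s] for s in range(n_s))

def solve_cmdp(P, r, g, b, gamma, rho, tau=0.1, mu=0.01, step=0.1, iters=500):
    """Projected Nesterov-style descent on a regularized dual (illustrative only).

    Targets: maximize V_r(pi) subject to V_g(pi) >= b, with multiplier lam >= 0.
    """
    def shaped(lam):
        # Lagrangian reward r + lam * g
        return [[r[s][a] + lam * g[s][a] for a in range(len(r[0]))]
                for s in range(len(r))]

    lam = lam_prev = 0.0
    for k in range(iters):
        y = lam + k / (k + 3) * (lam - lam_prev)       # momentum extrapolation
        pi = soft_optimal_policy(P, shaped(y), gamma, tau)
        grad = policy_value(P, g, pi, gamma, rho) - b + mu * y  # dual gradient at y
        lam_prev, lam = lam, max(0.0, y - step * grad)  # project onto lam >= 0
    return soft_optimal_policy(P, shaped(lam), gamma, tau), lam

# Toy problem (hypothetical): one state, two actions.
# Action 0 earns reward, action 1 earns utility; require V_g >= 1 with gamma = 0.5.
P = [[[1.0], [1.0]]]   # P[s][a][s_next]
r = [[1.0, 0.0]]
g = [[0.0, 1.0]]
pi, lam = solve_cmdp(P, r, g, b=1.0, gamma=0.5, rho=[1.0])
print("policy:", pi[0], "multiplier:", lam)
print("utility value:", policy_value(P, g, pi, 0.5, [1.0]))
```

In this toy problem the unregularized optimum splits probability evenly between the two actions. The two regularizers `tau` and `mu` introduce a small bias, so the returned policy is expected to satisfy the constraint only approximately; shrinking them reduces the bias but also makes the dual less smooth, which calls for a smaller `step`.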
