Fairness in generative modeling

Paper and Code

Oct 06, 2022

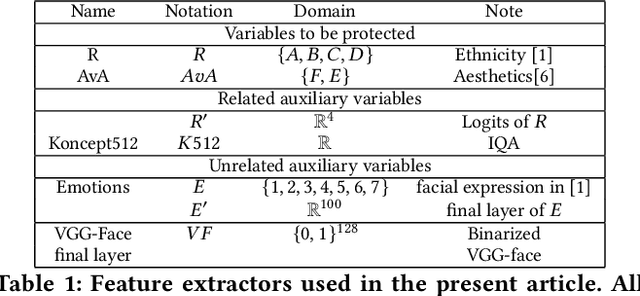

We design general-purpose algorithms for addressing fairness issues and mode collapse in generative modeling. To cover as many sensitive variables as possible, including ones we might not be aware of, we assume no prior knowledge of sensitive variables: our algorithms rely on unsupervised fairness only, meaning that our fairness-improving methods use no information related to the sensitive variables. All images of faces (even generated ones) have been removed to mitigate legal risks.

* GECCO '22: Genetic and Evolutionary Computation Conference, Jul 2022, Boston, Massachusetts, United States. pp.320-323
