Facial Depth and Normal Estimation using Single Dual-Pixel Camera

Paper and Code

Nov 25, 2021

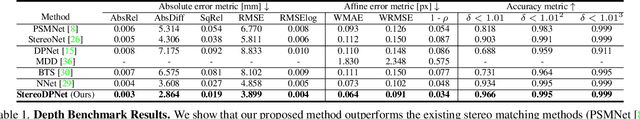

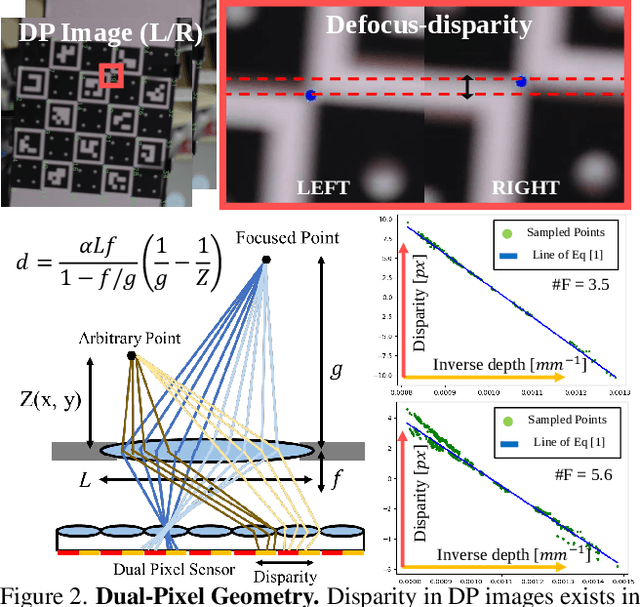

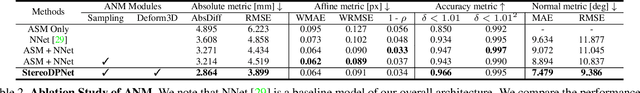

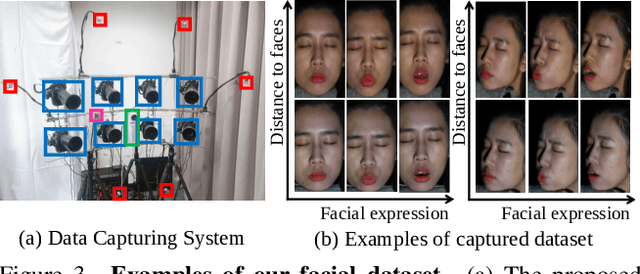

Many mobile manufacturers have recently adopted Dual-Pixel (DP) sensors in their flagship models for faster auto-focus and aesthetic image capture. Despite these advantages, research on their use for 3D facial understanding has been limited by the lack of datasets and algorithmic designs that exploit parallax in DP images. This is because the baseline between the sub-aperture images is extremely narrow and parallax exists in the defocus blur region. In this paper, we introduce a DP-oriented Depth/Normal network that reconstructs 3D facial geometry. For this purpose, we collect a DP facial dataset with more than 135K images of 101 people, captured with our multi-camera structured light systems. It contains the corresponding ground-truth 3D models, including depth maps and surface normals in metric scale. Our dataset allows the proposed matching network to generalize for 3D facial depth/normal estimation. The proposed network consists of two novel modules, the Adaptive Sampling Module and the Adaptive Normal Module, which are specialized in handling the defocus blur in DP images. Finally, the proposed method achieves state-of-the-art performance over recent DP-based depth/normal estimation methods. We also demonstrate the applicability of the estimated depth/normal to face spoofing and relighting.
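To illustrate how the two ground-truth quantities mentioned above relate, the following is a minimal sketch that derives a surface normal map from a metric depth map by finite differences. It is not the paper's network or its Adaptive Normal Module. The function name `normals_from_depth`, the pinhole intrinsics `fx`, `fy`, `cx`, `cy`, and the convention that normals point toward the camera (negative z) are all assumptions made for this example.

```python
import numpy as np

def normals_from_depth(depth, fx, fy, cx, cy):
    """Estimate per-pixel unit normals from a metric depth map.

    Assumes a pinhole camera (hypothetical intrinsics fx, fy, cx, cy)
    with +z pointing away from the camera.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w, dtype=np.float64),
                       np.arange(h, dtype=np.float64))
    z = depth.astype(np.float64)

    # Back-project every pixel to a 3D point in camera coordinates.
    points = np.stack([(u - cx) * z / fx, (v - cy) * z / fy, z], axis=-1)

    # Finite-difference tangent vectors along image rows (v) and columns (u).
    d_dv, d_du = np.gradient(points, axis=(0, 1))

    # cross(d_dv, d_du) points toward the camera for a visible surface.
    n = np.cross(d_dv, d_du)
    norm = np.linalg.norm(n, axis=-1, keepdims=True)
    return n / np.clip(norm, 1e-12, None)

# Usage: a fronto-parallel plane 0.5 m from the camera.
flat = np.full((4, 5), 0.5)
flat_normals = normals_from_depth(flat, fx=500.0, fy=500.0, cx=2.0, cy=1.5)
```

For the flat plane in the usage example, every normal faces straight back at the camera. On real face scans, depth discontinuities and noise would make a plain finite-difference estimate unreliable near boundaries, which is one reason a dataset with separately supplied ground-truth normals is useful.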
