FaceSigns: Semi-Fragile Neural Watermarks for Media Authentication and Countering Deepfakes


Apr 05, 2022

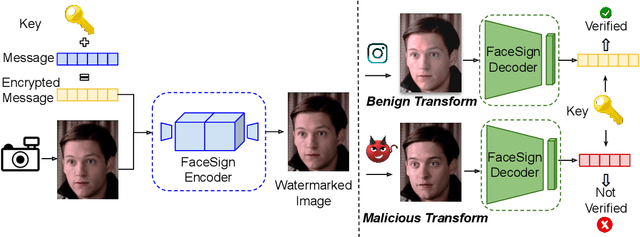

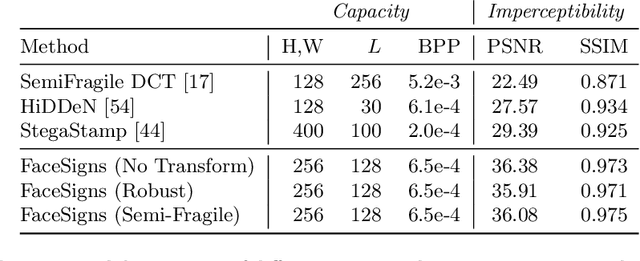

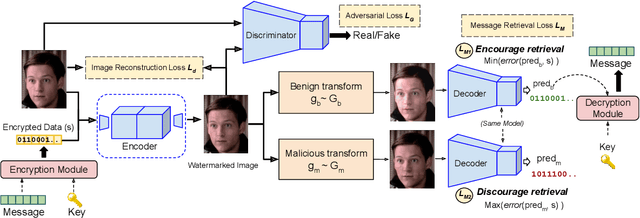

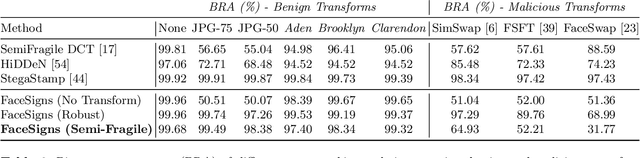

Deepfakes and manipulated media are becoming a prominent threat due to recent advances in realistic image and video synthesis techniques. There have been several attempts at combating Deepfakes using machine learning classifiers. However, such classifiers do not generalize well to black-box image synthesis techniques and have been shown to be vulnerable to adversarial examples. To address these challenges, we introduce a deep-learning-based semi-fragile watermarking technique that allows media authentication by verifying an invisible secret message embedded in the image pixels. Instead of identifying and detecting fake media using visual artifacts, we propose to proactively embed a semi-fragile watermark into a real image so that we can prove its authenticity when needed. Our watermarking framework is designed to be fragile to facial manipulations or tampering while being robust to benign image-processing operations such as image compression, scaling, and saturation and contrast adjustments. This allows images shared over the internet to retain the verifiable watermark as long as face-swapping or any other Deepfake modification technique is not applied. We demonstrate that FaceSigns can embed a 128-bit secret as an imperceptible image watermark that can be recovered with high bit recovery accuracy at several compression levels, while being non-recoverable when unseen Deepfake manipulations are applied. For a set of unseen benign and Deepfake manipulations studied in our work, FaceSigns can reliably detect manipulated content with an AUC score of 0.996, which is significantly higher than prior image watermarking and steganography techniques.
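
To make the verification step concrete, the following is a minimal sketch of how bit recovery accuracy could be turned into an authenticity decision. It is not the FaceSigns implementation: the encoder and decoder networks are replaced by simulated bit flips, the function names `bit_recovery_accuracy` and `is_authentic` are hypothetical, and the 0.9 threshold is an illustrative assumption rather than a value taken from the paper.

```python
import random


def bit_recovery_accuracy(secret, decoded):
    """Fraction of positions where the decoded bit equals the embedded bit."""
    if len(secret) != len(decoded):
        raise ValueError("messages must have the same length")
    matches = sum(s == d for s, d in zip(secret, decoded))
    return matches / len(secret)


def is_authentic(secret, decoded, threshold=0.9):
    """Hypothetical decision rule: accept the image when enough bits survive.

    The threshold is an assumption chosen for illustration only.
    """
    return bit_recovery_accuracy(secret, decoded) >= threshold


# A 128-bit secret, matching the message length stated in the abstract.
rng = random.Random(0)
secret = [rng.randint(0, 1) for _ in range(128)]

# Simulated benign processing (e.g. compression): a few bits are flipped.
benign = list(secret)
for i in (5, 40, 99):
    benign[i] ^= 1

# Simulated facial manipulation: half of the bits are flipped, so the
# decoded message is no better than a coin toss.
tampered = [b ^ 1 if i % 2 == 0 else b for i, b in enumerate(secret)]

print(bit_recovery_accuracy(secret, benign), is_authentic(secret, benign))
print(bit_recovery_accuracy(secret, tampered), is_authentic(secret, tampered))
```

In this toy setup the benign copy keeps 125 of 128 bits and passes the check, while the tampered copy drops to 50% accuracy and is rejected. Sweeping the threshold over such accuracy scores for many benign and manipulated images is one way a detection AUC like the one reported above could be computed.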
