Every Annotation Counts: Multi-label Deep Supervision for Medical Image Segmentation


Apr 27, 2021

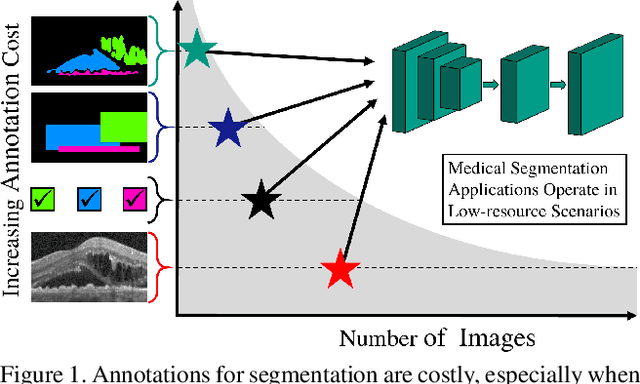

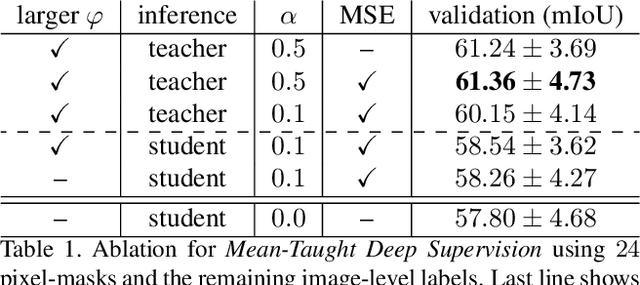

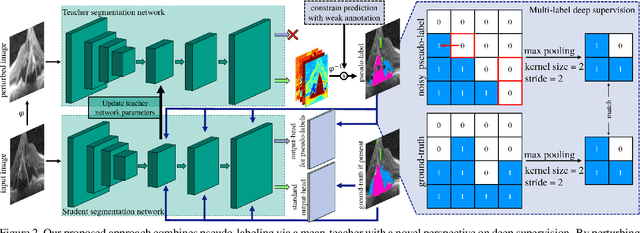

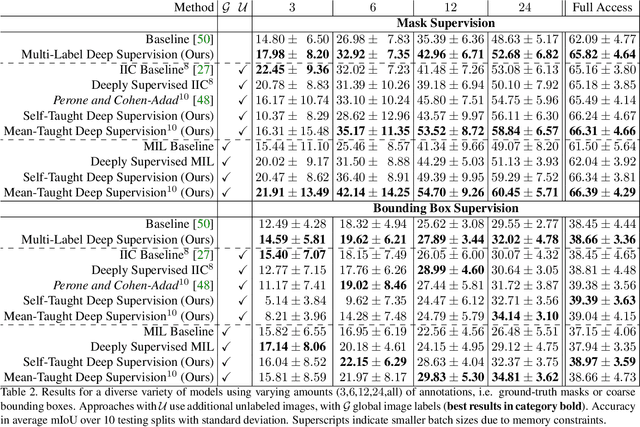

Pixel-wise segmentation is one of the most data- and annotation-hungry tasks in our field. Providing representative and accurate annotations is often mission-critical, especially for challenging medical applications. In this paper, we propose a semi-weakly supervised segmentation algorithm to overcome this barrier. Our approach is based on a new formulation of deep supervision and a student-teacher model, and it allows for easy integration of different supervision signals. In contrast to previous work, we show that care has to be taken in how deep supervision is integrated in lower layers, and we present multi-label deep supervision as the most important ingredient for success. With our novel training regime for segmentation, which flexibly makes use of images that are fully labeled, marked with bounding boxes, annotated with global labels only, or not labeled at all, we are able to cut the requirement for expensive labels by 94.22%, narrowing the gap to the best fully supervised baseline to only 5% mean IoU. Our approach is validated by extensive experiments on retinal fluid segmentation, and we provide an in-depth analysis of the anticipated effect each annotation type can have in boosting segmentation performance.
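The abstract does not spell out how multi-label deep supervision is computed, so the following is only a minimal sketch of one plausible reading, not the authors' implementation. It assumes that a full-resolution mask is reduced to the resolution of a lower decoder layer by marking every class that occurs inside each coarse cell, which gives a multi-hot target suited to a per-class binary cross-entropy loss. The function names `multilabel_downsample` and `binary_cross_entropy`, the block size `factor`, and the toy mask are all hypothetical.

```python
import numpy as np


def multilabel_downsample(mask: np.ndarray, num_classes: int, factor: int) -> np.ndarray:
    """Convert an (H, W) integer mask into a multi-hot target of shape
    (num_classes, H // factor, W // factor).

    A class is marked present in a coarse cell if at least one pixel of the
    corresponding factor x factor block carries that class (assumed scheme).
    """
    h, w = mask.shape
    if h % factor or w % factor:
        raise ValueError("mask height and width must be divisible by factor")
    # One-hot encode the mask: shape (C, H, W), dtype bool.
    one_hot = mask[None, :, :] == np.arange(num_classes)[:, None, None]
    # Split the spatial axes into blocks: shape (C, H/f, f, W/f, f).
    blocks = one_hot.reshape(num_classes, h // factor, factor, w // factor, factor)
    # Max-pool the one-hot maps: a class is "on" if present anywhere in the block.
    return blocks.any(axis=(2, 4)).astype(np.float32)


def binary_cross_entropy(probs: np.ndarray, targets: np.ndarray, eps: float = 1e-7) -> float:
    """Mean per-class binary cross-entropy between probabilities and multi-hot targets."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0 - eps)
    targets = np.asarray(targets, dtype=np.float64)
    loss = -(targets * np.log(probs) + (1.0 - targets) * np.log(1.0 - probs))
    return float(loss.mean())


# Toy 4x4 mask with four classes (0 = background), reduced by a factor of 2.
mask = np.array([
    [0, 0, 1, 1],
    [0, 2, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 3],
])
target = multilabel_downsample(mask, num_classes=4, factor=2)

# Hypothetical low-resolution predictions (sigmoid outputs), here uninformative.
uniform_probs = np.full(target.shape, 0.5)
loss = binary_cross_entropy(uniform_probs, target)
```

In this toy case the top-left coarse cell contains both background and class 2, so both entries are set in the target. A single-label downsampling such as nearest-neighbor would have to pick one of them, which is the kind of information loss a multi-label target avoids under the assumption made here.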
