End-to-End Hyperspectral-Depth Imaging with Learned Diffractive Optics


Sep 01, 2020

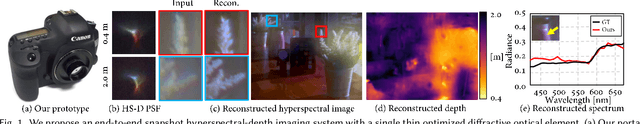

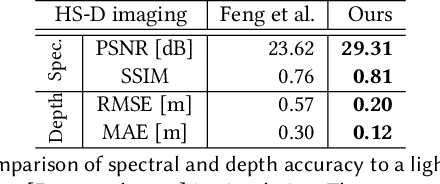

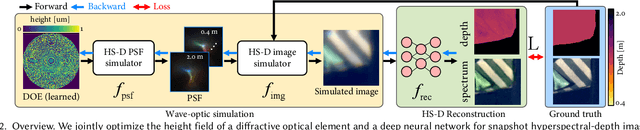

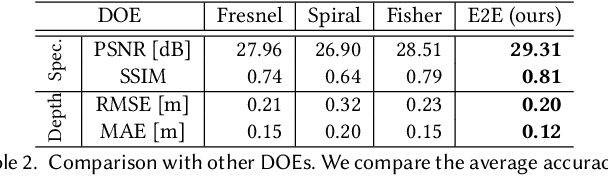

To extend the capabilities of spectral imaging, hyperspectral and depth imaging have been combined to capture higher-dimensional visual information. However, combining the two increases the form factor of the imaging system, which limits the applicability of this technology. In this work, we propose a monocular imaging system that simultaneously captures hyperspectral-depth (HS-D) scene information with an optimized diffractive optical element (DOE). In the training phase, this DOE is optimized jointly with a convolutional neural network that estimates HS-D data from a snapshot input. To study the natural image statistics of this high-dimensional visual data and to enable such a machine-learning-based DOE training procedure, we record two HS-D datasets. One is used for end-to-end optimization in deep optical HS-D imaging, and the other is used to enhance reconstruction performance with a real DOE prototype. The optimized DOE is fabricated with a grayscale lithography process and inserted into a portable HS-D camera prototype, which is shown to robustly capture HS-D information. In extensive evaluations, we demonstrate that our deep optical imaging system achieves state-of-the-art results for HS-D imaging and that the optimized DOE outperforms alternative optical designs.
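To make concrete why a single DOE can encode spectral information in a snapshot, the following is a minimal sketch of how a DOE height map produces a different point spread function (PSF) at each wavelength. It is not the authors' implementation. It assumes a thin phase element, a far-field (Fraunhofer) model in which the PSF is the squared magnitude of the Fourier transform of the pupil, and a wavelength-independent refractive index of 1.5. The function name `doe_psf`, the random height map, and all parameter values are hypothetical, and the depth-dependent defocus term is omitted for brevity.

```python
import numpy as np


def doe_psf(height_map, wavelength, refractive_index=1.5, aperture=None):
    """Far-field PSF of a thin phase element, normalised to unit sum.

    Assumptions (not taken from the paper): Fraunhofer propagation and a
    refractive index that does not vary with wavelength.
    """
    # Phase delay introduced by the surface relief at this wavelength.
    phase = 2.0 * np.pi / wavelength * (refractive_index - 1.0) * height_map
    if aperture is None:
        aperture = np.ones_like(height_map)
    pupil = aperture * np.exp(1j * phase)
    # Centered 2D Fourier transform of the pupil function.
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()


# Hypothetical height map in metres; a learned DOE would replace this.
rng = np.random.default_rng(0)
height = rng.uniform(0.0, 1.0e-6, size=(64, 64))

# One PSF per wavelength (in metres); the same relief yields different PSFs.
wavelengths = (450e-9, 550e-9, 650e-9)
psfs = {wl: doe_psf(height, wl) for wl in wavelengths}
```

In an end-to-end setting, the height map would be a trainable parameter and the PSF computation would be written in a differentiable framework, so that gradients from the reconstruction loss reach the optics. The NumPy version above only illustrates the forward model.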
