Efficient Reinforcement Learning with a Mind-Game for Full-Length StarCraft II

Paper and Code

Mar 02, 2019

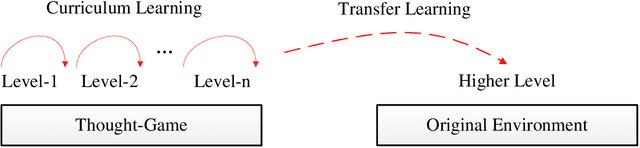

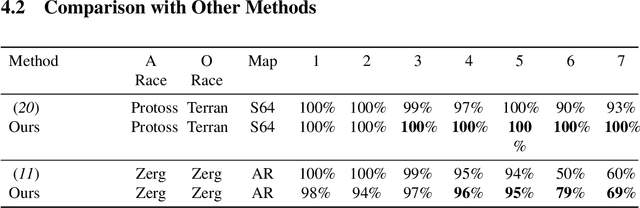

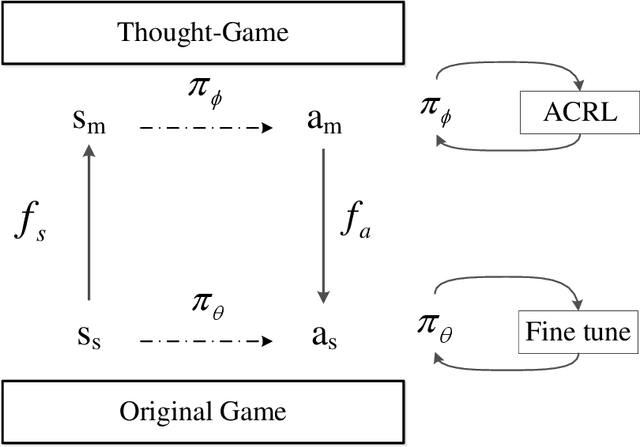

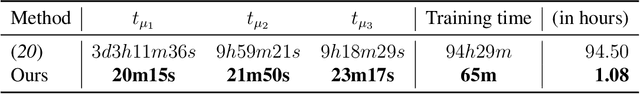

StarCraft II provides an extremely challenging platform for reinforcement learning due to its huge state space and long game length. The previous fastest method requires days to train a full-length game policy on a single commercial machine. In this paper, we introduce the mind-game, an abstract task model, to facilitate reinforcement learning. The policy is first trained quickly in the mind-game and is then mapped to the real game for a second phase of training. In our experiments, the trained agent achieves a 100% win rate on the map Simple64 against the most difficult non-cheating built-in bot (level-7), and training is 100 times faster than previous methods under the same computational resources. To test the generalization performance of the agent, a Golden-level StarCraft II Ladder human player competed against the agent. With a restricted strategy, the agent beat the human player in 4 out of 5 games. The mind-game approach might shed some light on further studies of efficient reinforcement learning. The code is publicly available (https://github.com/mindgameSC2/mind-SC2).
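
The two-phase idea described above (train in a cheap abstract model, then continue training the same policy in the real task) can be illustrated with a toy sketch. This is not the authors' implementation and has nothing to do with StarCraft II itself: it assumes tabular Q-learning on a hypothetical six-state chain, where `ChainEnv`, `q_learning`, `greedy_policy`, and the `step_cost` parameter are all names invented for illustration. The "mind-game" stand-in omits a per-step cost that the "real game" stand-in includes.

```python
import random


class ChainEnv:
    """Hypothetical toy task: walk right along a chain to reach the goal state.

    step_cost=0.0 plays the role of the abstract "mind-game";
    step_cost>0.0 plays the role of the more detailed "real game".
    """

    def __init__(self, length=6, step_cost=0.0):
        self.length = length
        self.step_cost = step_cost
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action 0 moves left, action 1 moves right; walls clamp the position
        move = 1 if action == 1 else -1
        self.state = max(0, min(self.length - 1, self.state + move))
        done = self.state == self.length - 1
        reward = 1.0 if done else -self.step_cost
        return self.state, reward, done


def q_learning(env, q, episodes, rng, alpha=0.5, gamma=0.9,
               epsilon=0.2, max_steps=50):
    """Update the Q-table `q` in place with epsilon-greedy Q-learning."""
    for _ in range(episodes):
        s = env.reset()
        for _ in range(max_steps):
            # explore with probability epsilon, or when both actions tie
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s2, r, done = env.step(a)
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
            if done:
                break
    return q


def greedy_policy(q):
    """Return the greedy action per state (ties resolve to 'right')."""
    return [1 if right >= left else 0 for left, right in q]


rng = random.Random(0)
q_table = [[0.0, 0.0] for _ in range(6)]

# Phase 1: many cheap episodes in the abstract model.
mind_game = ChainEnv(step_cost=0.0)
q_learning(mind_game, q_table, episodes=200, rng=rng)
pretrained_policy = greedy_policy(q_table)

# Phase 2: the same table is carried over and refined in the "real" task.
real_game = ChainEnv(step_cost=0.01)
q_learning(real_game, q_table, episodes=20, rng=rng)
final_policy = greedy_policy(q_table)
```

The design point the sketch tries to capture is only that the second phase starts from the values learned in the first phase rather than from scratch, so far fewer episodes are spent in the more expensive environment. How the actual mind-game is built and how its policy is mapped to the real game are described in the paper and repository, not here.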
