Efficient Non-Exemplar Class-Incremental Learning with Retrospective Feature Synthesis


Nov 03, 2024

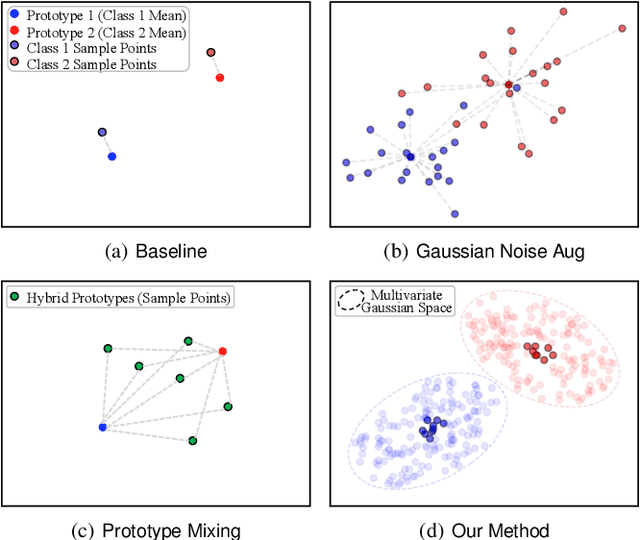

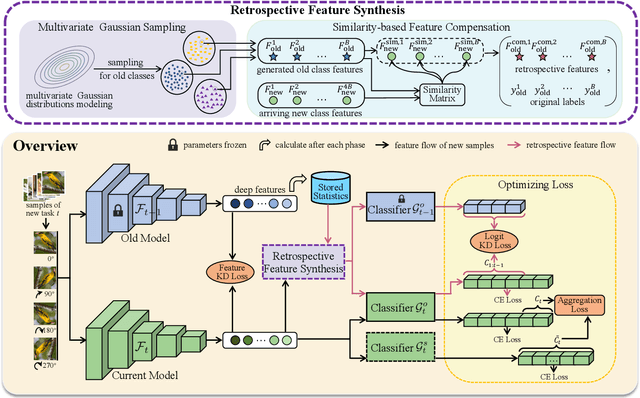

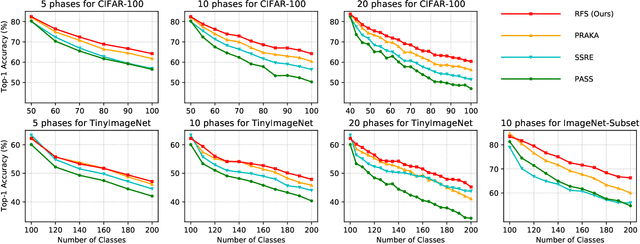

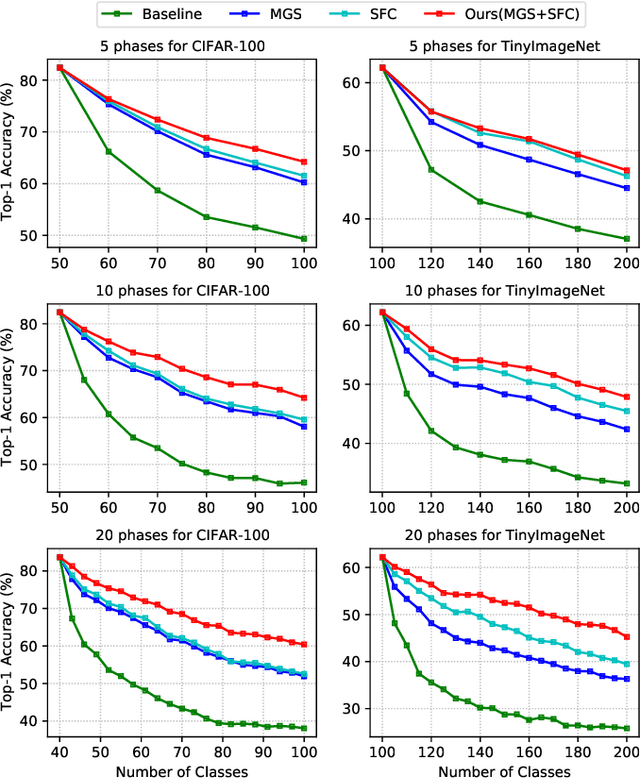

Despite their outstanding performance on many individual tasks, deep neural networks suffer from catastrophic forgetting when learning from continuous data streams in real-world scenarios. Current Non-Exemplar Class-Incremental Learning (NECIL) methods mitigate forgetting by storing a single prototype per class, which is used to inject information about previous classes while new classes are learned sequentially. However, these stored prototypes and their augmented variants often fail to capture both the diversity and the precision of the spatial distribution needed to represent old classes. Moreover, as the model acquires new knowledge, the prototypes gradually become outdated and therefore less effective. To overcome these limitations, we propose a more efficient NECIL method that replaces prototypes with synthesized retrospective features for old classes. Specifically, we model each old class's feature space with a multivariate Gaussian distribution and generate deep representations by sampling from its high-likelihood regions. Additionally, we introduce a similarity-based feature compensation mechanism that integrates the generated old-class features with similar new-class features to synthesize robust retrospective representations. These retrospective features are then incorporated into our incremental learning framework to preserve the decision boundaries of previous classes while new ones are learned. Extensive experiments on CIFAR-100, TinyImageNet, and ImageNet-Subset demonstrate that our method significantly improves the efficiency of non-exemplar class-incremental learning and achieves state-of-the-art performance.
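The two ideas in the abstract, Gaussian modeling of old-class features and similarity-based compensation, can be illustrated with a rough NumPy sketch. This is not the authors' implementation: the abstract does not give the exact sampling rule or compensation formula, so the function names, the Mahalanobis radius `max_radius`, the projection of far draws back onto that radius, and the blending weight `alpha` are all assumptions made for illustration.

```python
import numpy as np

def fit_class_gaussian(features, eps=1e-3):
    """Estimate mean and covariance of one class's deep features (rows = samples)."""
    mean = features.mean(axis=0)
    # A small ridge keeps the covariance positive definite (assumed regularizer).
    cov = np.cov(features, rowvar=False) + eps * np.eye(features.shape[1])
    return mean, cov

def sample_high_likelihood(mean, cov, n, max_radius, rng):
    """Draw n features whose Mahalanobis distance to the mean is <= max_radius.

    Assumption: "high-likelihood region" is taken to be a Mahalanobis ball;
    draws that land outside it are pulled back onto its boundary.
    """
    chol = np.linalg.cholesky(cov)              # cov = chol @ chol.T
    z = rng.standard_normal((n, mean.shape[0]))  # whitened draws
    norms = np.linalg.norm(z, axis=1, keepdims=True)
    z = z * np.minimum(1.0, max_radius / np.maximum(norms, 1e-12))
    return mean + z @ chol.T

def compensate(old_feats, new_feats, alpha=0.2):
    """Blend each generated old-class feature with its most similar new-class feature.

    Assumption: similarity is cosine similarity and the blend is a convex
    combination with weight alpha on the new-class feature.
    """
    old_n = old_feats / (np.linalg.norm(old_feats, axis=1, keepdims=True) + 1e-12)
    new_n = new_feats / (np.linalg.norm(new_feats, axis=1, keepdims=True) + 1e-12)
    nearest = (old_n @ new_n.T).argmax(axis=1)   # index of most similar new feature
    return (1.0 - alpha) * old_feats + alpha * new_feats[nearest]

# Toy usage with random stand-ins for backbone features.
rng = np.random.default_rng(0)
old_class_feats = rng.normal(loc=3.0, size=(200, 4))  # seen in an earlier task
new_class_feats = rng.normal(loc=0.0, size=(30, 4))   # current-task features

mean, cov = fit_class_gaussian(old_class_feats)
generated = sample_high_likelihood(mean, cov, n=50, max_radius=2.0, rng=rng)
retrospective = compensate(generated, new_class_feats, alpha=0.2)
```

Under these assumptions, only the per-class mean and covariance need to be kept between tasks, and `retrospective` would stand in for old-class inputs to the classifier when training on new classes.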
