Dynamic Sampling Convolutional Neural Networks

Mar 22, 2018

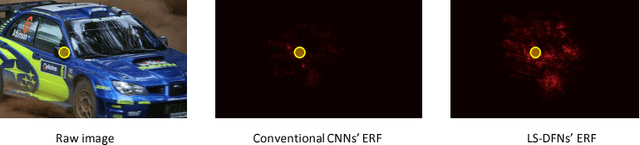

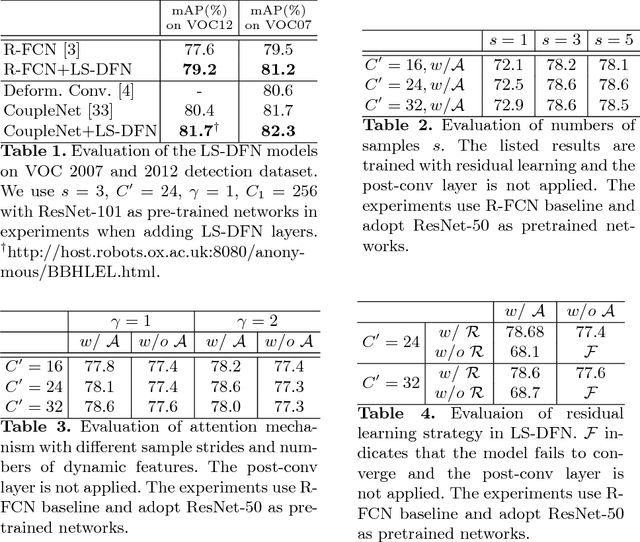

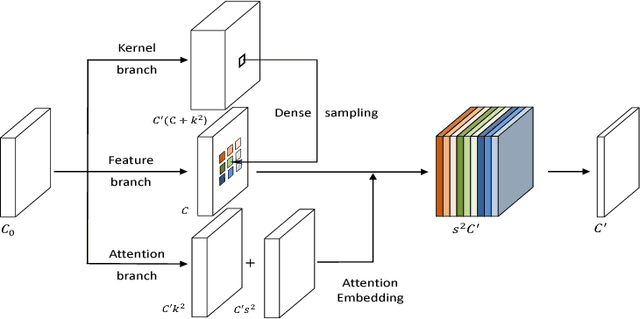

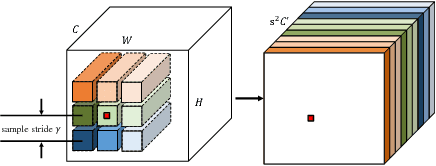

We present Dynamic Sampling Convolutional Neural Networks (DSCNNs), in which position-specific kernels learn not only from the current position but also from multiple sampled neighbour regions. During sampling, residual learning is introduced to ease training, and an attention mechanism is applied to fuse features from different samples. The kernels are further factorized to reduce the number of parameters. The multiple-sampling strategy significantly enlarges the effective receptive field without requiring more parameters. DSCNNs inherit the advantages of Dynamic Filter Networks (DFN), namely avoiding feature-map blurring through position-specific kernels while keeping translation invariance, and they also efficiently alleviate the overfitting caused by having many more parameters than normal CNNs. Our model is efficient and can be trained end-to-end via standard back-propagation. We demonstrate the merits of DSCNNs on both sparse and dense prediction tasks, namely object detection and flow estimation. Our results show that DSCNNs have stronger recognition abilities and achieve 81.7% on the VOC2012 detection dataset. DSCNNs also obtain much sharper responses in flow estimation on the FlyingChairs dataset than multiple FlowNet baseline models.
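To make the sampling-and-fusion idea concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a single-channel feature map, a shared linear map `w_kernel` that turns the patch at each sampled neighbour region into a k×k kernel, and a linear scorer `w_attn` that produces one attention logit per sample. The function names, the offsets, and both weight arrays are hypothetical. Residual learning and kernel factorization are omitted, and borders wrap around for simplicity.

```python
import numpy as np

def softmax(z, axis=-1):
    # Numerically stable softmax along the given axis.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def extract_patches(x, k):
    # Return an (H, W, k*k) array of zero-padded k x k patches, one per pixel.
    r = k // 2
    xp = np.pad(x, r)
    H, W = x.shape
    patches = np.empty((H, W, k * k))
    for i in range(H):
        for j in range(W):
            patches[i, j] = xp[i:i + k, j:j + k].ravel()
    return patches

def dynamic_sampling_conv(x, w_kernel, w_attn, offsets, k=3):
    # x: (H, W) feature map.
    # w_kernel: (k*k, k*k) hypothetical kernel generator (assumption).
    # w_attn: (k*k,) hypothetical attention scorer (assumption).
    # offsets: list of (dy, dx) positions of sampled neighbour regions.
    patches = extract_patches(x, k)
    outputs, logits = [], []
    for dy, dx in offsets:
        # Patch centred at (i + dy, j + dx) for every (i, j); borders wrap.
        sampled = np.roll(patches, shift=(-dy, -dx), axis=(0, 1))
        # One position-specific kernel per pixel, generated from the sample.
        kernels = sampled @ w_kernel
        # Apply each pixel's own kernel to the patch at the current position.
        outputs.append((kernels * patches).sum(axis=-1))
        logits.append(sampled @ w_attn)
    outputs = np.stack(outputs, axis=-1)              # (H, W, S)
    attn = softmax(np.stack(logits, axis=-1), axis=-1)  # (H, W, S)
    # Attention-weighted fusion of the per-sample responses.
    return (attn * outputs).sum(axis=-1), attn

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8))
offsets = [(0, 0), (-2, 0), (2, 0), (0, -2), (0, 2)]
w_kernel = 0.1 * rng.standard_normal((9, 9))
w_attn = 0.1 * rng.standard_normal(9)
y, attn = dynamic_sampling_conv(x, w_kernel, w_attn, offsets)
print(y.shape, attn.shape)
```

In this sketch the generator weights are shared across all samples, so adding more sampled regions widens the area that influences each output pixel without adding parameters, which mirrors the receptive-field claim in the abstract.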
