Dynamic Processing Neural Network Architecture For Hearing Loss Compensation


Oct 25, 2023

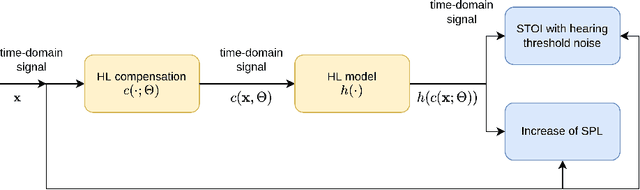

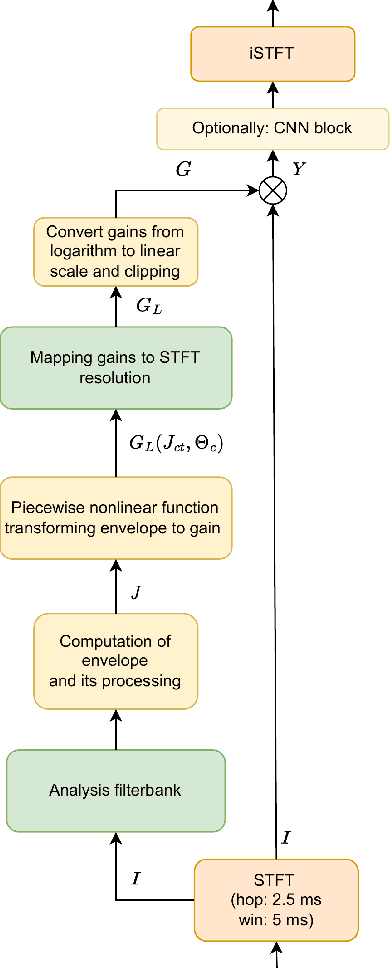

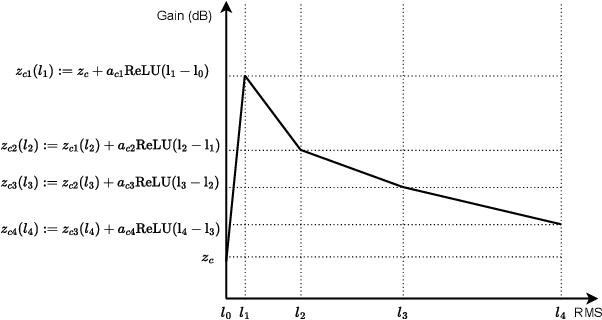

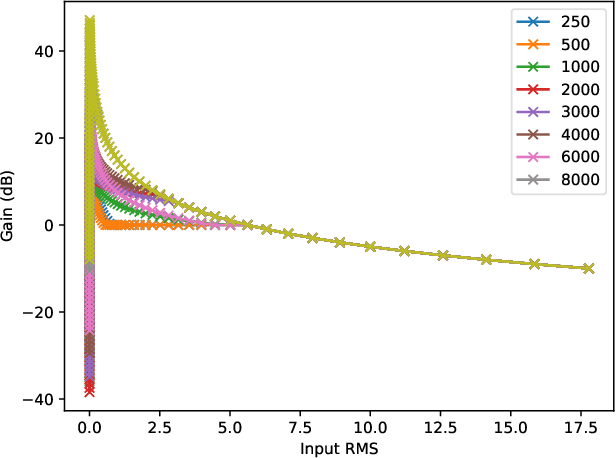

This paper proposes neural networks for compensating sensorineural hearing loss. The aim of the hearing loss compensation task is to transform a speech signal so that it remains intelligible after further processing by the auditory system of a person with a hearing impairment, which is represented here by a hearing loss model. We propose an interpretable model called the dynamic processing network, which has a structure similar to a band-wise dynamic compressor. The network is differentiable, so its parameters can be learned to maximize speech intelligibility. More generic models based on convolutional layers were tested as well. The performance of the tested architectures was assessed using two metrics: short-time objective intelligibility (STOI) with hearing-threshold noise, and the hearing-aid speech perception index (HASPI). The dynamic processing network gave a significant improvement in STOI and HASPI compared with the popular compressive gain prescription rule Camfit. A sufficiently large convolutional network could outperform the interpretable model, at the cost of a larger computational load. Finally, a combination of the dynamic processing network with a convolutional neural network gave the best results in terms of STOI and HASPI.
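To make the idea of a band-wise dynamic compressor with learnable parameters more concrete, the following is a minimal sketch of a smooth static gain curve applied per frequency band. It is not the authors' architecture, which the abstract does not specify in detail. The function names, the parameter set (`threshold_db`, `ratio`, `makeup_db`, `knee_db`) and the softplus knee are all assumptions chosen for illustration. The knee is smooth, so the gain stays differentiable with respect to every parameter. This is the property that would let such parameters be fitted by gradient descent.

```python
import math


def softplus(x, sharpness=1.0):
    """Smooth approximation of max(x, 0); `sharpness` sets the knee width in dB."""
    z = x / sharpness
    # Numerically stable form: max(z, 0) + log(1 + exp(-|z|))
    return sharpness * (max(z, 0.0) + math.log1p(math.exp(-abs(z))))


def band_gain_db(level_db, threshold_db, ratio, makeup_db, knee_db=1.0):
    """Gain (in dB) of a hypothetical soft-knee compressor for one band.

    Below the threshold the gain is close to `makeup_db`; above it, each
    extra dB of input level raises the output by only 1/ratio dB.
    """
    overshoot_db = softplus(level_db - threshold_db, knee_db)
    return makeup_db - (1.0 - 1.0 / ratio) * overshoot_db


def compress_bands(levels_db, params):
    """Apply a per-band gain curve to a list of band levels (all in dB).

    `params` holds one dict per band; in a trainable model these values
    would be the learnable parameters (assumption for illustration).
    """
    return [lvl + band_gain_db(lvl, **p) for lvl, p in zip(levels_db, params)]


if __name__ == "__main__":
    # Two illustrative bands with made-up settings.
    params = [
        {"threshold_db": 60.0, "ratio": 2.0, "makeup_db": 10.0},
        {"threshold_db": 50.0, "ratio": 3.0, "makeup_db": 20.0},
    ]
    print(compress_bands([40.0, 80.0], params))
```

In a trainable version, the same formula would be written in an automatic-differentiation framework and the per-band parameters would be optimized against an intelligibility-based loss. The values above are placeholders, not fitted results.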
