Dynamic Allocation Hypernetwork with Adaptive Model Recalibration for Federated Continual Learning

Paper and Code

Mar 25, 2025

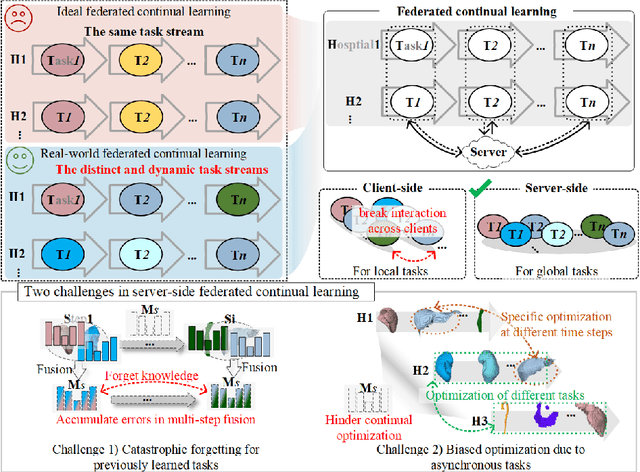

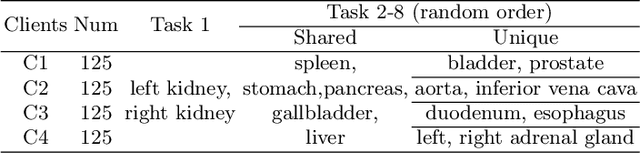

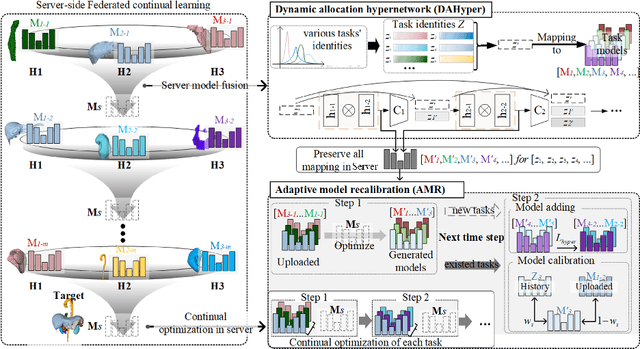

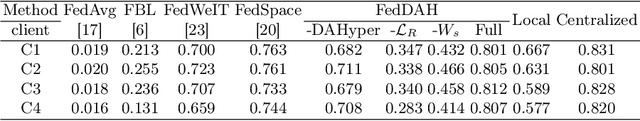

Federated continual learning (FCL) is an emerging paradigm that extends federated learning (FL) to real-world scenarios in which tasks evolve dynamically and asynchronously across clients, as is common in medical settings. Existing server-side FCL methods, developed for the natural image domain, build a continually learnable server model by aggregating clients over all involved tasks. However, they face two challenges: (1) catastrophic forgetting of previously learned tasks, which causes errors to accumulate in the server model and makes it difficult to retain comprehensive knowledge across all tasks; and (2) biased optimization caused by asynchronous tasks being handled on different clients, so that the optimization targets of different clients collide at the same time step. In this work, we take a first step toward a novel server-side FCL pattern for the medical domain: Dynamic Allocation Hypernetwork with adaptive model recalibration (FedDAH), which facilitates collaborative learning under distinct and dynamic task streams across clients. To alleviate catastrophic forgetting, we propose a dynamic allocation hypernetwork (DAHyper), in which a continually updated hypernetwork manages the mapping between task identities and their associated model parameters, enabling dynamic allocation of the model across clients. To address biased optimization, we introduce adaptive model recalibration (AMR), which incorporates the candidate changes of historical models into current server updates and assigns weights to identical tasks at different time steps based on their similarity for continual optimization. Extensive experiments on the AMOS dataset demonstrate the superiority of FedDAH over other FCL methods on sites with different task streams. The code is available at https://github.com/jinlab-imvr/FedDAH.
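
The two ideas named in the abstract can be illustrated with a toy sketch. This is not the authors' implementation (see the linked repository for that); it only assumes, for illustration, that a hypernetwork is a single linear map from a task embedding to a flat parameter vector, and that recalibration blends the current server update with historical updates for the same task using softmax weights over cosine similarity. The names `hyper_forward`, `recalibrate`, and `temperature` are hypothetical and do not come from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def hyper_forward(task_embedding, weight, bias):
    """Toy linear hypernetwork (an assumption): task embedding -> flat parameters.

    `weight` is a list of rows, one row per generated parameter.
    """
    return [
        sum(w * e for w, e in zip(row, task_embedding)) + b
        for row, b in zip(weight, bias)
    ]


def recalibrate(current_update, historical_updates, temperature=1.0):
    """Blend the current update with historical updates for the same task.

    Weights are a softmax over cosine similarity to the current update.
    This weighting rule is an illustrative assumption, not the paper's formula.
    """
    candidates = [current_update] + list(historical_updates)
    sims = [cosine(current_update, c) for c in candidates]
    top = max(sims)  # subtract the max for numerical stability
    exps = [math.exp((s - top) / temperature) for s in sims]
    total = sum(exps)
    weights = [e / total for e in exps]
    blended = [
        sum(w * c[i] for w, c in zip(weights, candidates))
        for i in range(len(current_update))
    ]
    return blended, weights


# Each task identity maps to its own parameters through the shared hypernetwork.
params = hyper_forward([1.0, 2.0], [[1, 0], [0, 1], [1, 1]], [0.0, 0.0, 0.5])

# A historical update pointing the same way gets more weight than an opposing one.
blended, weights = recalibrate([1.0, 0.0], [[1.0, 0.0], [-1.0, 0.0]])
```

In this sketch, a historical update that agrees with the current direction keeps most of its influence, while a conflicting one is down-weighted rather than discarded, which is one simple way to read "assign weights based on similarity".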
