DTVNet: Dynamic Time-lapse Video Generation via Single Still Image

Paper and Code

Aug 11, 2020

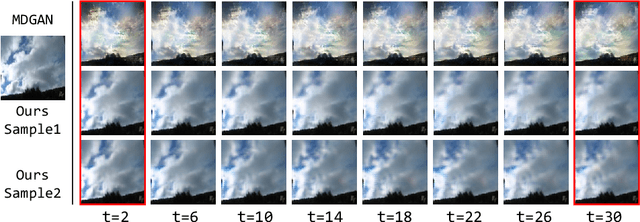

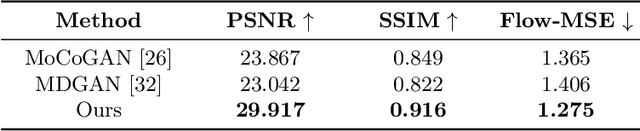

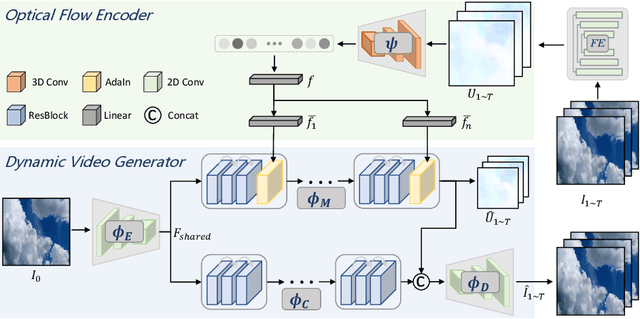

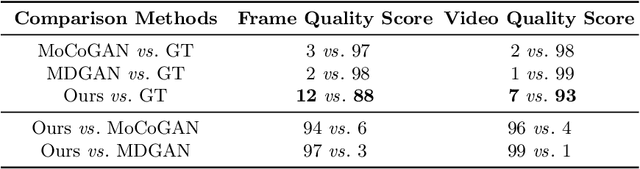

This paper presents a novel end-to-end dynamic time-lapse video generation framework, named DTVNet, which generates diversified time-lapse videos from a single landscape image, conditioned on normalized motion vectors. The proposed DTVNet consists of two submodules: the Optical Flow Encoder (OFE) and the Dynamic Video Generator (DVG). The OFE maps a sequence of optical flow maps to a normalized motion vector that encodes the motion information in the generated video. The DVG contains a motion stream and a content stream, which learn from the motion vector and the single image respectively, together with an encoder that learns shared content features and a decoder that constructs video frames with the corresponding motion. Specifically, the motion stream introduces multiple adaptive instance normalization (AdaIN) layers to integrate multi-level motion information that is processed by linear layers. In the testing stage, videos with the same content but different motion can be generated from a single input image by supplying different normalized motion vectors. We further conduct experiments on the Sky Time-lapse dataset, and the results demonstrate the superiority of our approach over state-of-the-art methods in generating high-quality, dynamic videos, as well as its ability to generate videos with varied motion information.
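To make the role of the AdaIN layers more concrete, the following is a minimal NumPy sketch of how a motion vector might modulate content features. It is not the authors' implementation: the function names `adain` and `motion_to_style`, the tensor sizes, the random weights, and the reading of "normalized" as unit L2 norm are all assumptions made for illustration.

```python
import numpy as np

def adain(features, scale, shift, eps=1e-5):
    """Adaptive instance normalization on a (C, H, W) feature map.

    Each channel is normalized to zero mean and unit variance, then
    rescaled and shifted by per-channel parameters of shape (C,).
    """
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)
    return scale[:, None, None] * normalized + shift[:, None, None]

def motion_to_style(motion_vector, weight, bias):
    """Hypothetical linear layer mapping a motion vector (D,) to 2*C AdaIN parameters."""
    params = weight @ motion_vector + bias
    scale, shift = np.split(params, 2)
    return scale, shift

rng = np.random.default_rng(0)
C, H, W, D = 4, 8, 8, 16  # illustrative sizes, not taken from the paper

features = rng.normal(size=(C, H, W))           # stand-in for content features
motion_vector = rng.normal(size=D)
motion_vector /= np.linalg.norm(motion_vector)  # assumption: "normalized" means unit L2 norm

weight = rng.normal(size=(2 * C, D))            # random stand-in for learned weights
bias = np.concatenate([np.ones(C), np.zeros(C)])

scale, shift = motion_to_style(motion_vector, weight, bias)
out = adain(features, scale, shift)
```

In this sketch, changing `motion_vector` while keeping `features` fixed changes only the per-channel statistics of `out`, which mirrors the idea of generating different motion from the same image content.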
