DROCC: Deep Robust One-Class Classification

Feb 28, 2020

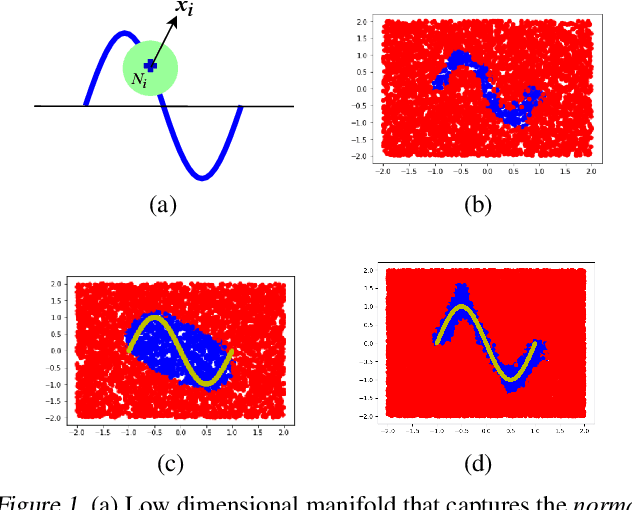

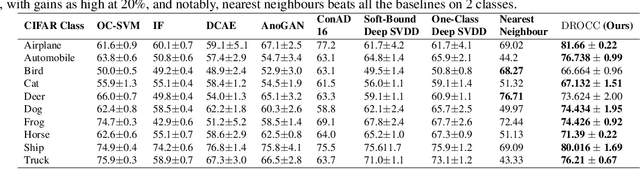

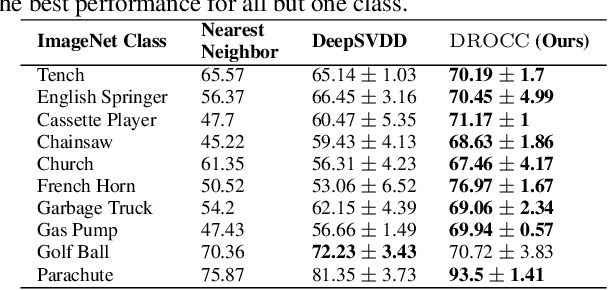

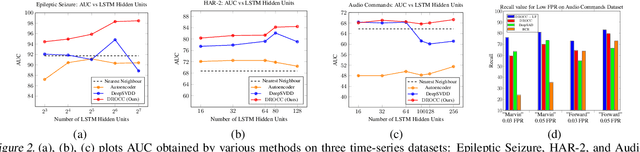

Classical approaches for one-class problems, such as one-class SVM (Schölkopf et al., 1999) and isolation forest (Liu et al., 2008), require careful feature engineering when applied to structured domains like images. To alleviate this concern, state-of-the-art methods like DeepSVDD (Ruff et al., 2018) consider the natural alternative of minimizing a classical one-class loss applied to the learned final-layer representations. However, such an approach suffers from a fundamental drawback: a representation that simply collapses all inputs to a single point minimizes the one-class loss, and heuristics to mitigate collapsed representations provide limited benefits. In this work, we propose Deep Robust One-Class Classification (DROCC), a method that is robust to such a collapse because it trains the network to distinguish the training points from adversarially generated perturbations of them. DROCC is motivated by the assumption that the class of interest lies on a locally linear, low-dimensional manifold. Empirical evaluation demonstrates DROCC's effectiveness on two different one-class problem settings and on a range of real-world datasets across different domains: images (CIFAR and ImageNet), audio, and time series. DROCC offers up to a 20% increase in accuracy over the state of the art in anomaly detection.
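The core idea, training a scorer to separate each training point from an adversarially chosen perturbation of it, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the abstract: the linear scorer stands in for a deep network, the perturbation is constrained to a shell around the point defined by a hypothetical radius `r` and factor `gamma`, and the weight `mu`, the step size, and all function names are made up. It is not the authors' implementation.

```python
import math
import random


def score(w, b, x):
    """Linear scorer (stand-in for a deep network); larger means 'more normal'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def project_to_shell(h, r, gamma):
    """Rescale perturbation h so that r <= ||h|| <= gamma * r (assumed constraint)."""
    norm = math.sqrt(sum(v * v for v in h))
    if norm == 0.0:
        # Degenerate case: pick an arbitrary direction of length r.
        return [r] + [0.0] * (len(h) - 1)
    target = min(max(norm, r), gamma * r)
    return [v * target / norm for v in h]


def adversarial_perturbation(w, b, x, r, gamma, steps=5, lr=0.5, rng=random):
    """Search near x for a point that the scorer wrongly treats as normal."""
    h = project_to_shell([rng.gauss(0.0, 1.0) for _ in x], r, gamma)
    for _ in range(steps):
        # Loss of labelling x + h as anomalous: -log(1 - sigmoid(f(x + h))).
        # Its gradient with respect to h is sigmoid(f(x + h)) * w for a linear f.
        p = sigmoid(score(w, b, [xi + hi for xi, hi in zip(x, h)]))
        h = [hi + lr * p * wi for hi, wi in zip(h, w)]  # gradient ascent step
        h = project_to_shell(h, r, gamma)
    return [xi + hi for xi, hi in zip(x, h)]


def robust_one_class_loss(w, b, x, x_adv, mu=1.0):
    """Training point should score as normal, its perturbation as anomalous."""
    positive = -math.log(sigmoid(score(w, b, x)))
    negative = -math.log(1.0 - sigmoid(score(w, b, x_adv)))
    return positive + mu * negative
```

Because the second loss term requires the scorer to respond differently to `x` and to `x_adv`, a scorer that maps every input to the same value cannot drive both terms down at once, which is the intuition behind robustness to collapse.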
