Does image resolution impact chest X-ray based fine-grained Tuberculosis-consistent lesion segmentation?


Jan 10, 2023

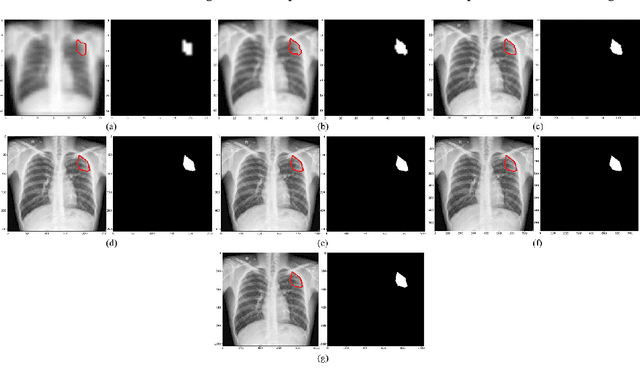

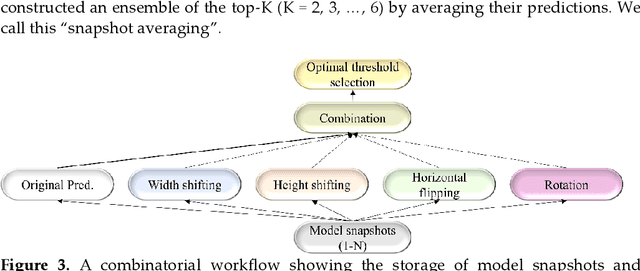

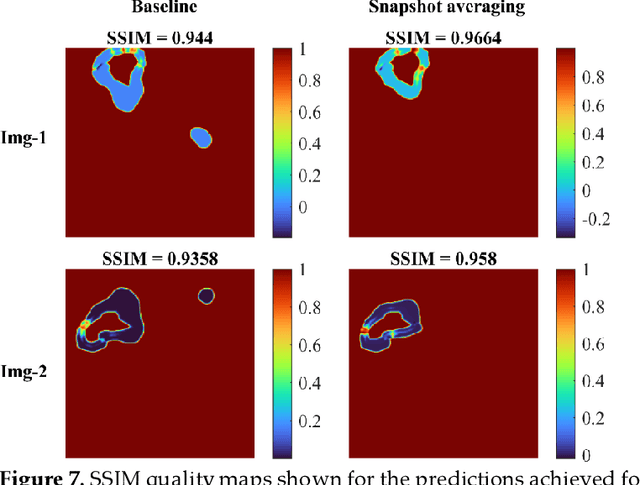

Deep learning (DL) models are becoming state-of-the-art in segmenting anatomical and disease regions of interest (ROIs) in medical images, particularly chest X-rays (CXRs). However, these models are reportedly trained on reduced image resolutions, with a lack of computational resources cited as the reason. The literature on identifying the optimal image resolution for training these models on a given task is sparse, particularly for the segmentation of Tuberculosis (TB)-consistent lesions in CXRs. In this study, we (i) used the Shenzhen TB CXR dataset to investigate the performance gains achieved by training an Inception-V3-based UNet model at various image/mask resolutions, with and without lung ROI cropping and aspect ratio adjustments, and (ii) identified the optimal image resolution for TB-consistent lesion segmentation through extensive empirical evaluations. To further improve performance at the optimal resolution, we proposed a combinatorial approach consisting of storing model snapshots, optimizing test-time augmentation (TTA) methods, and selecting the optimal segmentation threshold. We emphasize that (i) higher image resolutions are not always necessary and (ii) identifying the optimal image resolution is indispensable for achieving superior performance on the task under study.
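
The threshold-selection step mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes that the model outputs per-pixel probability maps, that the Dice coefficient on a held-out validation set is the selection criterion, and that a coarse grid of candidate thresholds is searched. The function names `dice_score` and `select_threshold` are hypothetical and chosen only for illustration.

```python
import numpy as np


def dice_score(pred_mask, true_mask, eps=1e-7):
    """Dice coefficient between two binary masks (assumed metric)."""
    pred = np.asarray(pred_mask).astype(bool)
    true = np.asarray(true_mask).astype(bool)
    intersection = np.logical_and(pred, true).sum()
    # eps keeps the ratio defined when both masks are empty
    return (2.0 * intersection + eps) / (pred.sum() + true.sum() + eps)


def select_threshold(prob_maps, true_masks, candidates=None):
    """Return the candidate threshold with the highest mean Dice.

    prob_maps:  iterable of 2-D arrays with values in [0, 1]
    true_masks: iterable of 2-D binary arrays of matching shapes
    candidates: thresholds to try (assumed grid: 0.1, 0.2, ..., 0.9)
    """
    if candidates is None:
        candidates = np.linspace(0.1, 0.9, 9)
    best_t, best_dice = None, -1.0
    for t in candidates:
        scores = [dice_score(p >= t, m) for p, m in zip(prob_maps, true_masks)]
        mean_dice = float(np.mean(scores))
        # strict '>' keeps the lowest threshold in case of ties
        if mean_dice > best_dice:
            best_t, best_dice = float(t), mean_dice
    return best_t, best_dice


if __name__ == "__main__":
    # Toy validation example: one 2x2 probability map and its ground truth
    probs = [np.array([[0.20, 0.45], [0.55, 0.90]])]
    masks = [np.array([[0, 0], [1, 1]])]
    t, d = select_threshold(probs, masks)
    print(f"selected threshold: {t:.2f}, mean Dice: {d:.3f}")
```

In practice the threshold would be chosen on validation data and then applied unchanged to the test set, so that the reported test performance is not biased by the selection.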
