DMN4: Few-shot Learning via Discriminative Mutual Nearest Neighbor Neural Network


Mar 15, 2021

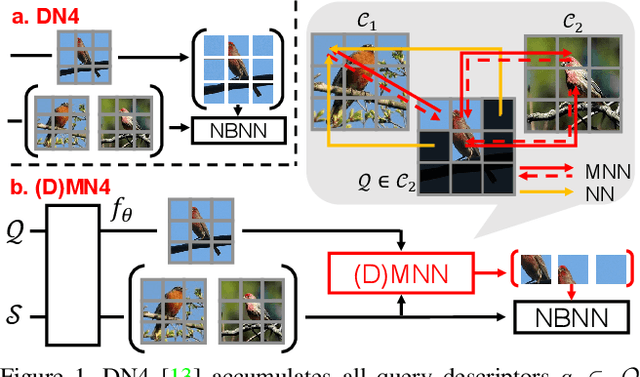

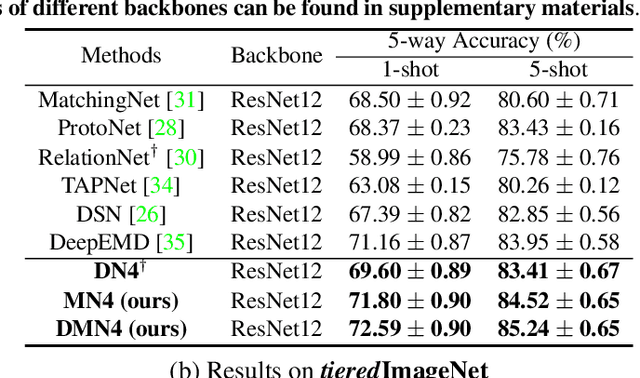

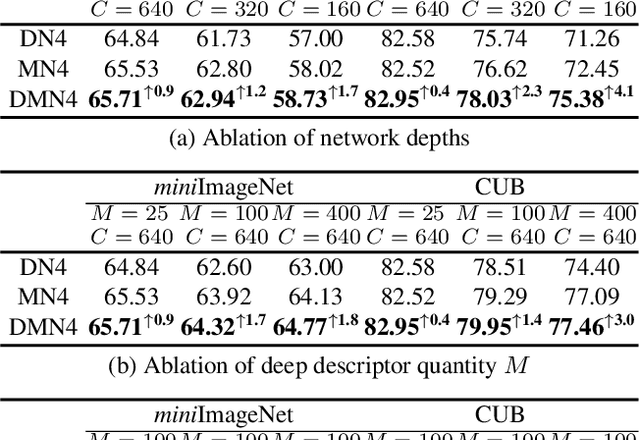

Few-shot learning (FSL) aims to classify images under low-data regimes, where the conventional pooled global representation is likely to lose useful local characteristics. Recent work has achieved promising performance by using deep descriptors. These methods generally take all deep descriptors from the neural network into consideration, ignoring that some of them are useless for classification because of their limited receptive field: task-irrelevant descriptors can be misleading, and multiple aggregative descriptors from background clutter can even overwhelm the object's presence. In this paper, we argue that a Mutual Nearest Neighbor (MNN) relation should be established to explicitly select the query descriptors that are most relevant to each task and to discard the less relevant ones arising from aggregative clutter. Specifically, we propose the Discriminative Mutual Nearest Neighbor Neural Network (DMN4) for FSL. Extensive experiments demonstrate that our method not only qualitatively selects task-relevant descriptors but also quantitatively outperforms the existing state of the art by a large margin of 1.8 to 4.9% on fine-grained CUB, by a considerable margin of 1.4 to 2.2% on both supervised and semi-supervised miniImageNet, and by about 1.4% on the challenging tieredImageNet.
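
To make the mutual nearest neighbor relation concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function names, the choice of cosine similarity, and the toy two-dimensional descriptors are all assumptions made for illustration, and the discriminative part of DMN4 is not covered because the abstract does not describe it. A query descriptor is kept only if its nearest support descriptor also has that query descriptor as its own nearest neighbor.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (assumed metric)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def mutual_nearest_neighbors(query, support):
    """Return (query_index, support_index) pairs that are each other's
    nearest neighbor. Hypothetical helper, for illustration only."""
    sim = [[cosine(q, s) for s in support] for q in query]
    # Nearest support descriptor for every query descriptor.
    q2s = [max(range(len(support)), key=lambda j: row[j]) for row in sim]
    # Nearest query descriptor for every support descriptor.
    s2q = [max(range(len(query)), key=lambda i: sim[i][j])
           for j in range(len(support))]
    # Keep a pair only when the relation holds in both directions.
    return [(i, j) for i, j in enumerate(q2s) if s2q[j] == i]


# Toy descriptors: query 1 is close to support 0, but support 0 prefers
# query 0, so query 1 has no mutual partner and is discarded.
query = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
support = [[1.0, 0.05], [0.1, 1.0]]
pairs = mutual_nearest_neighbors(query, support)
print(pairs)  # [(0, 0), (2, 1)]
```

In this toy case the one-directional nearest neighbor search would keep all three query descriptors, while the mutual check keeps only two, which is the kind of selection the abstract argues for.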
